ControlNet and T2I-Adapter - ComfyUI workflow examples

Note that in these examples, the raw image is passed directly to the ControlNet/T2I adapter.

Each ControlNet/T2I adapter needs the image passed to it to be in a specific format, such as a depth map or an edge map, depending on the specific model. Without the right kind of input image, results will be poor.

The ControlNetApply node will not convert regular images into depth maps, edge maps, etc. for you. You will have to do that separately, or with image-preprocessing nodes, which you can find here.
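To make "preprocessing" concrete, here is a minimal sketch of turning a grayscale image into a binary edge map with a Sobel filter, in pure Python. It is only an illustration of the kind of conversion a preprocessor performs; it is not the code used by any ComfyUI preprocessing node, and the function name and threshold are assumptions made for this example.

```python
def sobel_edge_map(gray, threshold=128):
    """Return a binary edge map (0 or 255) from a 2D list of grayscale values.

    Illustrative only: real preprocessors (canny, depth estimators, etc.)
    are more elaborate. Border pixels are left at 0.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[y][x] = 255 if magnitude >= threshold else 0
    return edges


# A 5x5 image: two dark columns on the left, three bright columns on the right.
step_image = [[0, 0, 255, 255, 255] for _ in range(5)]
step_edges = sobel_edge_map(step_image)
```

The resulting edge map, saved as an image, is the kind of input an edge-based ControlNet expects in place of the original picture.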

You can find the latest controlnet model files here: Original version or smaller fp16 safetensors version

For SDXL, stability.ai has released Control Loras, which you can find Here (rank 256) or Here (rank 128). They are used in exactly the same way as regular ControlNet model files (put them in the same directory).

ControlNet model files go in the ComfyUI/models/controlnet directory.
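As a small sketch of the file placement described above, the helper below copies a downloaded model file into the controlnet directory. The function names are hypothetical and the ComfyUI root path is whatever your installation uses; only the `models/controlnet` sub-path comes from the text.

```python
import shutil
from pathlib import Path


def controlnet_dir(comfyui_root):
    """Return the directory where ControlNet model files are expected."""
    return Path(comfyui_root) / "models" / "controlnet"


def install_controlnet_model(source_file, comfyui_root):
    """Copy a downloaded model file into ComfyUI/models/controlnet.

    Hypothetical helper: creates the directory if it is missing and
    returns the path of the copied file.
    """
    target_dir = controlnet_dir(comfyui_root)
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / Path(source_file).name
    shutil.copy2(source_file, target)
    return target
```

The same directory is used for T2I-Adapter files and for the SDXL Control Loras mentioned above.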

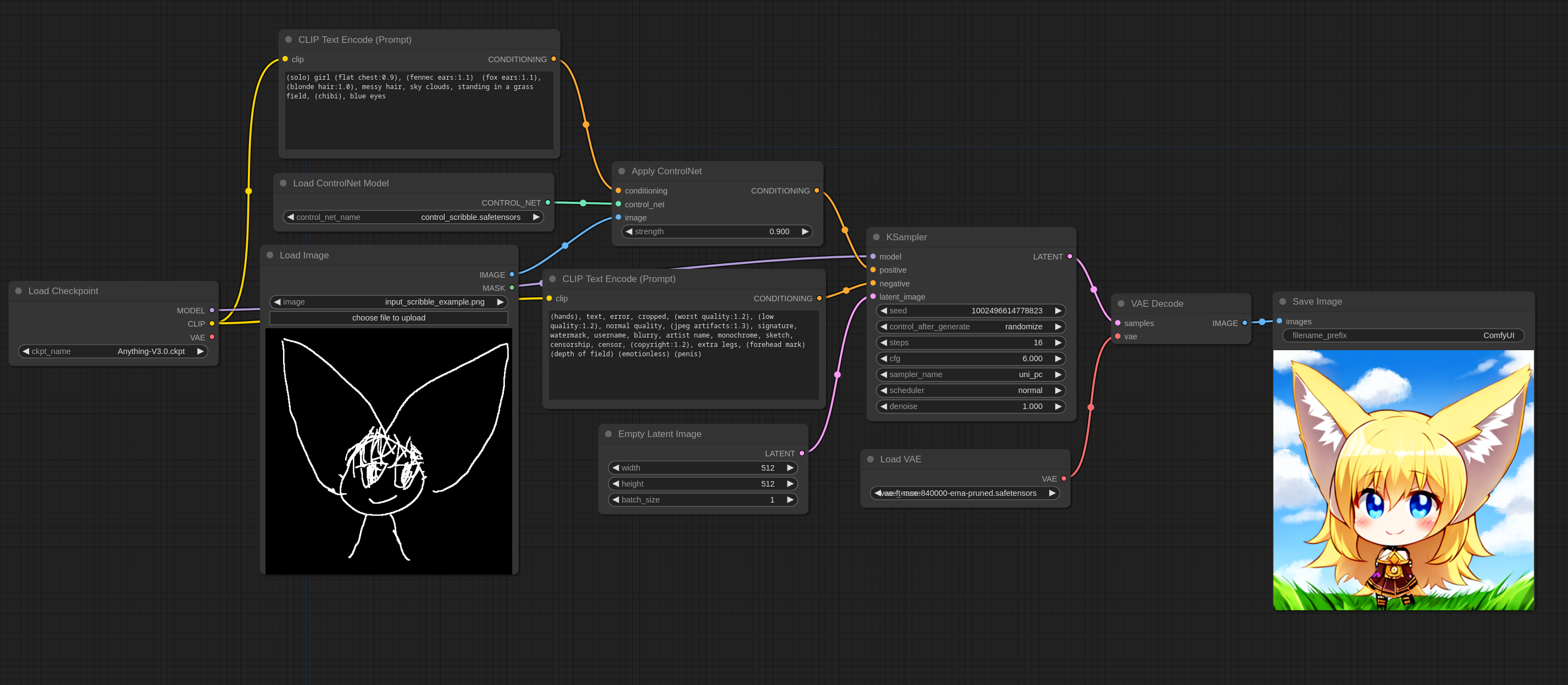

Scribble ControlNet

Here is a simple example of how to use controlnets. This example uses the scribble controlnet and the AnythingV3 model. You can load this image in ComfyUI to get the full workflow.

Here is the input image I used for this workflow:

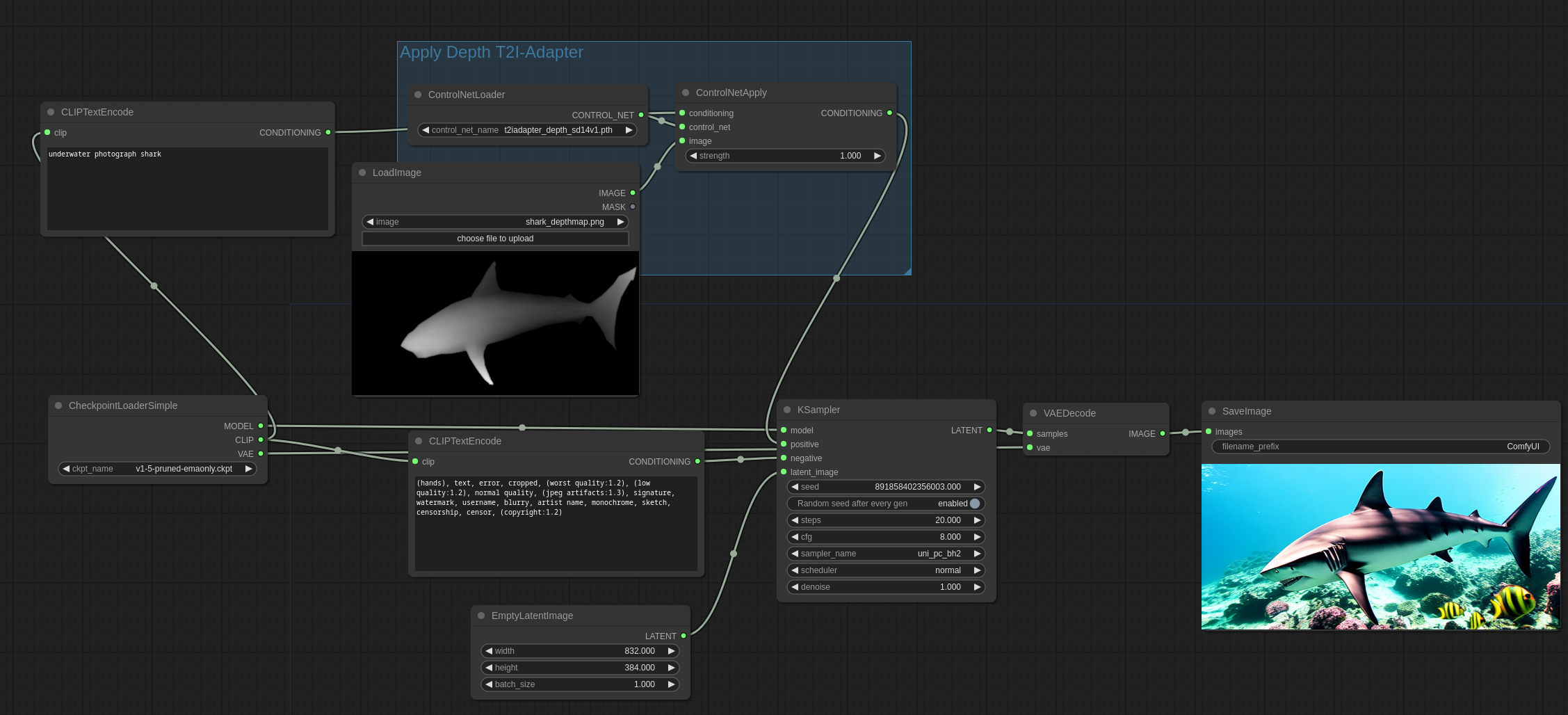

T2I-Adapter vs ControlNets

T2I-Adapters are much more efficient than ControlNets, so I highly recommend them. ControlNets slow down generation considerably, while T2I-Adapters have almost no negative impact on generation speed.

With a ControlNet, the ControlNet model is run once at every sampling iteration. With a T2I-Adapter, the model is run once in total.
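The difference in cost can be sketched with a toy loop that only counts how often the control model runs. This is not ComfyUI's sampler code; the function name and the `kind` strings are assumptions made for illustration.

```python
def count_control_model_calls(num_steps, kind):
    """Count control-model forward passes for one generation.

    kind: "controlnet" (runs at every sampling step) or
          "t2i_adapter" (runs once, before sampling starts).
    """
    if kind not in ("controlnet", "t2i_adapter"):
        raise ValueError(f"unknown control type: {kind}")

    calls = 0
    if kind == "t2i_adapter":
        calls += 1  # adapter features are computed once and reused
    for _ in range(num_steps):
        if kind == "controlnet":
            calls += 1  # the ControlNet is evaluated again at each step
    return calls


controlnet_calls = count_control_model_calls(20, "controlnet")
adapter_calls = count_control_model_calls(20, "t2i_adapter")
```

For a 20-step generation, this gives 20 control-model evaluations for the ControlNet and a single one for the T2I-Adapter, which is why the adapter adds so little overhead.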

T2I-Adapters are used in ComfyUI the same way as ControlNets: with the ControlNetLoader node.
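A rough sketch of that wiring, written as a Python dict in the shape of ComfyUI's API-format workflow JSON (node id, `class_type`, `inputs`, with links given as `[node_id, output_index]`). The node and input names reflect my understanding of the built-in nodes and should be checked against your installation; all file names are hypothetical placeholders.

```python
import json


def build_control_fragment(control_net_name, hint_image, strength=1.0):
    """Return a workflow fragment that loads a control model and applies it.

    The same fragment is used for a ControlNet or a T2I-Adapter file:
    only control_net_name changes.
    """
    return {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": "checkpoint.safetensors"}},  # hypothetical file
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": "a prompt", "clip": ["1", 1]}},
        "3": {"class_type": "LoadImage",
              "inputs": {"image": hint_image}},  # already a depth/edge map
        "4": {"class_type": "ControlNetLoader",
              "inputs": {"control_net_name": control_net_name}},
        "5": {"class_type": "ControlNetApply",
              "inputs": {"conditioning": ["2", 0],
                         "control_net": ["4", 0],
                         "image": ["3", 0],
                         "strength": strength}},
    }


# Hypothetical file names, used only to show that the graph shape is identical.
controlnet_graph = build_control_fragment("controlnet_depth.safetensors", "depth_map.png")
adapter_graph = build_control_fragment("t2iadapter_depth.safetensors", "depth_map.png")
print(json.dumps(adapter_graph["4"], indent=2))
```

The output of node "5" is a conditioning that would then feed the sampler's positive input in a complete workflow.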

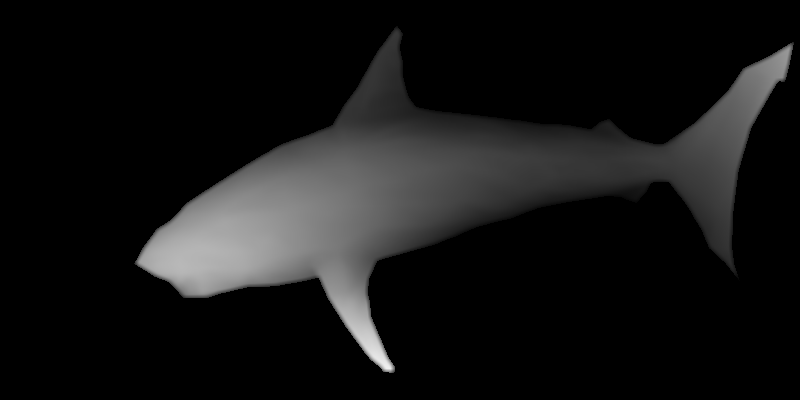

Here is the input image that will be used in this example (source):

Here is how you use the depth T2I-Adapter:

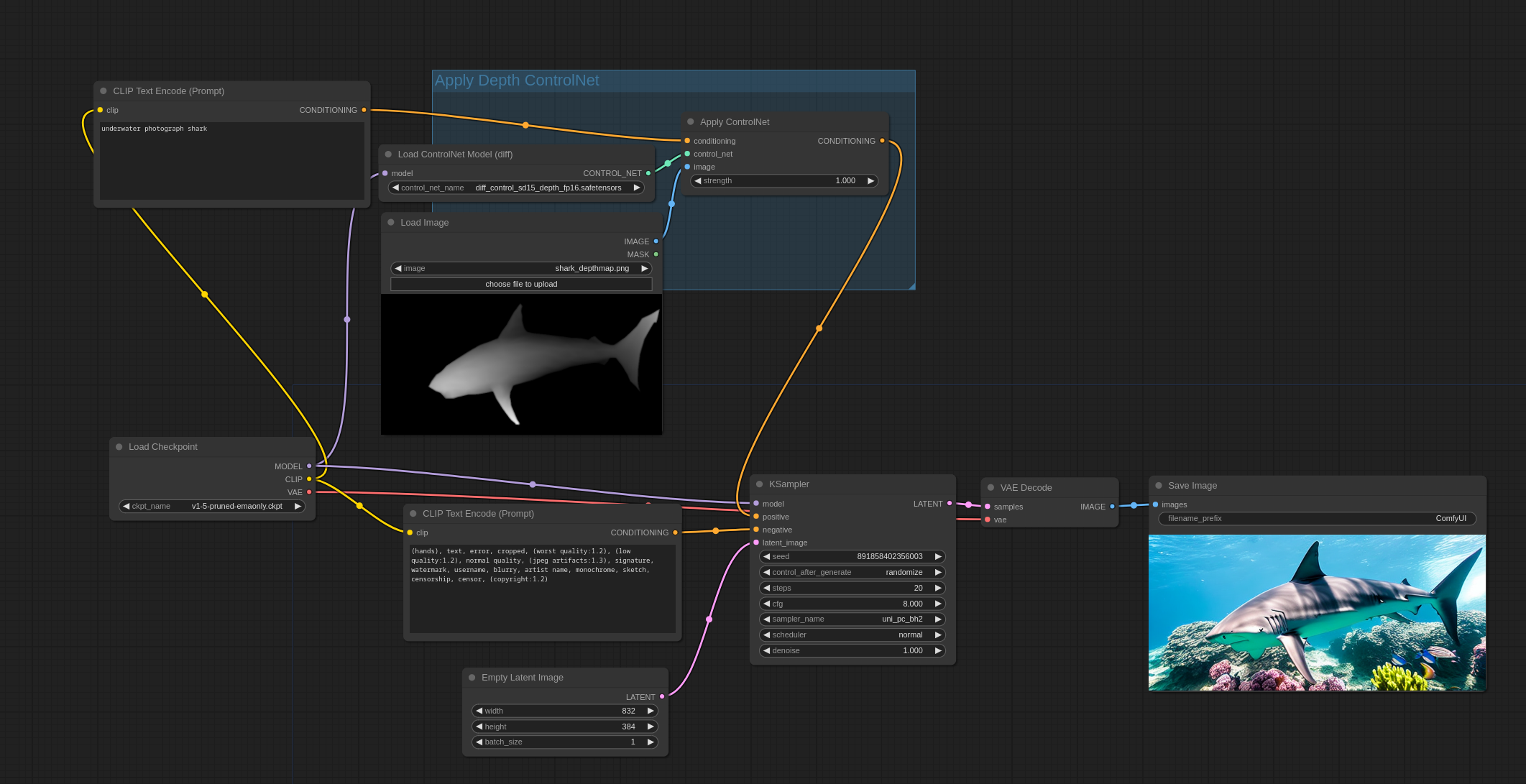

Here is how you use the depth ControlNet. Note that this example uses the DiffControlNetLoader node because the controlnet used is a diff controlnet. Diff controlnets need the weights of a model in order to be loaded correctly. The DiffControlNetLoader node can also be used to load regular controlnet models. When loading regular controlnet models, it behaves the same as the ControlNetLoader node.
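The choice between the two loaders can be sketched as a small helper that emits the loader node in API-format style. The helper itself is hypothetical; the `model` and `control_net_name` input names reflect my understanding of the built-in nodes and are worth verifying against your ComfyUI version.

```python
def control_loader_node(control_net_name, is_diff, model_link=None):
    """Return a loader node dict for a regular or a diff controlnet.

    model_link is a [node_id, output_index] link to a loaded MODEL,
    required only for diff controlnets.
    """
    if is_diff:
        if model_link is None:
            raise ValueError("a diff controlnet needs a model link to load its weights")
        return {"class_type": "DiffControlNetLoader",
                "inputs": {"model": model_link,
                           "control_net_name": control_net_name}}
    return {"class_type": "ControlNetLoader",
            "inputs": {"control_net_name": control_net_name}}


# Hypothetical file names; ["1", 0] points at a checkpoint loader's MODEL output.
diff_loader = control_loader_node("diff_depth.safetensors", True, ["1", 0])
regular_loader = control_loader_node("depth.safetensors", False)
```

In both cases the loader's output is connected to the `control_net` input of ControlNetApply in the same way.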

You can load these images in ComfyUI to get the full workflow.

Pose ControlNet

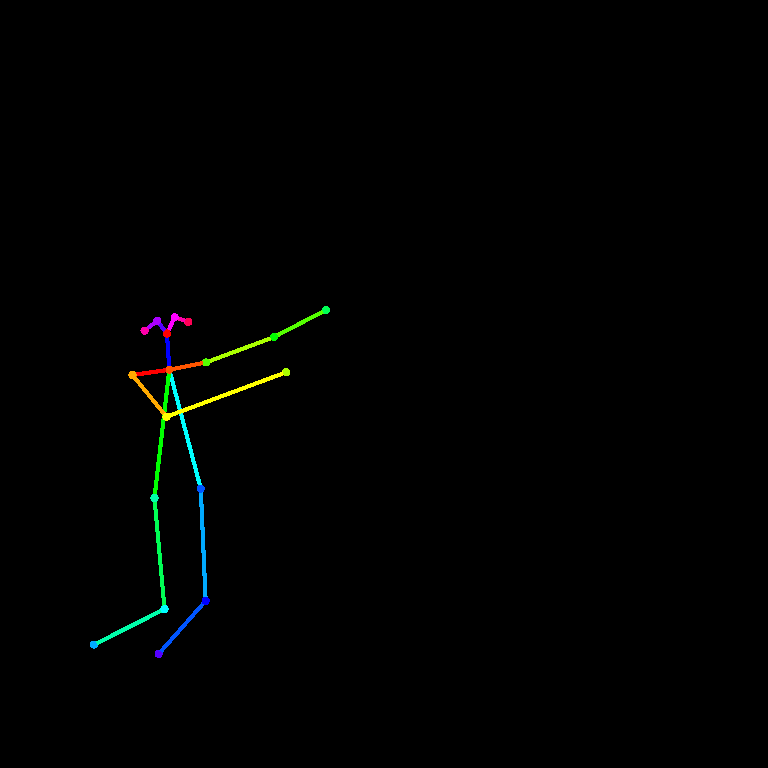

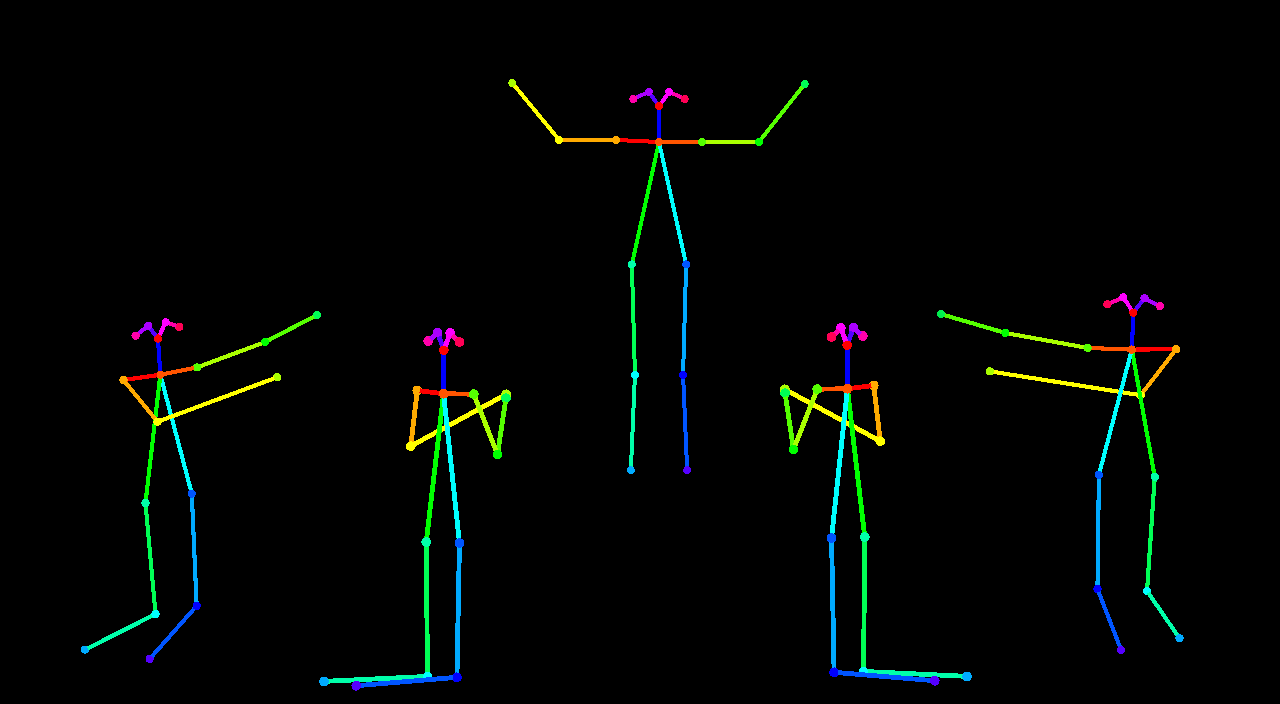

Here is the input image that will be used in this example:

Here is an example that uses a first pass with AnythingV3 with the controlnet and a second pass without the controlnet with AOM3A3 (abyss orange mix 3), using their VAE.

You can load this image in ComfyUI to get the full workflow.

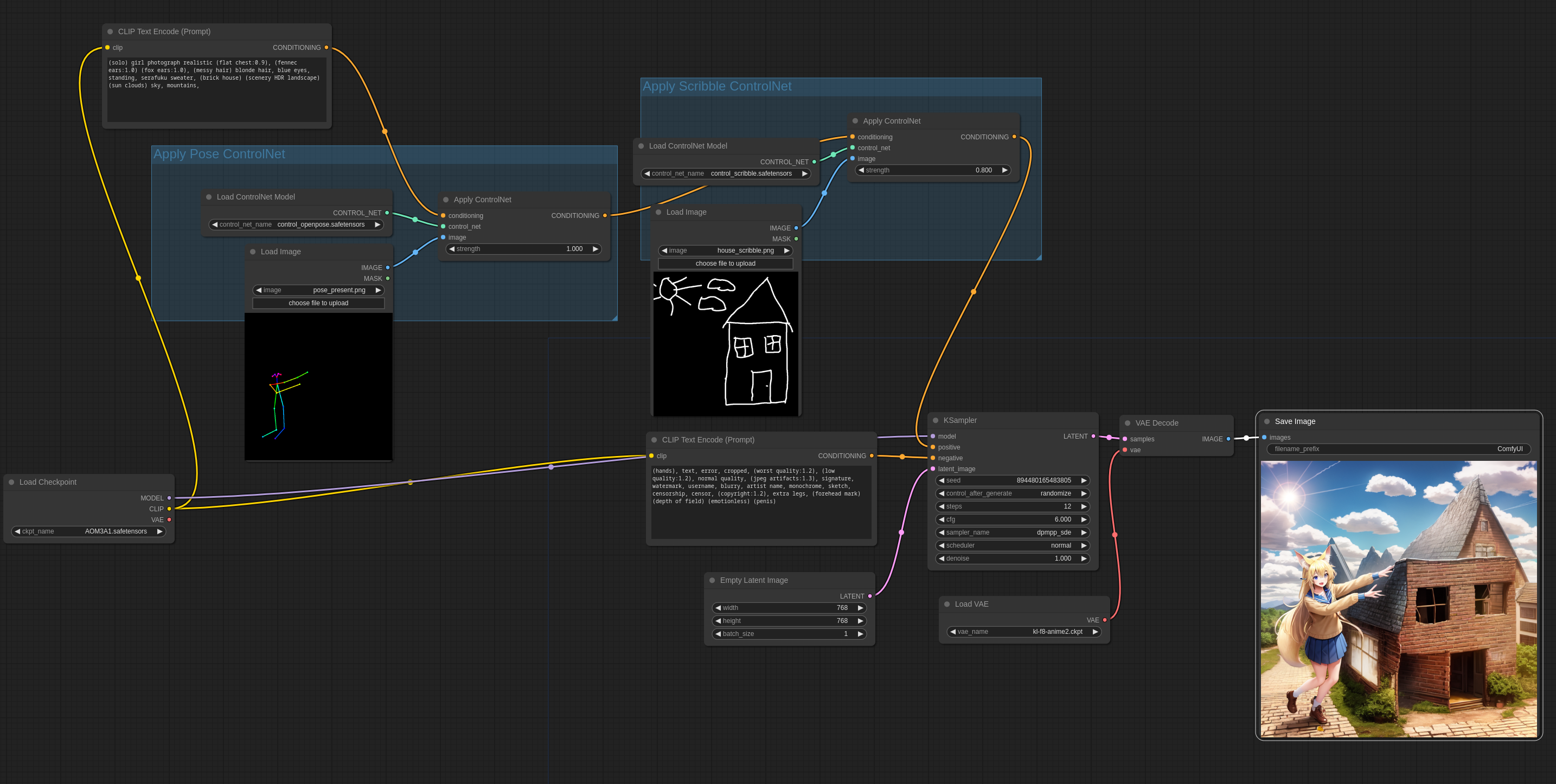

Mixing ControlNets

Multiple ControlNets and T2I-Adapters can be applied like this, with interesting results:
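One way to picture the mixing is as a chain of ControlNetApply nodes, where each node takes the conditioning produced by the previous one. The sketch below builds such a chain in API-format style; the helper, node ids, and strengths are assumptions for illustration, and the actual workflow embedded in the example image remains the reference.

```python
def chain_control_nodes(base_conditioning, controls, first_id=10):
    """Chain ControlNetApply nodes so each one builds on the previous output.

    base_conditioning: [node_id, output_index] link to the prompt conditioning.
    controls: list of (control_net_link, image_link, strength) tuples.
    Returns (nodes, final_conditioning_link).
    """
    nodes = {}
    conditioning = base_conditioning
    for offset, (control_net_link, image_link, strength) in enumerate(controls):
        node_id = str(first_id + offset)
        nodes[node_id] = {
            "class_type": "ControlNetApply",
            "inputs": {"conditioning": conditioning,
                       "control_net": control_net_link,
                       "image": image_link,
                       "strength": strength},
        }
        # The next node consumes this node's conditioning output.
        conditioning = [node_id, 0]
    return nodes, conditioning


# Two controls, e.g. a pose ControlNet and a depth T2I-Adapter (hypothetical ids).
mixed_nodes, final_conditioning = chain_control_nodes(
    ["2", 0],
    [(["4", 0], ["3", 0], 1.0), (["6", 0], ["5", 0], 0.8)],
)
```

The final conditioning link is what a sampler node would receive, so it carries the influence of every control in the chain.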

You can load this image in ComfyUI to get the full workflow.

Input images: