AIGC Latest News

Stay up to date with the latest AIGC news and updates.

OpenMOSS Releases MOVA - Open-Source Synchronized Video and Audio Generation Model

The OpenMOSS team releases MOVA (MOSS Video and Audio), an end-to-end foundation model that generates synchronized video and audio in a single inference pass, achieving precise lip-sync and environment-aware sound effects. The model weights, training code, and inference code are fully open-sourced.

Alibaba Tongyi Lab Releases Z-Image-Base - Non-Distilled High-Quality Image Generation Model

Alibaba Tongyi Lab releases Z-Image-Base, the non-distilled foundation model of the Z-Image series, preserving full generative potential and providing an ideal base for community fine-tuning and custom development

DeepSeek Releases DeepSeek-OCR-2 - Document Understanding Model with Visual Causal Flow

DeepSeek open-sources DeepSeek-OCR-2, introducing the new DeepEncoder V2 vision encoder that mimics human reading logic through visual causal flow mechanism, achieving 91.09% accuracy on OmniDocBench v1.5

Moonshot AI Releases Kimi K2.5 - 1T Parameter Native Multimodal Agent Model

Moonshot AI officially releases Kimi K2.5, a 1T parameter native multimodal agent model, continually pre-trained on 15 trillion mixed visual and text tokens, supporting image and video understanding with Agent Swarm autonomous collaboration mechanism

Alibaba Qwen Releases Qwen3-TTS - 97ms Ultra-Low Latency Voice Synthesis Model

Alibaba Qwen open-sources Qwen3-TTS series voice generation models, achieving 97ms ultra-low latency through Dual-Track modeling, supporting 3-second voice cloning and natural language voice design, covering 10 major languages

Microsoft Releases VibeVoice-ASR - Speech Recognition Model Supporting 60-Minute Long Audio Single-Pass Processing

Microsoft open-sources VibeVoice-ASR, a 9B parameter unified speech recognition model capable of processing up to 60 minutes of audio in a single pass, jointly completing recognition, speaker diarization, and timestamping in one inference process

NVIDIA Releases PersonaPlex-7B-v1 - Full-Duplex Voice Dialogue Model

NVIDIA open-sources PersonaPlex-7B-v1, a 7 billion parameter full-duplex speech-to-speech dialogue model based on Moshi architecture, supporting simultaneous listening and speaking, natural interruptions, and role customization with interruption response latency as low as 240 milliseconds

SVI 2.0 Pro Released - Infinite-Length Video Generation with Wan 2.2 Support

Stable Video Infinity 2.0 Pro has been officially released, adding support for the Wan 2.2 base model, providing HIGH and LOW versions of LoRA models for generating infinite-length video content in ComfyUI

Qwen-Image-Layered Released - Image Generation Model with Layer-Based Editing Support

Qwen-Image-Layered can decompose images into multiple RGBA layers, with each layer independently editable without affecting other content, supporting operations like recoloring, replacement, deletion, resizing, and repositioning

Microsoft Releases TRELLIS.2 - 4 Billion Parameter Image-to-3D Generation Model

Microsoft introduces TRELLIS.2, a 4 billion parameter large 3D generative model that converts images to high-quality 3D assets in seconds, with full PBR materials and complex topology support

Alibaba Cloud PAI Releases Z-Image-Turbo-Fun-Controlnet-Union - Multi-Condition ControlNet Model

Alibaba Cloud PAI team releases Z-Image-Turbo-Fun-Controlnet-Union, a ControlNet model supporting multiple control conditions including Canny, HED, Depth, Pose, and MLSD, trained at 1328 resolution

Alibaba AIDC-AI Releases Ovis-Image - 7B Text-to-Image Model Optimized for Text Rendering

Alibaba AIDC-AI team releases Ovis-Image, a 7B parameter text-to-image model focused on high-quality text rendering, achieving excellent results on multiple text rendering benchmarks while running efficiently on a single high-end GPU

Alibaba Tongyi Lab Releases Z-Image-Turbo - Efficient 6B Parameter Image Generation Model

Alibaba Tongyi Lab releases Z-Image-Turbo, an efficient 6B parameter image generation model that produces high-quality images in just 8 sampling steps and runs smoothly on consumer GPUs with 16GB VRAM

ByteDance Releases Sa2VA: First Unified Image-Video Understanding Model

ByteDance introduces the Sa2VA multimodal model, combining SAM2 and LLaVA technologies to achieve dense segmentation and visual question answering for both images and videos, attaining top performance on multiple benchmarks

Tencent Open Sources Hunyuan Image 3.0 - World's Largest Open-Source Text-to-Image Model

Tencent releases Hunyuan Image 3.0, the first open-source commercial-grade native multimodal image generation model with a total of 80B parameters, featuring world knowledge reasoning capabilities and supporting complex semantic understanding of prompts running to thousands of characters

Nunchaku Releases 4-Bit Lightning Qwen-Image-Edit-2509 Model

The Nunchaku team releases an optimized version of the 4-Bit Lightning Qwen-Image-Edit-2509 model, supporting fast 4- or 8-step inference and running smoothly with 8GB of VRAM

Alibaba Releases Wan-Animate Model - Unified Character Animation and Replacement Technology

Alibaba Tongyi Lab launches Wan-Animate, a unified character animation framework based on Wan2.2, supporting character animation generation and video character replacement, with open-sourced model weights and inference code

Comfy Cloud - Effortless ComfyUI Access in the Cloud

Comfy Cloud provides easy access to ComfyUI with powerful GPUs, popular models, and custom nodes. Join the private beta for free cloud-based ComfyUI usage.

Tencent HunyuanWorld Voyager: Generating 3D World Exploration Videos from a Single Image

Tencent Hunyuan team releases Voyager technology, capable of generating world-consistent 3D point cloud sequence videos from a single image and user-defined camera paths, supporting infinite world exploration and direct 3D reconstruction

ByteDance Releases USO: Unified Style and Subject-Driven Image Generation Model

ByteDance launches the USO model, capable of freely combining any subject with any style while maintaining subject consistency and achieving high-quality style transfer effects

Wan2.2-S2V: Audio-Driven Video Generation Model Released

Wan2.2-S2V is an AI video generation model that can convert static images and audio into videos, supporting dialogue, singing, and performance content creation needs.

ComfyUI Challenge #1: Join & Win $100

Join the first ComfyUI Weekly Challenge and win $100! Create a turnaround video of the cat man character using the provided depth map.

InfiniteTalk Open Source Release - Audio-Driven Video Generation with Unlimited Length Support

The MeiGen-AI team has open-sourced the InfiniteTalk model, enabling precise lip-sync and unlimited-length video generation. It supports both image-to-video and video-to-video conversion, marking a breakthrough in digital human technology.

ComfyUI Subgraph Feature Now Officially Released

ComfyUI officially releases the Subgraph feature, allowing users to package complex node combinations into single reusable subgraph nodes, greatly improving workflow modularity and manageability.

Qwen-Image Gets Native Support in ComfyUI

Qwen-Image is a 20B-parameter MMDiT image model focused on complex text rendering and precise editing; it is now available natively in ComfyUI. This brief summarizes key capabilities, license, and resources.

Tencent Hunyuan Team Open Sources MixGRPO Framework for Enhanced Human Preference Alignment Training Efficiency

Tencent Hunyuan team releases open-source MixGRPO framework, the first to integrate sliding-window mixed ODE-SDE sampling for GRPO, achieving up to 71% training speedup for human preference alignment in diffusion and flow models.

Black Forest Labs Releases FLUX.1 Krea [dev] Open Source Version with ComfyUI Native Support

A collaboration between Black Forest Labs and Krea officially releases the FLUX.1 Krea [dev] model, described as the best open-source FLUX model for text-to-image generation. ComfyUI has implemented native support, allowing users to experience the latest text-to-image generation technology.

Wan2.2 Open Source Version Released with ComfyUI Native Support on Day 0

The Wan team officially releases the open-source version of Wan2.2, featuring an innovative MoE architecture that brings significant quality improvements to video generation. ComfyUI has achieved native support, allowing users to directly experience the latest video generation technology.

ByteDance Open Sources Seed-X 7B: A Compact Translation Model Supporting 28 Languages

ByteDance has open-sourced the Seed-X 7B model, designed specifically for translation tasks. It supports translation between 28 languages and is suitable for integration in various scenarios such as AI image generation.

PUSA V1.0: Low-Cost, High-Performance Video Generation Model Released

PUSA V1.0 leverages innovative VTA technology to achieve high-quality, multi-task video generation with minimal data and cost, supporting image-to-video, keyframe generation, video extension, text-to-video, and more.

AMAP Releases FLUX-Text: A New Approach to Scene Text Editing

The AMAP team releases FLUX-Text, a new diffusion-based scene text editing method that supports multilingual, style-consistent, high-fidelity text editing.

OmniAvatar: Release of Efficient Audio-Driven Virtual Human Video Generation Model

OmniAvatar model is now open source, supporting audio-driven full-body virtual human video generation with natural movements and rich expressions, suitable for podcasts, interactions, dynamic scenes, and various applications.

Tongyi Lab Releases ThinkSound: A New Paradigm for Multimodal Audio Generation and Editing

Tongyi Lab open-sources ThinkSound, the first Any2Audio unified audio generation and editing framework supporting chain reasoning, enabling multimodal inputs including video, text, and audio, with high-fidelity, strong synchronization, and interactive editing capabilities.

XVerse Released: A High-Consistency Image Generation Model with Multi-Subject Identity and Semantic Attribute Control

ByteDance open-sources XVerse model, enabling precise independent control of multiple subject identities and semantic attributes (like pose, style, lighting), enhancing AI image generation's personalization and complex scene capabilities.

Flux.1 Kontext Dev Open Source Version Released with Native ComfyUI Support

Black Forest Labs releases Flux.1 Kontext Dev open source version, a 12B parameter diffusion transformer model, with immediate native support in ComfyUI

OmniGen2 Released: Unified Image Understanding and Generation Model with Natural Language Instructions

VectorSpaceLab releases OmniGen2, a powerful multimodal generation model that supports precise local image editing through natural language instructions, including object removal and replacement, style transfer, background processing, and more

NVIDIA Releases UniRelight - A Diffusion-Based Universal Relighting Technology

NVIDIA research team introduces UniRelight, a diffusion-based universal relighting technology that achieves high-quality relighting effects through a single image or video

National University of Singapore Releases OmniConsistency - Achieving Image Stylization Consistency at Low Cost

The NUS team introduces the OmniConsistency project, achieving GPT-4o-level image stylization consistency with only 2,600 image pairs and 500 hours of GPU training; ComfyUI nodes are now available

Black Forest Labs Releases FLUX.1 Kontext: Context-Aware Image Editing Model Suite

Black Forest Labs launches FLUX.1 Kontext series models, the first to achieve context-aware editing based on text and image inputs, supporting character consistency preservation and localized editing features

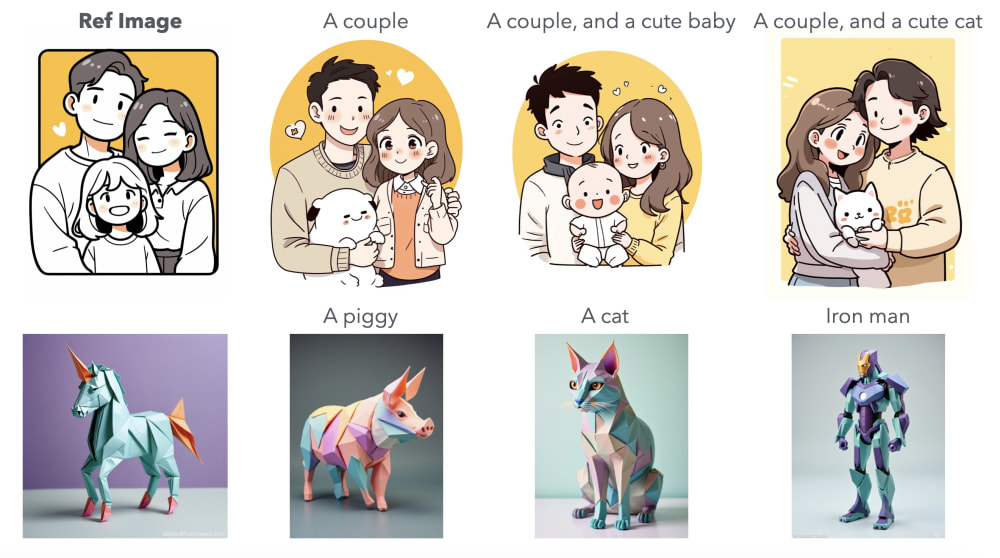

ID-Patch: A New Method for Multi-Identity Personalized Group Photo Generation

ByteDance and Michigan State University jointly propose ID-Patch method, achieving multi-identity personalized image generation with improved identity preservation and generation efficiency, suitable for group photos, advertisements and other multi-character scenarios.

Tencent Open Sources Speech-Driven Digital Human Model HunyuanVideo-Avatar: Generate Natural Digital Human Videos from a Single Image and Audio

Tencent releases HunyuanVideo-Avatar, enabling high-fidelity, emotion-controllable digital human videos from images and audio, suitable for short videos, e-commerce ads, and more.

IndexTTS 1.5 Release: High-Quality Chinese and English Text-to-Speech Model

IndexTTS releases version 1.5 with significantly improved model stability and English performance, supporting pinyin pronunciation correction and punctuation-controlled pauses, outperforming mainstream TTS systems in multiple tests

StepFun Open Sources Step1X-3D - High-Fidelity 3D Asset Generation Framework

StepFun releases Step1X-3D open source framework that generates high-quality 3D geometry and textures from single images, including 2M high-quality dataset, complete training code and model weights

Update Notice: Custom Nodes Affecting ComfyUI Frontend

After recent ComfyUI updates, some custom nodes may cause frontend issues. This article summarizes affected plugins and solutions, recommending timely updates or uninstallation of relevant plugins.

Step1X-3D Releases High-Quality 3D Asset Generation and Open Source Plan

The Step1X-3D team has released a high-fidelity 3D asset generation solution, making models, datasets, and code open source, supporting various 3D asset and texture generation tasks.

Tencent Releases HunyuanCustom Multimodal Video Generation System

Tencent introduces HunyuanCustom, a multimodal video customization framework that supports text, image, audio, and video conditions, enabling highly consistent video generation across multiple scenarios

Insert Anything: Open-Source Framework for Seamless Image Insertion

Insert Anything is an open-source unified framework that can seamlessly insert elements such as people, objects, and clothing from reference images into target scenes, supporting various application scenarios

Step1X-Edit: Open Source AI Image Editing Framework

Step1X-Edit is a powerful open-source image editing framework that supports image editing via natural language instructions, offering capabilities similar to GPT-4o and Gemini2 Flash

Flex.2-preview: Open Source AI Image Generation Model Released

Flex.2-preview is an open-source text-to-image diffusion model with universal control and built-in inpainting capabilities, providing creators with more powerful image generation tools

Sand AI Releases MAGI-1: Autoregressive Video Generation at Scale

Sand AI has open-sourced MAGI-1, an autoregressive model that generates videos chunk by chunk, offering 24B and 4.5B parameter versions and supporting multiple video generation modes

SkyReels-V2 Released: Open-Source Model Supporting Infinite-Length Video Generation

SkyworkAI releases new open-source video generation model SkyReels-V2, supporting infinite-length videos with both text-to-video and image-to-video capabilities

Nari Labs Releases Dia 1.6B Text-to-Dialogue Speech Model

Nari Labs introduces open-source text-to-speech model Dia 1.6B, capable of generating multi-character dialogues directly from text with emotion and non-verbal communication

Kunlun Wanwei Releases SkyReels-V2 Infinite-Length Film Generative Model

Kunlun Wanwei's SkyReels team releases and open-sources SkyReels-V2, the world's first infinite-length film generative model using Diffusion Forcing framework, capable of producing high-quality, long-duration video content

Shakker Labs Releases FLUX.1-dev-ControlNet-Union-Pro-2.0

Shakker Labs launches the new FLUX.1-dev-ControlNet-Union-Pro-2.0 model with optimized control effects, support for multiple control modes, and smaller model size

Tencent Hunyuan and InstantX Team Release InstantCharacter Open Source Project

InstantCharacter is an innovative tuning-free method that enables character-consistent generation from a single image, supporting various downstream tasks

FramePack: Efficient Next-Frame Prediction Model for Video Generation

Developed by Lvmin Zhang, FramePack technology compresses input frame context, making video generation workload invariant to video length, allowing processing of numerous frames even on laptop GPUs

ByteDance Releases Seaweed-7B: A Cost-Effective Video Generation Foundation Model

Seaweed-7B achieves performance surpassing 14B parameter models with only 7 billion parameters, at just one-third the training cost of industry standards, bringing new possibilities to the video generation field

FloED: Open Source Efficient Video Inpainting with Optical Flow-Guided Diffusion

A new video inpainting framework FloED has released its code and weights, achieving higher video coherence and computational efficiency through optical flow guidance

PixelFlow: Generative Models Working Directly in Pixel Space

PixelFlow innovatively operates in raw pixel space, simplifying the image generation process without requiring pre-trained variational autoencoders, enabling end-to-end trainable models

VAST-AI Releases HoloPart: Generative 3D Part Amodal Segmentation Technology

HoloPart can decompose 3D models into complete, semantically meaningful parts, solving editing challenges in 3D content creation

VAST-AI and Tsinghua University Open Source UniRig: A Framework for Automatic Skeleton Rigging of All 3D Models

UniRig uses autoregressive models to generate high-quality skeleton structures and skinning weights for diverse 3D models, greatly simplifying the animation workflow

OmniSVG: Fudan University and StepFun Launch Unified Vector Graphics Generation Model

OmniSVG is a new unified multimodal SVG generation model capable of producing highly complex, editable vector graphics from various inputs including text, images, or character references

TTT-Video: Technology for Long Video Generation

Researchers develop TTT-Video model using Test-Time Training technology based on CogVideoX 5B, capable of generating coherent videos up to 63 seconds long

ByteDance Releases UNO: Extending Generation Capabilities from Less to More

ByteDance Creative Intelligence team releases UNO model, unlocking greater controllability through in-context generation, achieving high-quality image generation from single to multiple subjects

EasyControl: A New Framework for Efficient and Flexible Control of Diffusion Transformer

Tiamat AI team releases EasyControl framework, adding conditional control capabilities to DiT models, now supported in ComfyUI via the ComfyUI-easycontrol plugin

HiDream-I1 Open Source Release - Next Generation Image Generation Model

HiDream.ai releases HiDream-I1, a new open-source text-to-image model with 17B parameters that outperforms existing open-source models in multiple benchmarks, supporting high-quality image generation in various styles

Hi3DGen: A New Framework for High-Fidelity 3D Geometry Generation through Normal Bridging

Stable-X team introduces Hi3DGen, an innovative framework for generating high-fidelity 3D models from images, addressing the lack of geometric details in existing methods through normal bridging technology

VAST AI Research Open Sources TripoSF: Redefining New Heights in 3D Generation Technology

TripoSF, based on the innovative SparseFlex representation, supports 3D model generation at resolutions up to 1024³, capable of handling open surfaces and complex internal structures, significantly improving 3D asset quality

Kunlun Wanwei Open-Sources SkyReels-A2: Commercial-Grade Video Generation Framework

Kunlun Wanwei releases the world's first commercial-grade controllable video generation framework SkyReels-A2, enabling multi-element video generation through dual-branch architecture, bringing new possibilities for e-commerce, film production and more

Alibaba's Tongyi Lab Releases VACE: Video Creation and Editing Enters Unified Era

Alibaba Group's Tongyi Lab introduces VACE, the world's first unified framework for diverse video tasks, covering text-to-video generation, video editing, and complex task combinations

StarVector: A Multimodal Model for SVG Code Generation

The StarVector project implements automatic generation of SVG vector graphics code from images and text, providing new creative tools for designers and developers.

ByteDance Releases InfiniteYou: Flexible Photo Recrafting While Preserving User Identity

ByteDance introduces InfiniteYou (InfU), an innovative framework based on Diffusion Transformers that enables flexible photo recrafting while preserving user identity, addressing limitations in existing methods regarding identity similarity, text-image alignment, and generation quality

Stability AI Releases Stable Virtual Camera: Technology to Transform 2D Photos into 3D Videos

Stability AI launches new AI model Stable Virtual Camera, capable of converting ordinary photos into 3D videos with authentic depth and perspective effects, providing creators with intuitive camera control

StdGEN: Semantic-Decomposed 3D Character Generation from Single Images

Tsinghua University and Tencent AI Lab jointly introduce StdGEN, an innovative pipeline that generates high-quality semantically-decomposed 3D characters from single images, enabling separation of body, clothing, and hair

Tencent Releases Hunyuan3D 2.0 - Innovative 3D Asset Generation System

Tencent launches Hunyuan3D 2.0 system with a two-stage process for generating high-quality 3D models, featuring multiple open-source model series that support creating high-resolution 3D assets from text and images

Kuaishou Launches ReCamMaster Monocular Video Reframing Technology

Kuaishou Technology has launched ReCamMaster, a generative video technology that allows users to create new camera perspectives and motion paths from a single video.

Open-Sora 2.0 Released: Commercial-Grade Video Generation at Low Cost

LuChen Technology releases Open-Sora 2.0 open-source video generation model, achieving performance close to top commercial models with just $200,000 in training costs

Ali Tongyi Lab Releases VACE: All-in-One Video Creation and Editing Model

Ali Tongyi Lab launches multifunctional video creation and editing model VACE, integrating various video processing tasks into a single framework to lower the barrier of video creation

Microsoft Releases ART Multi-layer Transparent Image Generation Technology

Microsoft Research introduces intelligent layered generation based on global text prompts, supporting creation of transparent images with 50+ independent layers

Tencent Open-Sources HunyuanVideo-I2V Image-to-Video Model

Tencent's Hunyuan team releases open-source model for generating 5-second videos from single images, featuring smart motion generation and custom effects

Alibaba Open-Sources ViDoRAG Intelligent Document Analysis Tool

Alibaba introduces a document analysis system capable of understanding both text and images, improving processing efficiency for complex documents by over 10%

THUDM Open-Sources CogView4 - Native Chinese-Supported DiT Text-to-Image Model

THUDM releases CogView4 open-source image generation model with native Chinese support, leading in multiple benchmark tests

Sesame Unveils CSM Voice Model for Natural Conversations

Sesame Research introduces dual-Transformer conversational voice model CSM, achieving human-like interaction with open-source core architecture

Alibaba's Wan2.1 Video Generation Model Officially Open-Sourced

Alibaba has officially open-sourced its latest video generation model, Wan2.1, which can run with only 8GB of video memory, supporting high-definition video generation, dynamic subtitles, and multi-language dubbing, surpassing models like Sora with a total score of 86.22% on the VBench leaderboard

Alibaba Releases ComfyUI Copilot: AI-Driven Intelligent Workflow Assistant

Alibaba International Digital Commerce Group (AIDC-AI) releases the ComfyUI Copilot plugin, simplifying the user experience of ComfyUI through natural language interaction and AI-driven functionality, supporting Chinese interaction, and offering intelligent node recommendations and other features

Alibaba's WanX 2.1 Video Generation Model to be Open-Sourced

Alibaba has announced that its latest video generation model, WanX 2.1, will be open-sourced in the second quarter of 2025, supporting high-definition video generation, dynamic subtitles, and multi-language dubbing, ranking first on the VBench leaderboard with a total score of 84.7%

Google Releases PaliGemma 2 Mix: An Open-Source Visual Language Model Supporting Multiple Tasks

Google introduces the new PaliGemma 2 mix model, supporting various visual tasks including image description, OCR, object detection, and providing 3B, 10B, and 28B scale versions

Skywork Open-Sources SkyReels-V1: A Video Generation Model Focused on AI Short Drama Creation

Skywork has open-sourced its latest video generation model, SkyReels-V1, which supports text-to-video and image-to-video generation, featuring cinematic lighting effects and natural motion representation, and is now available for commercial use.

Light-A-Video - A Video Relighting Technology Without Training

Researchers have proposed a new video relighting method, Light-A-Video, which achieves temporally smooth video relighting effects through Consistent Light Attention (CLA) and Progressive Light Fusion (PLF).

StepFun Releases Step-Video-T2V: A 30 Billion Parameter Text-to-Video Model

StepFun has released the open-source text-to-video model Step-Video-T2V, which has 30 billion parameters, supports the generation of high-quality videos up to 204 frames, and provides an online experience platform

Kuaishou Introduces CineMaster: Breakthrough in 3D-Aware Video Generation

Kuaishou officially releases CineMaster text-to-video generation framework, enabling high-quality video content creation through 3D-aware technology

Alibaba Open Sources InspireMusic: An Innovative Framework for Music, Song and Audio Generation

Alibaba's latest open-source project InspireMusic, a unified audio generation framework based on FunAudioLLM, supporting music creation, song generation and various audio synthesis tasks.

Alibaba Open Sources ACE++: Zero-Training Character-Consistent Image Generation

Alibaba Research Institute open sources image generation tool ACE++, supporting character-consistent image generation from single input through context-aware content filling technology, offering online experience and three specialized models.

ByteDance Releases OmniHuman: Next-Generation Human Animation Framework

ByteDance research team releases OmniHuman-1 human animation framework, capable of generating high-quality human video animations from a single image and motion signals.

DeepSeek Open-Sources Janus-Pro-7B: Multimodal AI Model

TurboDiffusion Releases Video Generation Acceleration Framework

Tsinghua University team open sources TurboDiffusion, a video generation acceleration framework that achieves 100-205x end-to-end diffusion generation acceleration on a single RTX 5090 while maintaining video quality

Tencent Releases Hunyuan3D 2.0: Open-Source High-Quality 3D Generation Model and End-to-End Creation Engine

Tencent releases Hunyuan3D 2.0, open-sourcing the complete DiT model and launching a one-stop 3D creation engine with innovative features including skeletal animation and sketch-to-3D, revolutionizing metaverse and game content creation

ComfyUI Project Two-Year Anniversary

ComfyUI celebrates its second birthday, evolving from a personal project into the world's most popular generative AI visual tool. Happy birthday, ComfyUI!

NVIDIA Open Sources Sana - An AI Model for Efficient 4K Image Generation

NVIDIA releases new Sana model capable of quickly generating images up to 4K resolution on consumer laptop GPUs, with ComfyUI integration support

ByteDance Open Sources LatentSync - High-Precision Lip Sync Technology Based on Diffusion Model

ByteDance releases open-source lip sync tool LatentSync, based on audio-conditioned latent space diffusion model, enabling precise lip synchronization for both real people and animated characters while solving frame jittering issues common in traditional methods

VMix: ByteDance Introduces Innovative Aesthetic Enhancement Technology for Text-to-Image Diffusion Models

ByteDance and University of Science and Technology of China jointly launch VMix adapter, enhancing AI-generated image aesthetics through cross-attention mixing control technology, seamlessly integrating with existing models without retraining

Tencent Open Sources StereoCrafter: One-Click 2D to 3D Video Conversion

StereoCrafter, jointly developed by Tencent AI Lab and ARC Lab, is now open source. It can convert any 2D video into high-quality stereoscopic 3D video, supporting various 3D display devices including Apple Vision Pro

LuminaBrush: AI Lighting Editor Released by ControlNet Creator

lllyasviel, creator of ControlNet and IC-Light, releases LuminaBrush - a new AI tool that enables precise lighting control through a two-stage framework with intuitive brush interactions

Genesis: Breakthrough Universal Physics Engine and Generative AI Platform Released

Genesis project releases a new universal physics engine and generative AI platform, integrating physics simulation, robot control and generative AI capabilities to provide comprehensive solutions for robotics and physical AI applications

Odyssey Releases Explorer - Breakthrough Generative World Model

Odyssey launches Explorer, the first generative world model that can convert 2D images into complete 3D worlds, supporting dynamic effects and mainstream creative tool editing, bringing revolutionary changes to film, gaming and other fields

Cryptomining Malware Found in ComfyUI Impact-Pack Plugin - Urgent Action Required

The popular ComfyUI plugin Impact-Pack was found to pull in cryptomining malware through the Ultralytics package, affecting numerous users. This article details the incident and the recommended fixes.

Luma Launches Photon - A Revolutionary AI Image Generation Model with Exceptional Value

Luma introduces revolutionary Photon and Photon Flash image generation models, redefining AI creation with outstanding value and superior image quality

Tencent Open Sources HunyuanVideo - A New Era in Video Generation

Tencent officially open sources HunyuanVideo, the industry's largest video generation model with 13 billion parameters, achieving leading performance in video quality and motion stability

Stability AI Releases Stable Diffusion 3.5 Large ControlNet Models

Stability AI launches three new ControlNet models for Stable Diffusion 3.5 Large, including Blur, Canny, and Depth, providing more precise control capabilities for image generation

NVIDIA Releases Edify 3D - A Revolutionary 3D Asset Generation Technology

NVIDIA has launched the new Edify 3D technology, capable of generating high-quality 3D assets in just two minutes, including detailed geometry, clear topology, high-resolution textures, and PBR materials.

Lightricks Releases Real-Time Video Generation Model LTX-Video

Lightricks launches the LTX-Video real-time video generation model based on DiT, supporting real-time generation of high-quality videos, and has been open-sourced on GitHub and Hugging Face.

InstantX Releases FLUX.1-dev IP-Adapter Model

InstantX team has released the IP-Adapter model based on FLUX.1-dev, bringing more powerful image reference capabilities to the FLUX model

FLUX Official Tools Suite Released

Black Forest Labs releases a suite of official FLUX tools, including inpainting (local redraw), ControlNet, and image style conversion

IC-Light V2 Release: Enhanced Image Editing Capabilities

IC-Light V2 is a Flux-based image editing model that supports various stylized image processing, including oil paintings and anime styles. This article details the new features and applications of IC-Light V2.

Stable Diffusion 3.5 Launches: A New Era in AI Image Generation

Stability AI releases Stable Diffusion 3.5, offering multiple powerful model variants, supporting commercial use, and leading the market in image quality and prompt adherence.

ComfyUI V1 Released: Cross-Platform Desktop App with One-Click Installation

ComfyUI has released Version 1, offering a cross-platform desktop application with one-click installation, a revamped user interface, and numerous feature improvements, significantly enhancing the user experience.

Kuaishou and PKU Jointly Release Pyramidal Flow Matching Video Generation Model

Kuaishou Technology and Peking University jointly developed the Pyramidal Flow Matching model, an autoregressive video generation technology based on flow matching, capable of producing high-quality, long-duration video content.

Jasperai Releases Flux.1-dev ControlNet Model Series

Jasperai introduces a series of ControlNet models for Flux.1-dev, including surface normals, depth maps, and super-resolution models, providing more precise control for AI image generation.

ComfyUI-PuLID-Flux: Implementing PuLID-Flux in ComfyUI

ComfyUI-PuLID-Flux is an open-source project that integrates PuLID-Flux into ComfyUI, offering powerful image generation and editing capabilities.

Meta Introduces Movie Gen: AI Models for Video and Audio Generation

Meta introduces the Movie Gen series of models, including video generation, audio generation, and personalized video editing capabilities, opening new frontiers in AI content creation.

ostris Releases OpenFLUX.1: A Commercially Usable De-distilled Version of FLUX.1-schnell

ostris has released OpenFLUX.1, a de-distilled version of the FLUX.1-schnell model that can be fine-tuned, uses normal CFG values, and retains commercial licensing.

Black Forest Labs Launches FLUX1.1 [pro]

Black Forest Labs introduces FLUX1.1 [pro], a generative AI model featuring ultra-fast generation, superior image quality, and 2K ultra-high resolution generation, opening unprecedented opportunities for creators, developers, and businesses.

THUDM Open Sources New Image Generation Models: CogView3 and CogView-3Plus

THUDM has open-sourced their latest image generation models CogView3 and CogView-3Plus-3B, showcasing exceptional performance and efficiency. CogView3 utilizes cascaded diffusion technology, while CogView-3Plus-3B is a lightweight model based on the DiT architecture, bringing significant breakthroughs to the field of text-to-image generation.