Hi3DGen: A New Framework for High-Fidelity 3D Geometry Generation through Normal Bridging

The Stable-X research team has recently released Hi3DGen, a framework that generates high-fidelity 3D geometry from a single image by using normal maps as an intermediate bridge. Compared with existing methods, Hi3DGen produces richer and more precise geometric detail, and its authors present it as the new state of the art (SOTA) in image-to-3D generation.

Online Demo

You can try Hi3DGen yourself through the interactive online demo listed under Related Resources.

Why Hi3DGen?

Despite significant recent progress in generating 3D models from 2D images, existing methods still struggle to produce fine geometric detail. Three factors are mainly responsible:

- Scarcity of high-quality 3D training data: This limits the model’s ability to learn detailed geometric features

- Domain gap between training and testing: Synthetic rendered images differ substantially in style from the images seen in real applications

- Interference from lighting, shadows, and textures: These elements of an RGB image make it harder to extract geometric information

These limitations make it difficult for existing methods to accurately reproduce fine-grained geometric structures from input images, affecting the realism and practicality of generated models.
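The third factor can be made concrete with a small sketch. It is not taken from the Hi3DGen paper; it uses the standard Lambertian diffuse model (intensity = albedo × cosine of the angle between surface normal and light direction), and the function names and numbers are illustrative assumptions. It shows that two differently oriented surfaces can produce the same pixel value, and that the same surface changes brightness when the light moves.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, light_dir, albedo):
    """Diffuse pixel intensity: albedo * max(0, n . l)."""
    n = normalize(normal)
    l = normalize(light_dir)
    return albedo * max(0.0, sum(a * b for a, b in zip(n, l)))

flat = (0.0, 0.0, 1.0)     # surface facing the camera
tilted = (1.0, 0.0, 1.0)   # surface tilted 45 degrees toward +x
frontal_light = (0.0, 0.0, 1.0)
side_light = (1.0, 0.0, 1.0)

# Different geometry, same pixel: a brighter material on the tilted
# surface exactly compensates for the weaker illumination.
flat_dark = lambert(flat, frontal_light, albedo=0.5)
tilted_bright = lambert(tilted, frontal_light, albedo=0.5 * math.sqrt(2))

# Same geometry, different pixel: only the light direction changed.
relit = lambert(flat, side_light, albedo=0.5)

print(flat_dark, tilted_bright, relit)
```

Intensity alone therefore does not determine surface orientation, which is one reason a representation that records orientation directly is attractive as an intermediate step.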

Technical Innovations of Hi3DGen

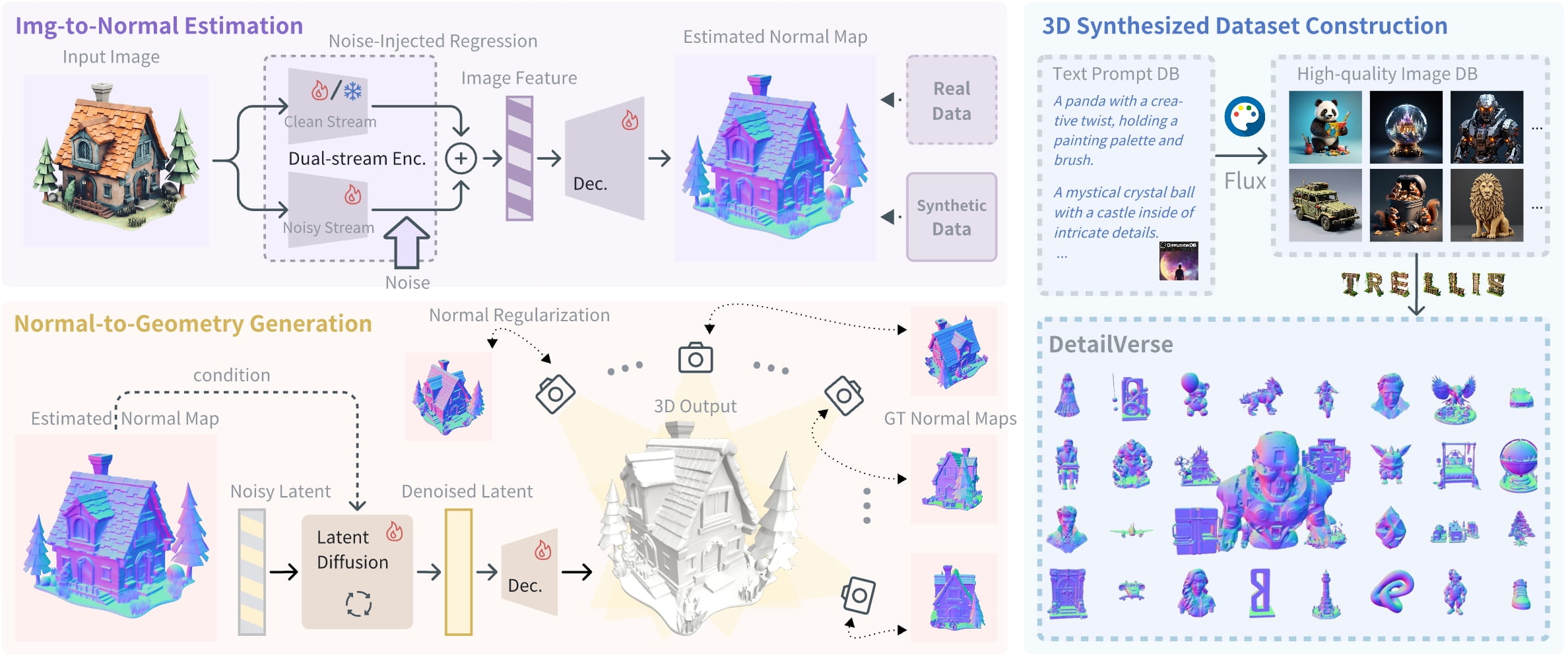

Hi3DGen addresses these problems with a framework built from three key components:

- Image-to-normal estimator: Decouples low- and high-frequency image patterns through noise injection and dual-stream training, yielding generalizable, stable, and sharp normal estimation

- Normal-to-geometry generator: Employs normal-regularized latent diffusion learning to enhance the fidelity of 3D geometry generation

- Synthetic dataset construction pipeline: Builds DetailVerse, a high-quality 3D dataset rich in geometric detail, to support model training

This “bridging” architecture splits image-to-geometry generation into two stages. By using 2.5D normal maps as an intermediate representation, it avoids the difficulty of mapping directly from RGB to 3D geometry.
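For readers unfamiliar with normal maps, the following sketch shows what such a map encodes. It is not Hi3DGen's estimator, which predicts normals from an RGB image with a learned model. Instead it assumes a known height field, derives per-pixel normals by finite differences, and packs them into RGB using the common convention of mapping each component from [-1, 1] to [0, 255]. All names here are hypothetical.

```python
import math

def height_to_normals(height):
    """Per-pixel unit normals of a height field z = h(x, y).

    Uses central differences inside the grid and one-sided
    differences at the borders.
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dzdx = (height[y][x1] - height[y][x0]) / (x1 - x0) if x1 > x0 else 0.0
            dzdy = (height[y1][x] - height[y0][x]) / (y1 - y0) if y1 > y0 else 0.0
            # The normal of z = h(x, y) is proportional to (-dh/dx, -dh/dy, 1).
            nx, ny, nz = -dzdx, -dzdy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals

def encode_rgb(normal):
    """Map a unit normal from [-1, 1] per component to 8-bit RGB."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normal)

flat = [[0.0] * 3 for _ in range(3)]                     # constant height
ramp = [[float(x) for x in range(3)] for _ in range(3)]  # height rises with x

flat_normals = height_to_normals(flat)
ramp_normals = height_to_normals(ramp)
flat_rgb = encode_rgb(flat_normals[1][1])  # flat areas: the familiar bluish tone
ramp_rgb = encode_rgb(ramp_normals[1][1])  # slope in x shifts the red channel

print(flat_rgb, ramp_rgb)
```

Each pixel stores only surface orientation as seen from one viewpoint, hence "2.5D": the map carries no lighting or material information, but it is also not a full 3D shape.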

Performance Evaluation

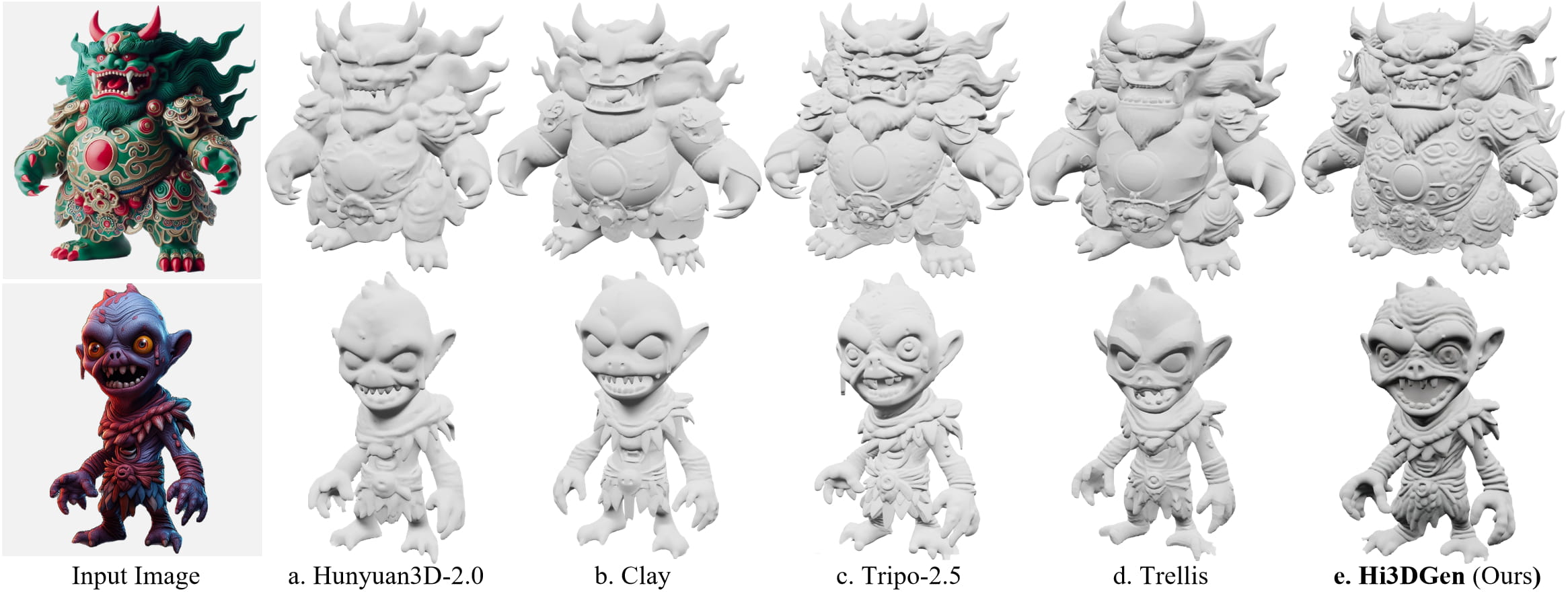

Through comparative experiments with multiple state-of-the-art image-to-3D generation methods (including CraftsMan-1.5, Hunyuan 3D-2.0, Clay, Tripo-2.5, Trellis, and Dora), Hi3DGen demonstrates significant advantages in fidelity and detail reproduction:

- More accurate shape contours and proportions

- Richer surface textures and geometric details

- Fewer model defects and holes

- Higher consistency with input images

In a user study with 50 regular users and 10 professional 3D artists, Hi3DGen’s generation quality received the highest ratings, indicating that it holds up in both amateur and professional use.

Application Scenarios

Hi3DGen’s technological breakthrough brings new possibilities to multiple fields:

- Games and film production: Rapidly create high-quality 3D assets, reducing production costs

- Virtual and augmented reality: Generate more realistic virtual objects

- E-commerce: Create accurate 3D models of products, enhancing online shopping experiences

- 3D printing: Generate high-precision 3D models directly from photos

- Cultural heritage preservation: Reconstruct 3D forms of precious artifacts from historical images

Quick Start Guide

- Visit the Hi3DGen online demo

- Upload one or more test images

- Click the “Generate Shape” button and wait for generation to complete

- Use the “Export Mesh” function to export 3D assets in different formats
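The article does not list which formats the demo exports, so the sketch below is only an illustration of what an exported mesh file contains. It writes a triangle mesh as Wavefront OBJ text, a widely supported plain-text format; the `mesh_to_obj` helper and the tetrahedron data are hypothetical and unrelated to Hi3DGen's own exporter.

```python
def mesh_to_obj(vertices, faces):
    """Serialize a triangle mesh to Wavefront OBJ text.

    vertices: list of (x, y, z) tuples
    faces:    list of (i, j, k) tuples of 0-based vertex indices
    """
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    # OBJ face indices are 1-based, so shift each index by one.
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"

# A tetrahedron: four vertices, four triangular faces.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]

obj_text = mesh_to_obj(vertices, faces)
print(obj_text)
```

Saving `obj_text` to a file with an `.obj` extension gives something most 3D viewers and modeling tools can open.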

Open Source Plan

The complete Hi3DGen code is scheduled to be open-sourced on April 10, 2025, at which point researchers and developers will be able to access the full implementation and training scripts.

Related Resources

- Project Homepage

- Online Demo

- GitHub Repository (will be available on April 10, 2025)

- Research Paper

The release of Hi3DGen marks an important milestone for image-to-3D generation, and its normal bridging method opens up new possibilities for high-fidelity 3D content creation. With the complete code about to be open-sourced, we look forward to seeing new applications and further research built on it.