ByteDance Releases UNO: Extending Generation Capabilities from Less to More

ByteDance Creative Intelligence team recently released a universal image generation framework called UNO, which is built on the core concept of “Less-to-More Generalization” and unlocks greater controllability through in-context generation, achieving high-quality image generation from single to multiple subjects.

The UNO framework provides content creators with more flexible and powerful tools, enabling precise control in complex scenes while maintaining the characteristics of multiple objects, making AI-generated images more aligned with creators’ intentions.

Key Challenges Addressed

Traditional AI image generation models face two major challenges when handling scenes with multiple specific objects:

- Data Scalability Issue: Expanding from single-subject datasets to multi-subject datasets is particularly difficult, as high-quality, multi-angle, subject-consistent paired data is hard to acquire at scale

- Subject Extensibility Issue: Existing methods mainly focus on single-subject generation and struggle to adapt to the complex requirements of multi-subject scenarios

UNO resolves these issues through unique approaches, achieving more consistent and controllable image generation results.

Technical Principles Explained

UNO framework introduces two key technical innovations:

-

Progressive Cross-modal Alignment: A two-stage training strategy

- First Stage: Fine-tuning a pretrained T2I model using in-context generated single-subject data, transforming it into an S2I (Subject-to-Image) model

- Second Stage: Further training with generated multi-subject data pairs to enhance the model’s capability in handling complex scenes

-

Universal Rotary Position Embedding (UnoPE): A special position encoding technique that effectively resolves attribute confusion issues when extending visual subject control, enabling the model to accurately distinguish and maintain the characteristics of multiple subjects

High-Consistency Data Synthesis Pipeline

One of UNO’s major innovations is its high-consistency data synthesis pipeline:

- Leveraging the Inherent In-Context Generation Capabilities of Diffusion Transformers: Fully utilizing the potential of current diffusion models

- Generating High-Consistency Multi-Subject Paired Data: Ensuring training data quality and consistency through specific data generation strategies

- Iterative Training Process: Progressively evolving from text-to-image models to complex models handling multiple image conditions

This approach not only solves the challenge of data acquisition but also improves the quality and consistency of generation results.

Practical Application Scenarios

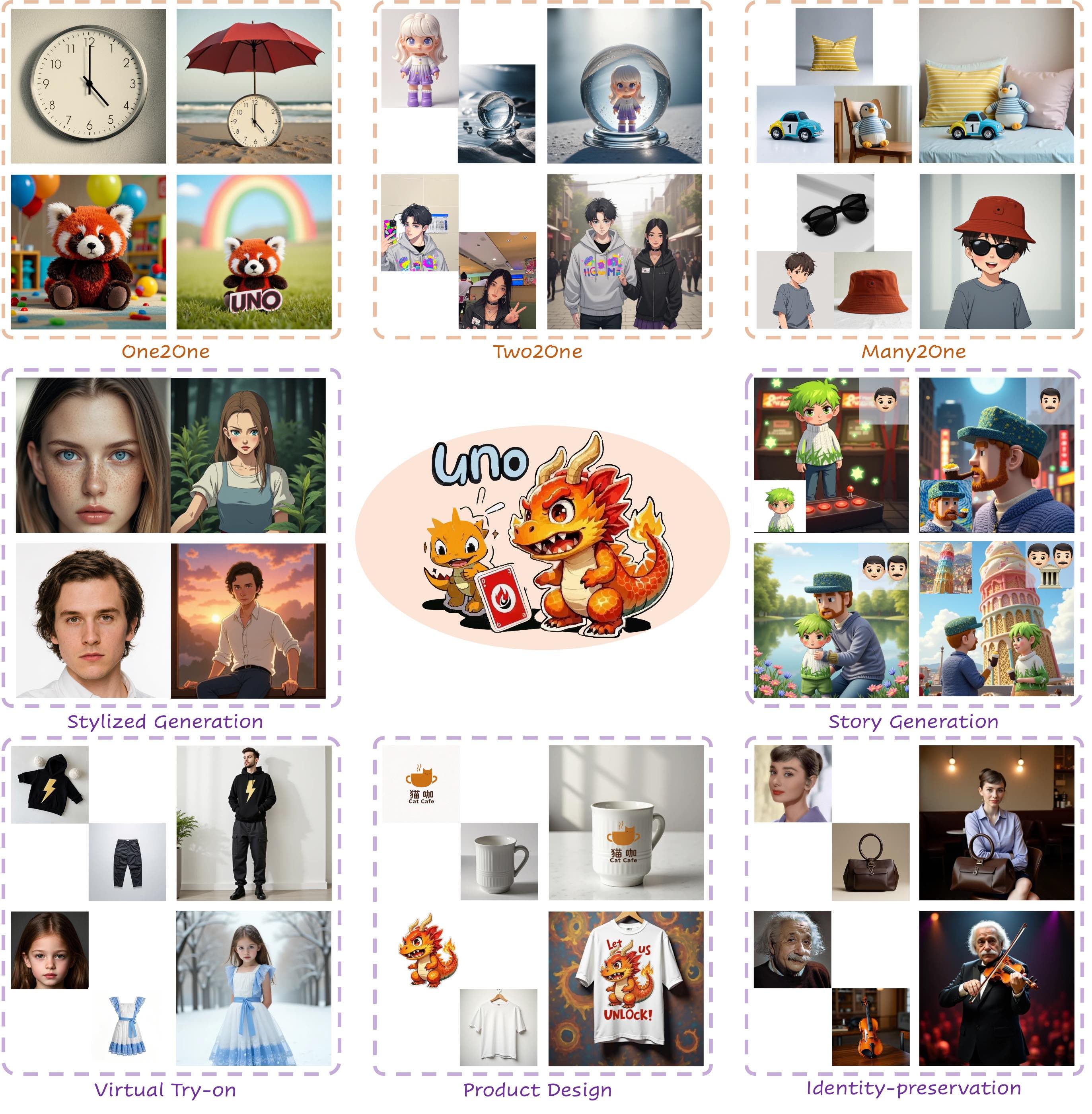

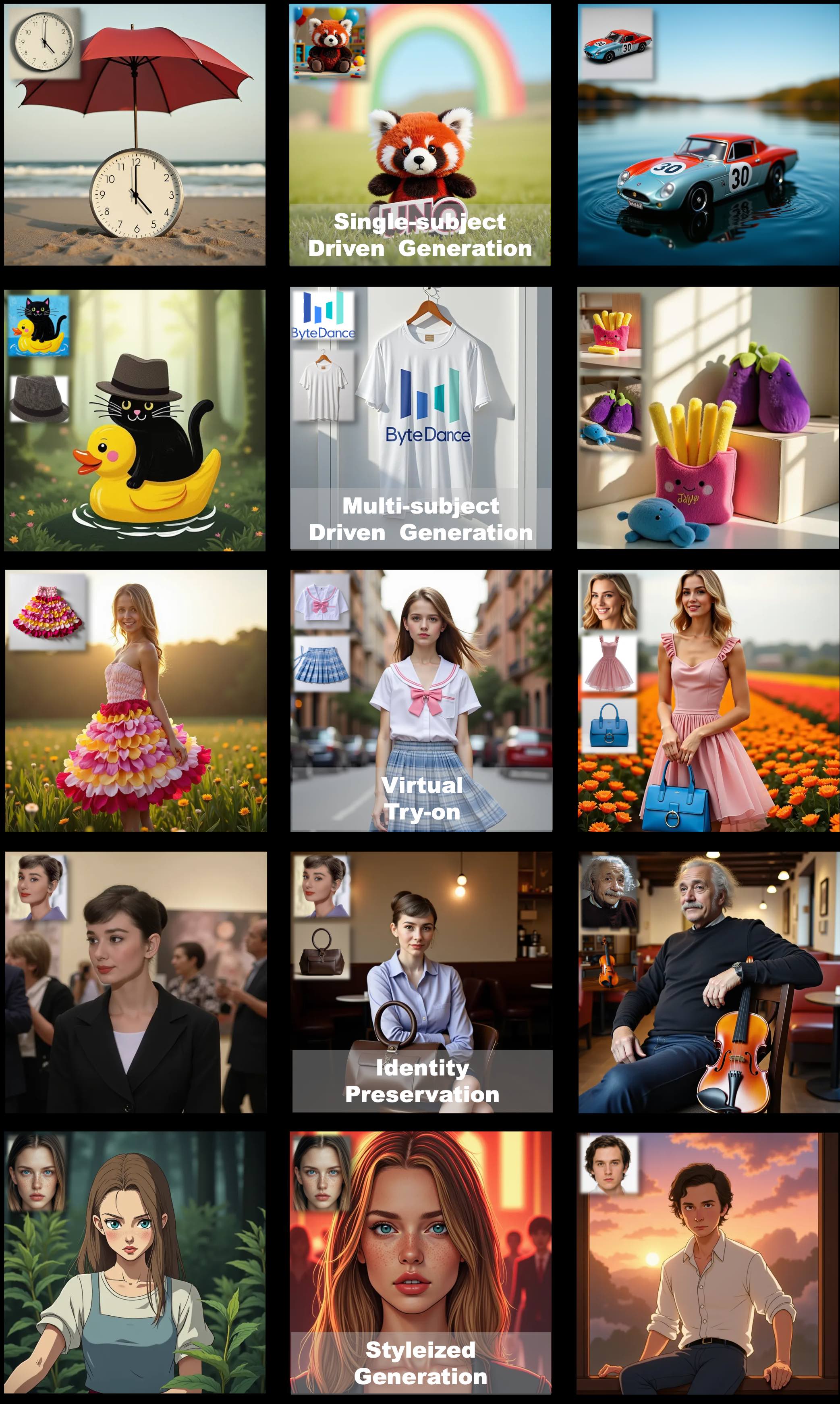

The UNO model demonstrates diverse practical capabilities, applicable to:

- Multi-Subject Customized Generation: Placing multiple specific objects in the same scene while maintaining their individual characteristics

- Virtual Try-On and Product Display: Showcasing specific products or services in different environments

- Brand Customized Content: Integrating brand elements into various scenes while maintaining brand consistency

- Creative Design and Content Production: Providing designers and content creators with richer creative possibilities

Generalization Capabilities

The UNO model demonstrates powerful generalization capabilities, unifying various tasks:

- Single-Subject to Multi-Subject Transfer: Expanding from simple scenes to complex scenarios

- Adaptation to Different Styles: Adapting to different style requirements while maintaining subject characteristics

- Integration of Multiple Creative Tasks: One model handling tasks that previously required multiple specialized models

Online Experience

You can personally experience UNO’s powerful capabilities through the following interface:

Technical Parameters and Open Source Information

The UNO model is developed based on FLUX.1 and open-sourced by the ByteDance team, including training code, inference code, and model weights.

The project is available on GitHub, allowing researchers and developers to freely access and use the technology. The project code follows the Apache 2.0 license, while the model weights are released under the CC BY-NC 4.0 license. It’s worth noting that any models related to the FLUX.1-dev base model must comply with the original license terms.