DeepSeek Open-Sources Janus-Pro-7B: Multimodal AI Model

Chinese AI company DeepSeek announced the open-sourcing of its next-generation multimodal model, Janus-Pro-7B, in the early hours of today. The model surpasses OpenAI’s DALL-E 3 and Stable Diffusion 3 in tasks such as image generation and visual question answering, and has caused a sensation in the AI community with its “understanding-generation dual-path” architecture and minimalist deployment solution.

Performance: Small Model Outperforms Industry Giants

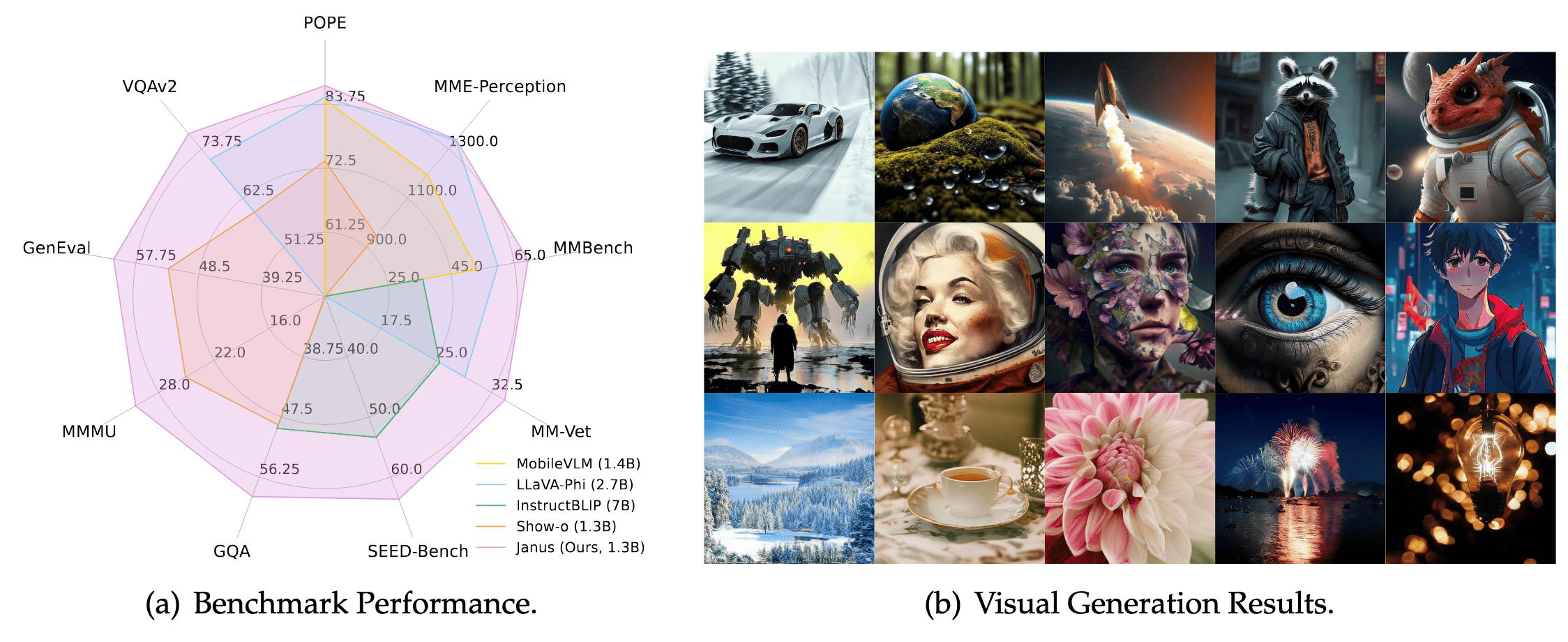

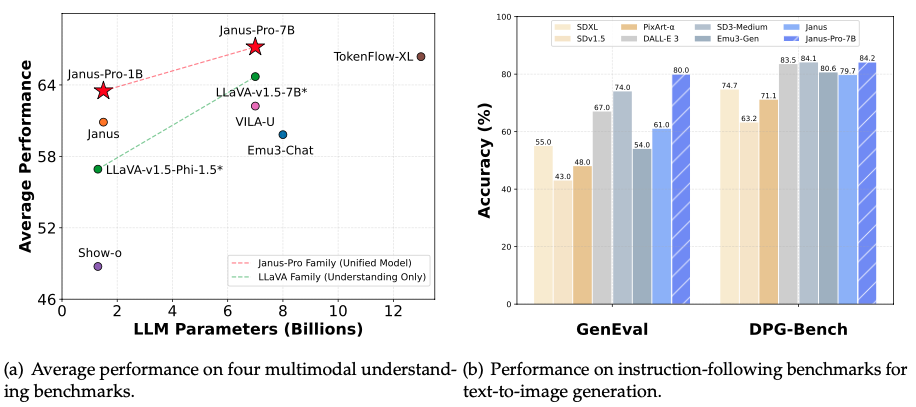

Despite having only 7 billion parameters (approximately 1/25th of GPT-4), Janus-Pro-7B outperforms its competitors in key tests:

- Text-to-Image Quality: Achieves 80% accuracy in the GenEval test, beating DALL-E 3 (67%) and Stable Diffusion 3 (74%)

- Complex Instruction Understanding: Scores 84.19% accuracy in the DPG-Bench test, accurately generating complex scenes such as “a snow-capped mountain with a blue lake at its base”

- Multimodal Question Answering: Visual question answering accuracy surpasses GPT-4V, with an MMBench test score of 79.2, close to professional analysis models
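To make the text-to-image margins concrete, the GenEval figures quoted above can be compared directly. This is only an arithmetic sketch over the numbers in this article; the dictionary and variable names are illustrative and do not come from any benchmark tooling.

```python
# GenEval scores as quoted in the list above (percent).
geneval = {
    "Janus-Pro-7B": 80,
    "DALL-E 3": 67,
    "Stable Diffusion 3": 74,
}

baseline = geneval["Janus-Pro-7B"]

# Lead of Janus-Pro-7B over each competitor, in percentage points.
margins = {
    name: baseline - score
    for name, score in geneval.items()
    if name != "Janus-Pro-7B"
}

for name, gap in margins.items():
    print(f"Janus-Pro-7B leads {name} by {gap} percentage points")
```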

Technical Breakthrough: Dual-Path Collaboration Like “Janus”

Traditional models use the same visual encoder for both understanding and generating images, akin to asking a chef to design a menu and cook at the same time. Janus-Pro-7B innovatively splits visual processing into two independent paths:

- Understanding Path: Uses the SigLIP-L visual encoder to quickly extract core information from images (e.g., “This is an orange cat on a sofa”)

- Generation Path: Converts images into discrete tokens via a VQ tokenizer, then builds up details piece by piece like assembling Lego blocks (e.g., fur texture, lighting effects)

This “divide and conquer” design resolves the role conflict in traditional models, and training on a mix of 72 million synthetic images and real data enhances generation stability.
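The difference between the two paths can be illustrated with a toy example. The sketch below is not DeepSeek's code: the four-entry codebook, the 2-D "patch" vectors, and the function names `understanding_path` and `generation_path` are all hypothetical stand-ins. It only shows the general idea that one path summarizes an image as continuous features, while the other maps each patch to the index of its nearest codebook entry, which is the core step of vector quantization (VQ).

```python
import math

# Hypothetical toy codebook: each entry is a 2-D "visual code" vector.
# A real VQ tokenizer learns a far larger, higher-dimensional codebook.
CODEBOOK = [
    (0.0, 0.0),
    (1.0, 0.0),
    (0.0, 1.0),
    (1.0, 1.0),
]


def understanding_path(patches):
    """Stand-in for a semantic encoder: one continuous feature vector
    (here simply the mean of the patch vectors) for the whole image."""
    n = len(patches)
    return tuple(sum(p[i] for p in patches) / n for i in range(2))


def generation_path(patches):
    """Stand-in for a VQ tokenizer: map each patch to the index of
    its nearest codebook entry, giving a list of discrete tokens."""
    tokens = []
    for patch in patches:
        distances = [math.dist(patch, code) for code in CODEBOOK]
        tokens.append(distances.index(min(distances)))
    return tokens


def decode(tokens):
    """Look the tokens back up in the codebook (lossy reconstruction)."""
    return [CODEBOOK[t] for t in tokens]


# Four made-up patch vectors standing in for an image.
patches = [(0.1, 0.2), (0.9, 0.1), (0.2, 0.8), (0.95, 0.9)]

features = understanding_path(patches)  # continuous summary
tokens = generation_path(patches)       # discrete token ids

print("features:", features)
print("tokens:", tokens)
print("decoded:", decode(tokens))
```

Keeping the two representations separate means the summary features do not have to preserve fine detail, and the discrete tokens do not have to carry high-level semantics.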

Open Source and Commercial Use

- Free for Commercial Use: Released under the MIT license, allowing unlimited commercial use

- Minimalist Deployment: Offers 1.5B (requires 16GB VRAM) and 7B (requires 24GB VRAM) versions, runnable on standard GPUs

- One-Click Generation: Official Gradio interface provided; input `generate_image(prompt="snow-capped mountain at sunset", num_images=4)` to batch-generate images
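As a rough sanity check on the VRAM figures above, the memory taken by the weights alone can be estimated from the parameter count. The sketch below assumes 16-bit weights (2 bytes per parameter), which is an assumption and not something this article states; the helper name `weight_memory_gb` is illustrative. Activations, caches, and framework overhead come on top of this, which is one plausible reason the quoted requirements are higher than the raw weight size.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory needed to hold the weights alone, in decimal gigabytes.

    bytes_per_param=2 assumes 16-bit weights (an assumption, not a
    figure from the article).
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts for the two versions named in the list above.
versions = {"1.5B": 1.5e9, "7B": 7e9}

estimates = {name: weight_memory_gb(n) for name, n in versions.items()}

for name, gb in estimates.items():
    print(f"{name}: about {gb:.1f} GB for weights at 2 bytes per parameter")
```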

Official Resources:

- GitHub Repository: https://github.com/deepseek-ai/Janus

- Model Download: HuggingFace Janus-Pro-7B

Application Scenarios: From Art to Privacy Protection

- Creative Industries: Designers input text to generate poster prototypes; game developers quickly build scene assets

- Educational Tools: Teachers use the model to generate dynamic illustrations of volcanic eruptions for geography lessons

- Enterprise Privacy: Hospitals and banks can deploy locally, avoiding the need to upload patient records or financial data to the cloud

- Cultural Dissemination: Recognizes global landmarks (e.g., Hangzhou’s West Lake) and generates images with cultural symbols
