ByteDance Releases Sa2VA: First Unified Image-Video Understanding Model

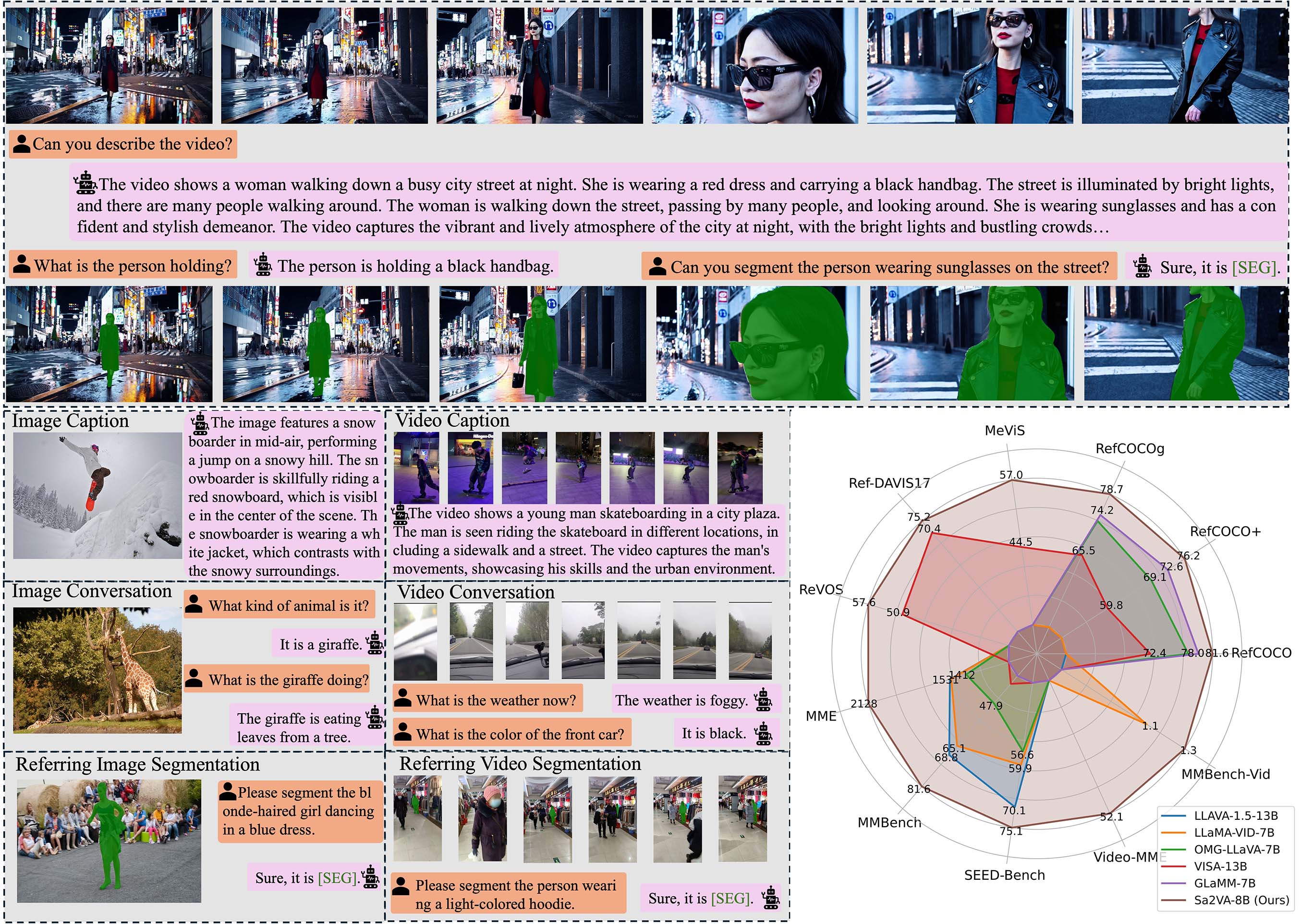

Today, ByteDance released the Sa2VA (SAM2 + LLaVA) multimodal model on the Hugging Face platform. This is the first dense segmentation understanding model capable of processing both images and videos simultaneously. Sa2VA combines Meta’s SAM2 segmentation technology with LLaVA’s visual question-answering capabilities, adding visual prompt understanding and dense object segmentation functionality while maintaining question-answering performance comparable to state-of-the-art multimodal models.

Technical Features: A New Breakthrough in Multimodal Understanding

Sa2VA’s core innovation lies in organically integrating two advanced technologies:

1. Visual Segmentation Capabilities

- Dense Object Segmentation: Capable of accurately identifying and segmenting multiple objects in images and videos

- Visual Prompt Understanding: Supports interactive segmentation through visual cues such as masks

- Cross-Frame Consistency: Maintains temporal continuity of object segmentation in video processing

2. Multimodal Question Answering

- Image Understanding: Provides detailed image descriptions and analysis

- Video Analysis: Understands temporal dynamic changes in video content

- Interactive Dialogue: Supports multi-turn conversations based on visual content

Model Series: Multiple Specifications to Meet Different Needs

ByteDance has built a complete Sa2VA model family based on the Qwen2.5-VL and InternVL series:

| Model Name | Base Model | Language Model | Parameter Scale |

|---|---|---|---|

| Sa2VA-InternVL3-2B | InternVL3-2B | Qwen2.5-1.5B | 2B |

| Sa2VA-InternVL3-8B | InternVL3-8B | Qwen2.5-7B | 8B |

| Sa2VA-InternVL3-14B | InternVL3-14B | Qwen2.5-14B | 14B |

| Sa2VA-Qwen2_5-VL-3B | Qwen2.5-VL-3B | Qwen2.5-3B | 3B |

| Sa2VA-Qwen2_5-VL-7B | Qwen2.5-VL-7B | Qwen2.5-7B | 7B |

Performance: Leading Results in Multiple Benchmarks

Sa2VA demonstrates excellent performance in multiple standard tests:

Visual Question Answering Capabilities

- MME Test: Sa2VA-InternVL3-14B achieved 1746/724 points

- MMBench: 84.3 points, approaching professional visual understanding model levels

Segmentation Task Performance

- RefCOCO Series: Performed excellently in referring expression segmentation tasks

- Video Segmentation: Achieved top performance in MeVIS and DAVIS benchmark tests

Application Scenarios: Extensive Practical Value

Sa2VA’s unified architecture brings new possibilities to multiple domains:

1. Content Creation

- Video Editing: Automatically identifies and segments objects in videos, simplifying post-production processes

- Image Annotation: Provides precise object segmentation and descriptions for large-scale image datasets

2. Education and Training

- Interactive Teaching: Helps students understand complex concepts through visual prompts and question answering

- Content Analysis: Automatically analyzes key information points in teaching videos

3. Security and Surveillance

- Intelligent Analysis: Real-time analysis of personnel and object behavior in surveillance videos

- Anomaly Detection: Identifies abnormal situations by combining visual understanding and segmentation capabilities

4. Medical Imaging

- Assisted Diagnosis: Analyzes medical images and provides detailed regional descriptions

- Lesion Localization: Precisely segments and annotates regions of interest

Open-Source Resources and Access

Sa2VA adopts an open-source release strategy, providing convenience for researchers and developers:

Official Resource Links:

- Project Homepage: GitHub Sa2VA

- Paper: arXiv:2501.04001

- Model Download: Hugging Face Sa2VA Series

The release of Sa2VA marks the evolution of multimodal AI toward a more unified and practical direction. Its design approach of deeply integrating visual segmentation with language understanding opens new possibilities for future AI applications.