---
title: "FlexiAct: Flexible Action Control in Heterogeneous Scenarios"
description: "FlexiAct, developed jointly by Tsinghua University and Tencent ARC Lab, can transfer actions from a reference video to any target image while maintaining identity consistency"
tag: AI, video-generation, action-control, image-to-video
date: 2025-05-08
---

FlexiAct: Flexible Action Control in Heterogeneous Scenarios

A research team from Tsinghua University and Tencent ARC Lab has released FlexiAct, a method that transfers actions from a reference video to any target image and remains robust even when the two differ in layout, viewpoint, or skeletal structure. The work has been accepted to SIGGRAPH 2025.

Technical Background

Action customization means generating videos in which a subject performs the actions specified by input control signals. Current methods rely mainly on pose guidance or global motion customization, but they require the reference and target to share the same spatial structure (consistent layout, skeleton, and viewpoint), which makes them hard to adapt to different subjects and scenarios.

Technical Innovation

FlexiAct is designed to overcome these limitations and provides:

- Precise action control

- Spatial structure adaptation

- Identity consistency preservation

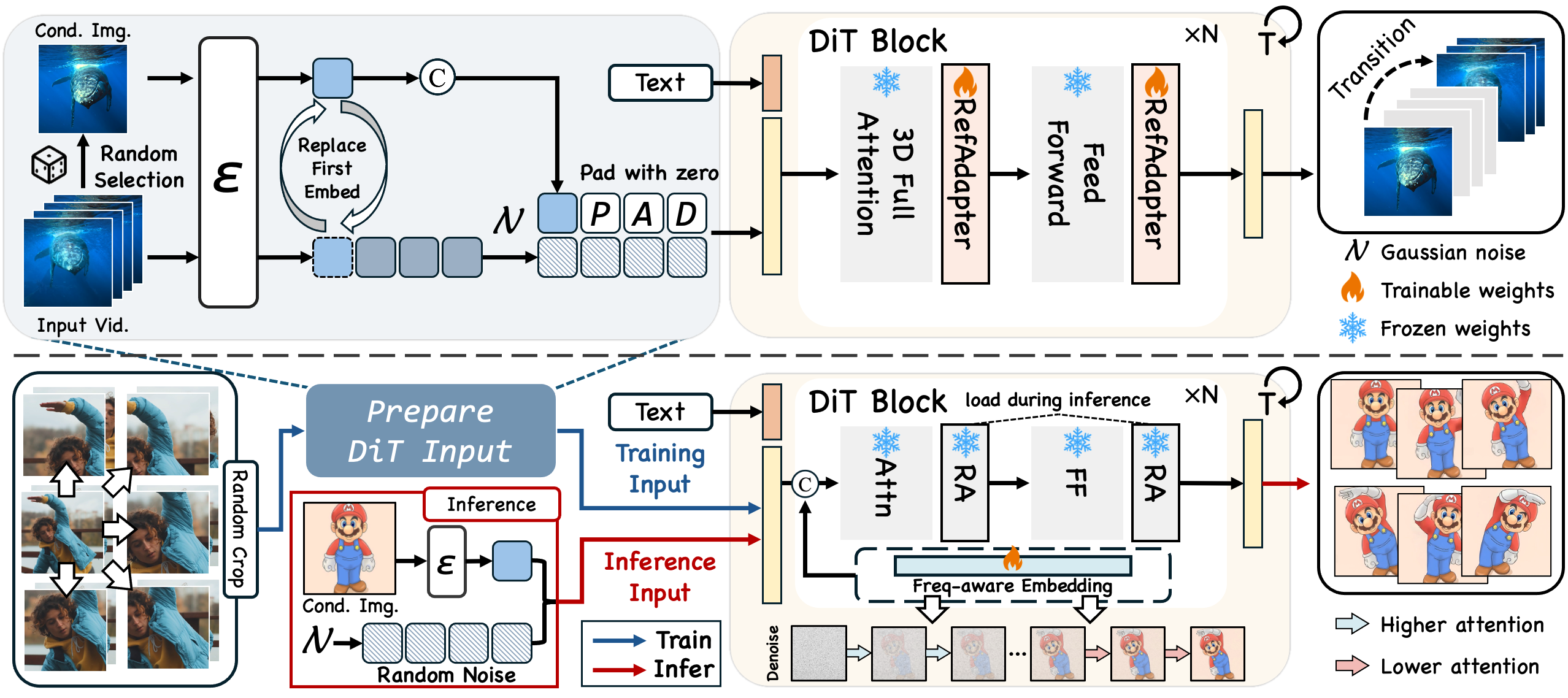

The technology is built around two key components:

- RefAdapter: A lightweight image-conditioned adapter designed for spatial adaptation and consistency preservation, balancing appearance consistency against structural flexibility.

- FAE (Frequency-aware Action Extraction): The research team observed that, at different denoising timesteps, the model attends to motion (low-frequency information) and appearance details (high-frequency information) to different degrees. FAE uses this observation to extract the action directly during the denoising process, without relying on a separate spatio-temporal architecture.
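As a rough intuition for what "low frequency" and "high frequency" mean here, the minimal sketch below (not FlexiAct code) splits a 1-D signal into a smooth component and a residual using a moving-average low-pass filter. A slowly varying sine stands in for coarse motion and a fast sine stands in for fine appearance detail. The function name `split_frequencies`, the window size, and the toy signal are illustrative assumptions; FlexiAct operates on video latents inside the diffusion model, not on raw 1-D signals.

```python
import math
from typing import List, Tuple


def split_frequencies(signal: List[float], window: int = 9) -> Tuple[List[float], List[float]]:
    """Split a 1-D signal into low- and high-frequency parts (illustrative only).

    The low band is a centred moving average, a simple low-pass filter.
    The high band is the residual, so low + high reproduces the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    n = len(signal)
    low = []
    for i in range(n):
        # Shrink the window at the borders so every sample still gets an average.
        lo, hi = max(0, i - half), min(n, i + half + 1)
        low.append(sum(signal[lo:hi]) / (hi - lo))
    high = [s - l for s, l in zip(signal, low)]
    return low, high


if __name__ == "__main__":
    n = 128
    slow = [math.sin(2 * math.pi * t / 64) for t in range(n)]       # stand-in for coarse motion
    fast = [0.3 * math.sin(2 * math.pi * t / 3) for t in range(n)]  # stand-in for fine detail
    signal = [a + b for a, b in zip(slow, fast)]
    low, high = split_frequencies(signal, window=9)
    interior = range(4, n - 4)  # samples where the full 9-sample window fits
    print("max |low - slow| :", max(abs(low[i] - slow[i]) for i in interior))
    print("max |high - fast|:", max(abs(high[i] - fast[i]) for i in interior))
```

Because the window spans exactly three periods of the fast component, the average cancels it almost entirely, so the low band tracks the slow "motion" and the residual tracks the fast "detail".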

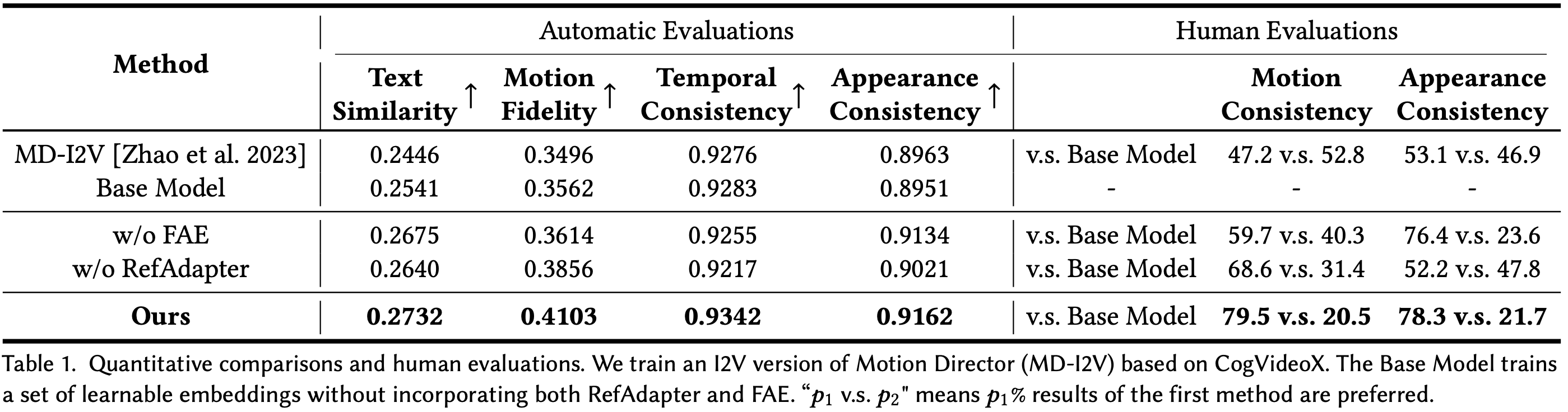
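The article does not spell out how FAE turns its timestep observation into a mechanism. One plausible reading, sketched here purely as an illustration, is a timestep-dependent bias that strengthens attention to action-related tokens at high-noise steps. This assumes, as is commonly observed for diffusion models, that coarse low-frequency structure such as motion is settled early in denoising. The names `action_bias` and `attention_with_action_bias`, the linear schedule, and the `max_bias` parameter are all hypothetical and are not FlexiAct's API.

```python
import math
from typing import Iterable, List


def action_bias(t: int, num_steps: int, max_bias: float = 1.0) -> float:
    """Hypothetical bias on attention logits for action tokens at timestep t.

    t counts down from num_steps - 1 (pure noise) to 0 (clean sample).
    The bias decays linearly from max_bias to 0 as denoising progresses.
    """
    return max_bias * t / (num_steps - 1)


def softmax(logits: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attention_with_action_bias(
    logits: List[float],
    action_idx: Iterable[int],
    t: int,
    num_steps: int,
    max_bias: float = 2.0,
) -> List[float]:
    """Add the timestep-dependent bias to action-token logits, then normalize."""
    idx = set(action_idx)
    b = action_bias(t, num_steps, max_bias)
    biased = [x + b if i in idx else x for i, x in enumerate(logits)]
    return softmax(biased)


if __name__ == "__main__":
    logits = [0.0, 0.0, 0.0, 0.0]  # token 0 plays the role of the action embedding
    for t in (49, 25, 0):
        weights = attention_with_action_bias(logits, {0}, t=t, num_steps=50)
        print(f"t={t:2d}  attention on action token = {weights[0]:.3f}")
```

With equal raw logits, the action token receives about 0.71 of the attention at the noisiest step and falls back to a uniform 0.25 at the final step. A real model would learn or tune this schedule rather than fix it by hand.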

In the team's comparisons, FlexiAct shows clear advantages over existing methods in heterogeneous scenarios.

Application Scenarios

FlexiAct can be applied in scenarios such as:

- Human Action Transfer: Transferring human actions to game characters or cartoon figures

- Animal Animation Generation: Animating still images of animals

- Camera Motion Effects: Generating camera-motion effects under different viewpoints

- Cross-domain Action Transfer: Transferring actions between different species, such as applying human actions to animals

Data and Models

The research team built a dedicated dataset for this work, covering three categories of action:

- Human Actions: Walking, crouching, jumping, etc.

- Animal Actions: Running, jumping, standing, etc.

- Camera Actions: Forward movement, rotation, zooming, etc.

FlexiAct is built on the CogVideoX-5B video generation model.

Open Source Resources

The research team has open-sourced related resources, including:

- FlexiAct pre-trained models (based on CogVideoX-5B)

- Datasets for training and testing

- Code for training and inference

- Detailed instructions and examples

Future Plans

According to the project update log, the research team plans to:

- Release training and inference code

- Release FlexiAct checkpoints (based on CogVideoX-5B)

- Release training data

- Release Gradio demo