ComfyUI Basic Outpainting Workflow and Tutorial

Outpainting extends an image past its original borders by generating new content along its edges that continues the existing picture. This technique lets us:

- Expand the field of view of the image

- Fill in parts of the scene that lie outside the original frame

- Adjust the aspect ratio of the image

Outpainting builds on Stable Diffusion's inpainting technique: a blank area is added along the edges of the image, and an inpainting model then fills that area, which extends the image.
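As a toy illustration of the fill step (not ComfyUI code), the sketch below treats a grayscale image as nested Python lists. It keeps original pixels wherever the mask is 0 and takes new content wherever the mask is 1. The `generated` grid is a hypothetical stand-in for the inpainting model's output; a real model works in latent space, so after VAE decoding the original region can still shift slightly.

```python
from typing import List

Grid = List[List[float]]


def merge_outpaint(original_padded: Grid, generated: Grid, mask: Grid) -> Grid:
    """Blend per pixel: mask 1.0 takes the generated value, 0.0 keeps the original."""
    if not (len(original_padded) == len(generated) == len(mask)):
        raise ValueError("all grids must have the same height")
    result: Grid = []
    for orig_row, gen_row, mask_row in zip(original_padded, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("all grids must have the same width")
        result.append([
            m * g + (1.0 - m) * o
            for o, g, m in zip(orig_row, gen_row, mask_row)
        ])
    return result


# One row: a gray padding pixel (0.5) on the left, then two original pixels.
padded = [[0.5, 0.2, 0.8]]
model_output = [[0.9, 0.0, 0.0]]  # hypothetical model output
mask = [[1.0, 0.0, 0.0]]          # 1 = area to fill, 0 = keep original
print(merge_outpaint(padded, model_output, mask))  # [[0.9, 0.2, 0.8]]
```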

ComfyUI Outpainting Tutorial: Related File Downloads

ComfyUI Workflow Files

After downloading, drag the workflow file into the ComfyUI window to load it.

Stable Diffusion Model Files

This workflow uses the following two models (you can substitute other models):

| Model Name | Use | Repository Address | Download Address |
|---|---|---|---|
| v1-5-pruned-emaonly.safetensors | Generate Initial Image | Repository Address | Download Address |
| sd-v1-5-inpainting.ckpt | Outpainting | Repository Address | Download Address |

After downloading, please place these two model files in the following directory:

Path-to-your-ComfyUI/models/checkpoints
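If the models don't appear in the Load Checkpoint drop-down, a quick check like the sketch below can confirm that the files landed in the right folder. It assumes the default `models/checkpoints` layout shown above; `missing_checkpoints` is a hypothetical helper, and `Path-to-your-ComfyUI` must be replaced with your actual install directory.

```python
from pathlib import Path
from typing import Iterable, List

# Checkpoint files this workflow expects (swap in your own if you use other models).
REQUIRED_CHECKPOINTS = (
    "v1-5-pruned-emaonly.safetensors",
    "sd-v1-5-inpainting.ckpt",
)


def missing_checkpoints(comfyui_root: str,
                        names: Iterable[str] = REQUIRED_CHECKPOINTS) -> List[str]:
    """Return the names that are not present as files in <root>/models/checkpoints."""
    ckpt_dir = Path(comfyui_root) / "models" / "checkpoints"
    return [name for name in names if not (ckpt_dir / name).is_file()]


# Replace the placeholder with your ComfyUI install directory.
missing = missing_checkpoints("Path-to-your-ComfyUI")
print("Missing:", ", ".join(missing) if missing else "none")
```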

If you want to learn more: How to Install Checkpoints Model

If you want to find and use other models: Stable Diffusion Model Resources

ComfyUI Basic Outpainting Workflow Usage

Because model file names and locations may differ on your system, find each Load Checkpoint node in the workflow and select the model file you downloaded from its model drop-down menu:

- Select v1-5-pruned-emaonly.safetensors for the first node

- Select sd-v1-5-inpainting.ckpt for the second node

The second node loads a model trained specifically for inpainting, which generally fills the extended area better than a regular model. Feel free to test different settings yourself.
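If you drive ComfyUI from scripts instead of the UI, the same choice can be made by editing the `ckpt_name` input of each CheckpointLoaderSimple node. This sketch assumes the workflow has been exported in ComfyUI's API format (a JSON object keyed by node ID); the node IDs "4" and "29" are hypothetical, so look up the real ones in your own export. The drag-and-drop workflow file is likely saved in the UI-oriented layout, so the sketch would not apply to it directly.

```python
import json
from typing import Dict

# Hypothetical node IDs: look up the real ones in your own API-format export.
CHECKPOINT_CHOICES: Dict[str, str] = {
    "4": "v1-5-pruned-emaonly.safetensors",  # first Load Checkpoint (text-to-image)
    "29": "sd-v1-5-inpainting.ckpt",         # second Load Checkpoint (outpainting)
}


def set_checkpoints(workflow: dict, choices: Dict[str, str]) -> dict:
    """Set ckpt_name on CheckpointLoaderSimple nodes of an API-format workflow (in place)."""
    for node_id, ckpt_name in choices.items():
        node = workflow[node_id]
        if node["class_type"] != "CheckpointLoaderSimple":
            raise ValueError(f"node {node_id} is {node['class_type']}, not a checkpoint loader")
        node["inputs"]["ckpt_name"] = ckpt_name
    return workflow


workflow = {
    "4": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": "old.safetensors"}},
    "29": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": "old.ckpt"}},
}
print(json.dumps(set_checkpoints(workflow, CHECKPOINT_CHOICES), indent=2))
```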

ComfyUI Basic Outpainting Workflow Simple Explanation

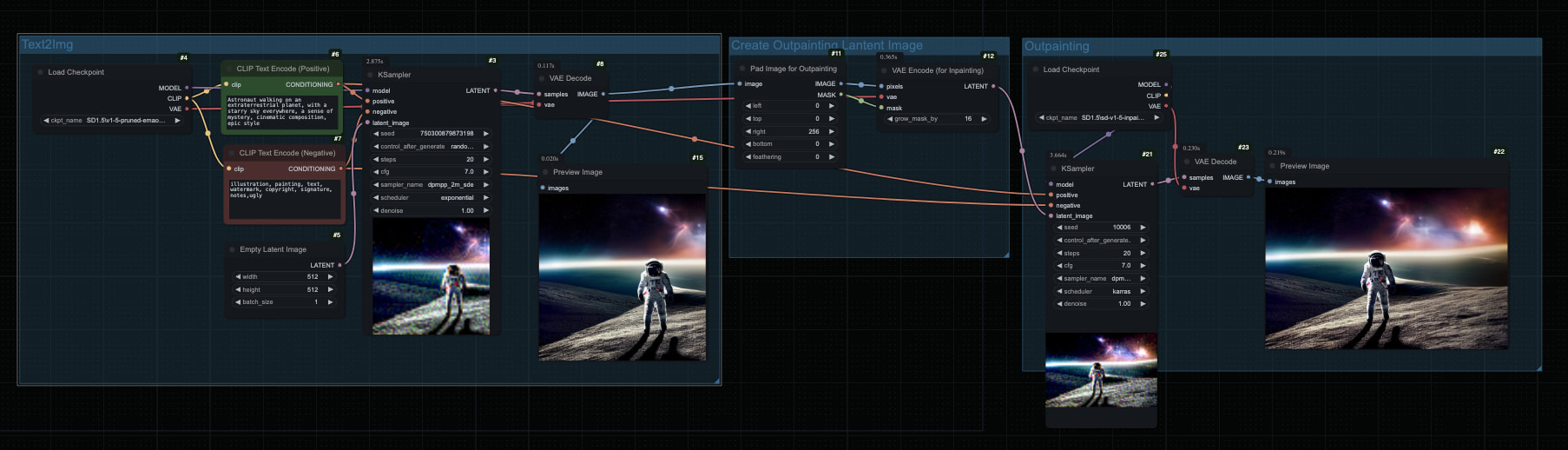

This workflow is divided into three main parts:

1. Text2Img Part

First, generate an initial image:

- Use EmptyLatentImage to set a 512x512 canvas

- Set the positive and negative prompts with CLIPTextEncode

- Generate the initial image with KSampler
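For reference, here is how this text-to-image part might look in ComfyUI's API (prompt) format, where a link to another node's output is written as `[node_id, output_index]`. The node IDs, prompt text, seed, steps, CFG, sampler, and scheduler values are illustrative assumptions, not the settings saved in the downloadable workflow. A VAEDecode node is included so the result can be padded in the next part. CheckpointLoaderSimple outputs MODEL, CLIP, and VAE at indexes 0, 1, and 2.

```python
# Illustrative API-format sketch of the text-to-image part (values are assumptions).
text2img = {
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "v1-5-pruned-emaonly.safetensors"}},
    "5": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "6": {"class_type": "CLIPTextEncode",  # positive prompt
          "inputs": {"text": "a cozy cabin in a snowy forest", "clip": ["4", 1]}},
    "7": {"class_type": "CLIPTextEncode",  # negative prompt
          "inputs": {"text": "blurry, low quality", "clip": ["4", 1]}},
    "3": {"class_type": "KSampler",
          "inputs": {"model": ["4", 0], "positive": ["6", 0], "negative": ["7", 0],
                     "latent_image": ["5", 0], "seed": 42, "steps": 20, "cfg": 8.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
    "8": {"class_type": "VAEDecode",  # latent -> pixels, needed before padding
          "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
}

# SD 1.5 latents are 8x smaller per side, so a 512x512 canvas is a 64x64 latent.
latent_side = text2img["5"]["inputs"]["width"] // 8
print(latent_side)  # 64
```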

2. Create Latent Image for Outpainting

- Use the ImagePadForOutpaint node to add a blank area around the original image

- The node's parameters determine the direction(s) in which the image is extended

- The node also generates a matching mask for the subsequent outpainting step
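The padding-and-mask step can be sketched in plain Python on a grayscale image stored as nested lists. This is a simplified stand-in for what ImagePadForOutpaint does, not its source: the mid-gray (0.5) fill and the hard-edged mask (1.0 = area to generate, 0.0 = original pixel) are assumptions of this sketch, and the real node also has a feathering setting that softens the mask near the border.

```python
from typing import List, Tuple

Grid = List[List[float]]


def pad_for_outpaint(image: Grid, left: int, top: int, right: int, bottom: int,
                     fill: float = 0.5) -> Tuple[Grid, Grid]:
    """Pad a grayscale image and build a matching hard-edged outpainting mask."""
    height, width = len(image), len(image[0])
    new_h, new_w = top + height + bottom, left + width + right
    # Start with a fully padded canvas and a mask that marks everything as "generate".
    padded = [[fill] * new_w for _ in range(new_h)]
    mask = [[1.0] * new_w for _ in range(new_h)]
    # Copy the original pixels into place and mark them as "keep".
    for y in range(height):
        for x in range(width):
            padded[top + y][left + x] = image[y][x]
            mask[top + y][left + x] = 0.0
    return padded, mask


image = [[0.1, 0.2],
         [0.3, 0.4]]
padded, mask = pad_for_outpaint(image, left=1, top=0, right=0, bottom=1)
print(padded)  # [[0.5, 0.1, 0.2], [0.5, 0.3, 0.4], [0.5, 0.5, 0.5]]
print(mask)    # [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
```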

3. Outpainting Generation

- Use a dedicated inpainting model for outpainting

- Keep the same prompts as the original image to ensure a consistent style

- Generate the extended area with KSampler
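Continuing the API-format view, the outpainting stage might be wired as below. It assumes the first stage's VAEDecode node has ID "8"; all node IDs, padding sizes, and sampler settings are illustrative. It also assumes the padded image and mask are encoded with ComfyUI's VAEEncodeForInpaint node, which takes the mask directly; the downloadable workflow may wire this step differently, so check it before relying on this layout. The prompt text is reused from the first stage, as the steps above recommend.

```python
# Illustrative API-format sketch of the outpainting stage (values are assumptions).
POSITIVE = "a cozy cabin in a snowy forest"  # same prompts as the first stage
NEGATIVE = "blurry, low quality"

outpaint = {
    "29": {"class_type": "CheckpointLoaderSimple",
           "inputs": {"ckpt_name": "sd-v1-5-inpainting.ckpt"}},
    "30": {"class_type": "ImagePadForOutpaint",  # outputs IMAGE (0) and MASK (1)
           "inputs": {"image": ["8", 0], "left": 256, "top": 0,
                      "right": 256, "bottom": 0, "feathering": 40}},
    # Assumption: VAEEncodeForInpaint turns the padded image + mask into a latent.
    "31": {"class_type": "VAEEncodeForInpaint",
           "inputs": {"pixels": ["30", 0], "mask": ["30", 1],
                      "vae": ["29", 2], "grow_mask_by": 6}},
    "32": {"class_type": "CLIPTextEncode",
           "inputs": {"text": POSITIVE, "clip": ["29", 1]}},
    "33": {"class_type": "CLIPTextEncode",
           "inputs": {"text": NEGATIVE, "clip": ["29", 1]}},
    "34": {"class_type": "KSampler",
           "inputs": {"model": ["29", 0], "positive": ["32", 0], "negative": ["33", 0],
                      "latent_image": ["31", 0], "seed": 42, "steps": 20, "cfg": 8.0,
                      "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
    "35": {"class_type": "VAEDecode",
           "inputs": {"samples": ["34", 0], "vae": ["29", 2]}},
}

# Output size for a 512x512 input image.
pad = outpaint["30"]["inputs"]
new_size = (512 + pad["left"] + pad["right"], 512 + pad["top"] + pad["bottom"])
print(new_size)  # (1024, 512)
```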

Instructions for Use

- Adjust the extension area:
  - Set the extension pixel values for the four directions in the ImagePadForOutpaint node
  - The number represents the number of pixels to be extended in that direction

- Prompt setting:
  - The positive prompt describes the scene and style you want
  - The negative prompt helps avoid unwanted elements

- Model selection:
  - Use a regular SD model in the first stage
  - It is recommended to use a dedicated inpainting model for the outpainting stage
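To change the aspect ratio, you need the padding that brings the image to the target ratio. The hypothetical helper below computes it with integer arithmetic and splits it evenly between the two sides. Rounding each side up to a multiple of 8 is a conservative assumption, since SD 1.5 latents are one-eighth of the image size in each dimension. For a 512x512 image and a 16:9 target, it suggests 200 pixels on the left and right, giving a 912x512 canvas.

```python
from typing import Tuple


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division for non-negative a and positive b."""
    return -(-a // b)


def padding_for_aspect(width: int, height: int, target_w: int, target_h: int,
                       multiple: int = 8) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) padding reaching at least the target ratio."""
    if width * target_h < height * target_w:      # too narrow: extend left/right
        extra = ceil_div(height * target_w, target_h) - width
        side = ceil_div(ceil_div(extra, 2), multiple) * multiple
        return side, 0, side, 0
    if width * target_h > height * target_w:      # too wide: extend top/bottom
        extra = ceil_div(width * target_h, target_w) - height
        side = ceil_div(ceil_div(extra, 2), multiple) * multiple
        return 0, side, 0, side
    return 0, 0, 0, 0                             # already at the target ratio


left, top, right, bottom = padding_for_aspect(512, 512, 16, 9)
print(left, top, right, bottom)                # 200 0 200 0
print(512 + left + right, 512 + top + bottom)  # 912 512
```

The four values map directly onto the left, top, right, and bottom settings of ImagePadForOutpaint.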

Notes on ComfyUI Basic Outpainting Workflow

- When outpainting, keep the prompts consistent so that the extended area blends well with the original image

- If the outpainting result is not ideal, you can:
  - Adjust the sampling steps and CFG Scale
  - Try different samplers
  - Fine-tune the prompts

- Recommended outpainting models:
  - sd-v1-5-inpainting.ckpt
  - Other dedicated inpainting models
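When tuning sampling steps, CFG Scale, and the sampler, comparisons are easier with the seed held fixed. The sketch below produces one copy of an API-format workflow per combination of settings. `sweep_ksampler`, the node ID "34", and the trimmed KSampler inputs are hypothetical; `euler` and `dpmpp_2m` are sampler names available in ComfyUI's KSampler. Each variant would then be queued and the results compared side by side.

```python
import copy
import itertools
from typing import Dict, Iterable, List


def sweep_ksampler(workflow: Dict, node_id: str, steps: Iterable[int],
                   cfgs: Iterable[float], samplers: Iterable[str]) -> List[Dict]:
    """Return one deep-copied workflow per (steps, cfg, sampler) combination."""
    variants = []
    for n_steps, cfg, sampler in itertools.product(steps, cfgs, samplers):
        variant = copy.deepcopy(workflow)  # leave the base workflow untouched
        variant[node_id]["inputs"].update(steps=n_steps, cfg=cfg, sampler_name=sampler)
        variants.append(variant)
    return variants


# Trimmed KSampler node (a real one also needs model, prompts, and latent links).
base = {"34": {"class_type": "KSampler",
               "inputs": {"steps": 20, "cfg": 8.0, "sampler_name": "euler",
                          "scheduler": "normal", "denoise": 1.0, "seed": 42}}}
variants = sweep_ksampler(base, "34", steps=[20, 30], cfgs=[6.0, 8.0],
                          samplers=["euler", "dpmpp_2m"])
print(len(variants))  # 8
```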

Structure of ComfyUI Basic Outpainting Workflow

The workflow mainly includes the following nodes:

- CheckpointLoaderSimple: Load the model

- CLIPTextEncode: Process the prompts

- EmptyLatentImage: Create the canvas

- KSampler: Image generation

- ImagePadForOutpaint: Create the outpainting area

- VAEEncode/VAEDecode: Encode and decode the image
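These nodes form a directed graph, and each node can only run after the nodes it takes inputs from. The sketch below lists a simplified version of those dependencies and prints one valid execution order using Python's standard `graphlib` module (Python 3.9+). The labels are descriptive rather than real node IDs, and the positive and negative CLIPTextEncode nodes are collapsed into one per stage. ComfyUI works out its own execution order; this only illustrates how the two stages connect.

```python
from graphlib import TopologicalSorter

# Each node maps to the set of nodes whose outputs it consumes (simplified).
graph = {
    "CheckpointLoaderSimple (SD 1.5)": set(),
    "CheckpointLoaderSimple (inpainting)": set(),
    "EmptyLatentImage": set(),
    "CLIPTextEncode (stage 1)": {"CheckpointLoaderSimple (SD 1.5)"},
    "KSampler (stage 1)": {"CheckpointLoaderSimple (SD 1.5)",
                           "CLIPTextEncode (stage 1)", "EmptyLatentImage"},
    "VAEDecode (stage 1)": {"KSampler (stage 1)", "CheckpointLoaderSimple (SD 1.5)"},
    "ImagePadForOutpaint": {"VAEDecode (stage 1)"},
    "VAEEncode": {"ImagePadForOutpaint", "CheckpointLoaderSimple (inpainting)"},
    "CLIPTextEncode (stage 2)": {"CheckpointLoaderSimple (inpainting)"},
    "KSampler (stage 2)": {"CheckpointLoaderSimple (inpainting)",
                           "CLIPTextEncode (stage 2)", "VAEEncode"},
    "VAEDecode (stage 2)": {"KSampler (stage 2)", "CheckpointLoaderSimple (inpainting)"},
}

# static_order() yields every node after all of its dependencies.
order = list(TopologicalSorter(graph).static_order())
for step, node in enumerate(order, start=1):
    print(step, node)
```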