How to Use OpenPose ControlNet SD1.5 Model in ComfyUI

Introduction to SD1.5 OpenPose ControlNet

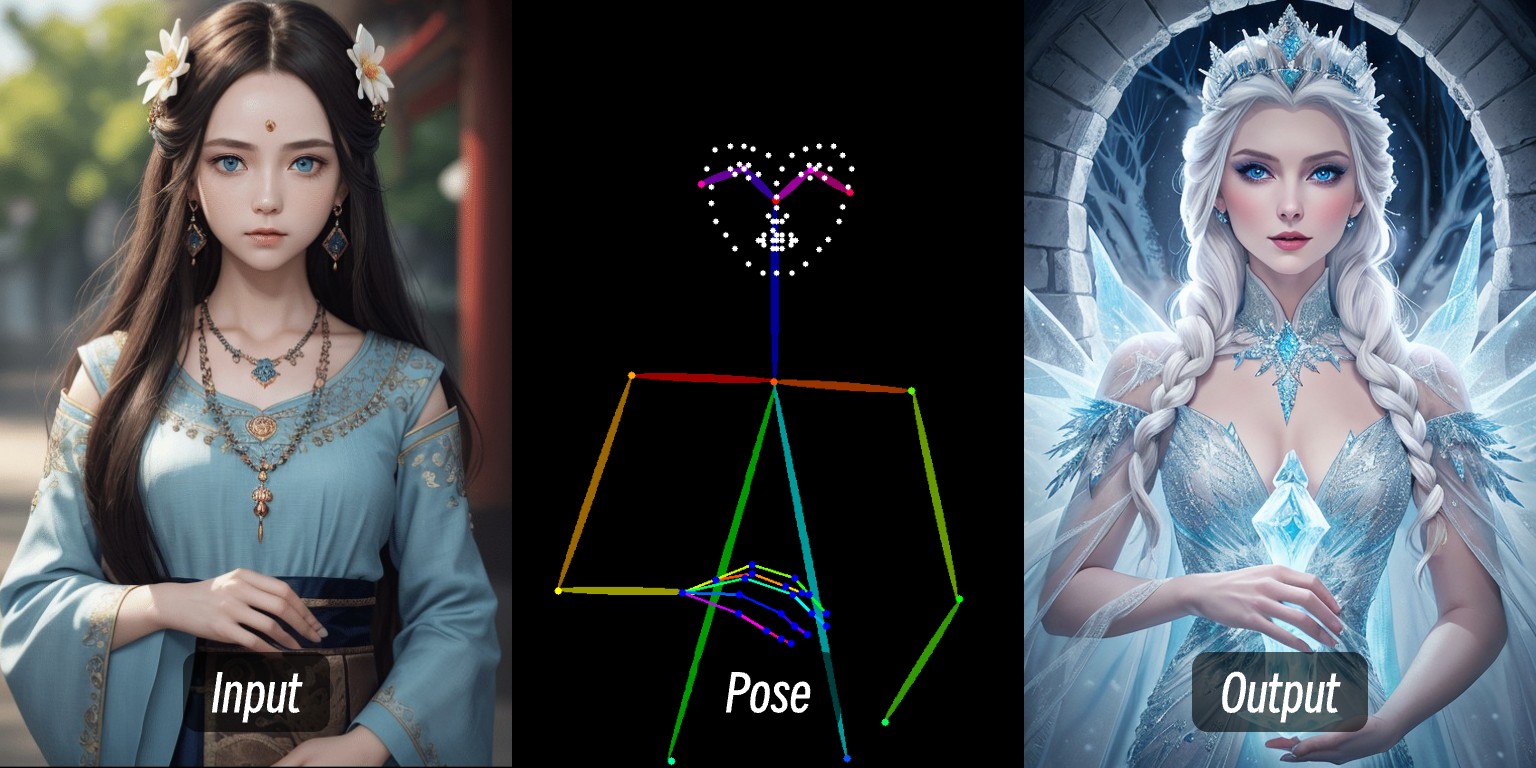

OpenPose ControlNet is a specialized ControlNet model for controlling human poses in images. It analyzes the poses of the people in an input image and uses them to guide generation, so the new image keeps the same poses. Because it captures body posture accurately, it works particularly well for generating human figures, anime characters, and game characters.

This tutorial focuses on using the OpenPose ControlNet model with SD1.5. Tutorials for other versions and types of ControlNet models will be added later.

Using OpenPose ControlNet

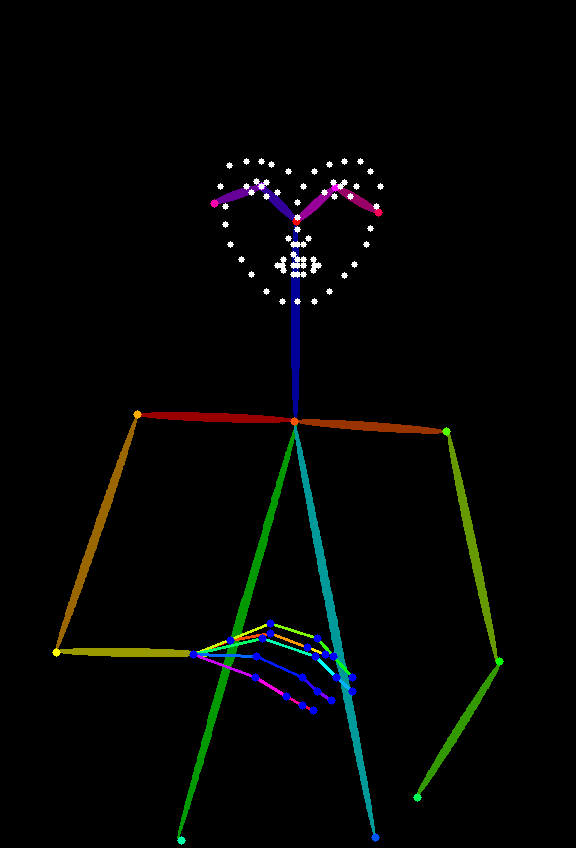

OpenPose ControlNet takes an OpenPose image, which is a skeleton diagram of the body pose, as its control input. The OpenPose ControlNet model then uses that image to control the poses in the generated image. An example of an OpenPose image is shown above.

As with Depth images, you can generate OpenPose images with the ComfyUI ControlNet Auxiliary Preprocessors plugin. If you'd rather not install this plugin, online tools such as open-pose-editor can also produce OpenPose images for pose control. However, we strongly recommend installing the ComfyUI ControlNet Auxiliary Preprocessors plugin, because you will use it often in day-to-day work.
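An OpenPose image is essentially a set of body keypoints joined by colored sticks. The sketch below shows how detected keypoints become the line segments of a skeleton diagram. It assumes the 18-point COCO body layout that OpenPose-style annotators commonly use; the index order, the limb list and the helper name `skeleton_segments` are assumptions for illustration and are not taken from this tutorial or from the plugin.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]

# Assumed keypoint order: the 18-point COCO body layout used by OpenPose
# (0 = nose, 1 = neck, 2-4 = right arm, 5-7 = left arm,
#  8-10 = right leg, 11-13 = left leg, 14-17 = eyes and ears).
BODY_KEYPOINTS = [
    "nose", "neck",
    "r_shoulder", "r_elbow", "r_wrist",
    "l_shoulder", "l_elbow", "l_wrist",
    "r_hip", "r_knee", "r_ankle",
    "l_hip", "l_knee", "l_ankle",
    "r_eye", "l_eye", "r_ear", "l_ear",
]

# Limbs as pairs of keypoint indices; each pair is drawn as one stick.
LIMBS = [
    (1, 2), (1, 5), (2, 3), (3, 4), (5, 6), (6, 7),        # shoulders and arms
    (1, 8), (8, 9), (9, 10), (1, 11), (11, 12), (12, 13),  # torso and legs
    (1, 0), (0, 14), (14, 16), (0, 15), (15, 17),          # neck, eyes, ears
]


def skeleton_segments(keypoints: List[Optional[Point]]) -> List[Tuple[Point, Point]]:
    """Hypothetical helper: return the segments to draw, skipping limbs with a missing endpoint."""
    if len(keypoints) != len(BODY_KEYPOINTS):
        raise ValueError(f"expected {len(BODY_KEYPOINTS)} keypoints, got {len(keypoints)}")
    segments = []
    for a, b in LIMBS:
        pa, pb = keypoints[a], keypoints[b]
        if pa is not None and pb is not None:
            segments.append((pa, pb))
    return segments
```

A fully detected pose produces 17 sticks. If the nose is not detected, the three limbs that touch it are dropped and 14 remain. This is why partially visible people produce incomplete skeletons.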

Step by Step Tutorial for Using OpenPose ControlNet

1. Upgrade ComfyUI

Since this article uses the new Apply ControlNet node, which differs from the old nodes, we recommend upgrading to or installing the latest version of ComfyUI. You can refer to these tutorials:

2. Install Required Plugins

Since ComfyUI Core doesn't include an OpenPose image preprocessor, you need to install a preprocessor plugin first. This tutorial uses the ComfyUI ControlNet Auxiliary Preprocessors plugin to generate OpenPose images.

We recommend installing it with ComfyUI Manager. For detailed plugin installation instructions, see the ComfyUI Plugin Installation Guide.

The latest version of ComfyUI Desktop comes with the ComfyUI Manager plugin pre-installed.

3. Download Required Models

First, you need to install these models:

| Model Type | Model File | Download Link |
|---|---|---|
| SD1.5 Base Model | dreamshaper_8.safetensors | Civitai |
| OpenPose ControlNet Model | control_v11f1p_sd15_openpose.pth (required) | Hugging Face |

4. Model Storage Location

Please place the model files according to this structure:

📁ComfyUI
├── 📁models
│   ├── 📁checkpoints
│   │   └── 📁SD1.5
│   │       └── dreamshaper_8.safetensors
│   ├── 📁controlnet
│   │   └── 📁SD1.5
│   │       └── control_v11f1p_sd15_openpose.pth

Since SD versions and ControlNet models have version dependencies, I've added an SD1.5 folder layer here to make future model management easier.

After installation, refresh or restart ComfyUI so it can read the model files.
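If the models don't appear in the node dropdowns after a refresh, a quick check is to confirm that the files sit where ComfyUI expects them. The following is a minimal sketch. It assumes the folder layout shown above, including the optional SD1.5 subfolders, and takes your ComfyUI root folder as input. The names `EXPECTED_MODELS` and `missing_models` are hypothetical.

```python
from pathlib import Path
from typing import List

# Hypothetical mapping; relative paths assume the folder layout shown above.
# Adjust them if you stored the files elsewhere or skipped the SD1.5 subfolders.
EXPECTED_MODELS = {
    "SD1.5 base model": Path("models/checkpoints/SD1.5/dreamshaper_8.safetensors"),
    "OpenPose ControlNet model": Path("models/controlnet/SD1.5/control_v11f1p_sd15_openpose.pth"),
}


def missing_models(comfyui_root: str) -> List[str]:
    """Hypothetical helper: names of expected model files not found under comfyui_root."""
    root = Path(comfyui_root)
    return [name for name, rel in EXPECTED_MODELS.items() if not (root / rel).is_file()]


# Example (replace with your own install path):
# print(missing_models("/path/to/ComfyUI") or "all models found")
```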

5. Workflow Files

Two workflow files are provided.

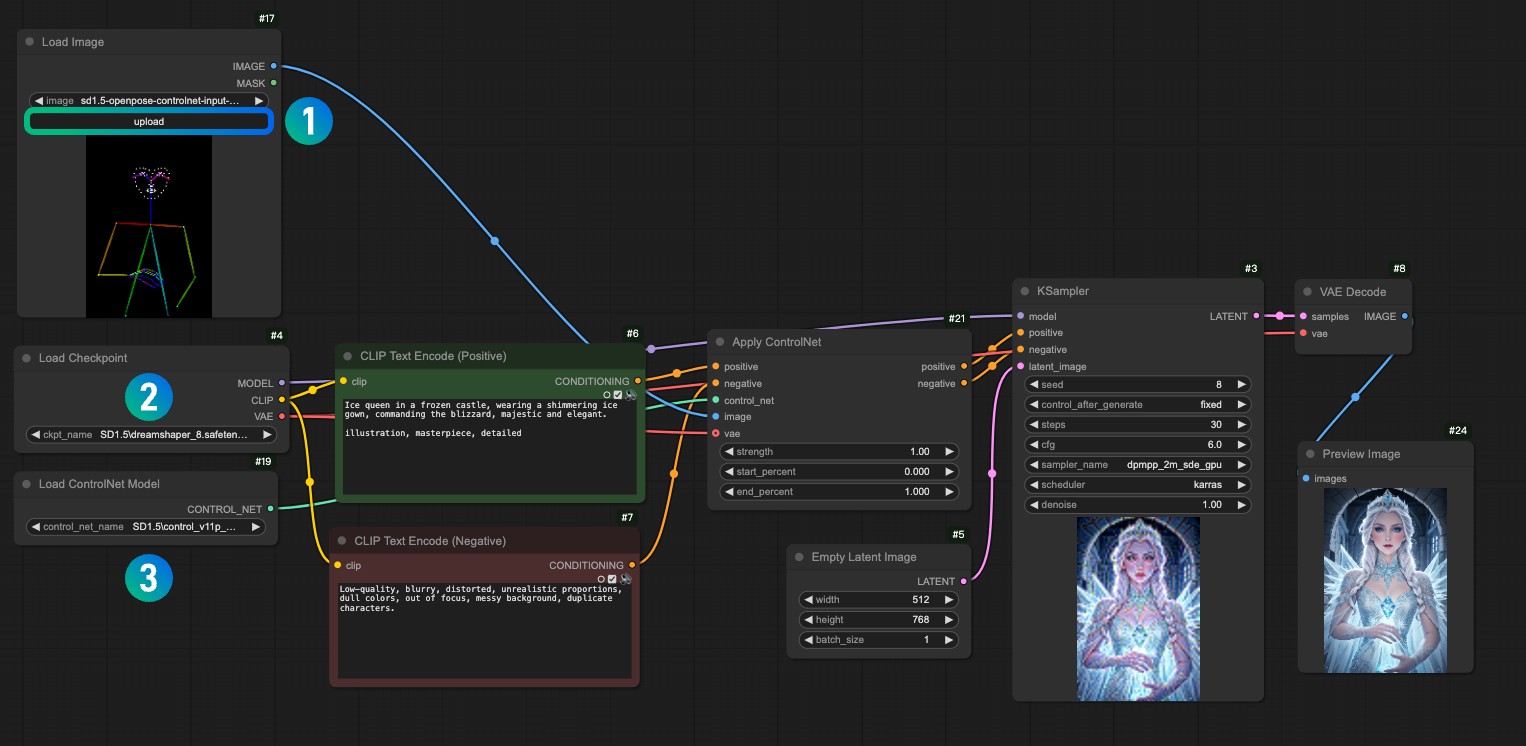

Using OpenPose Image and ControlNet Model for Image Generation

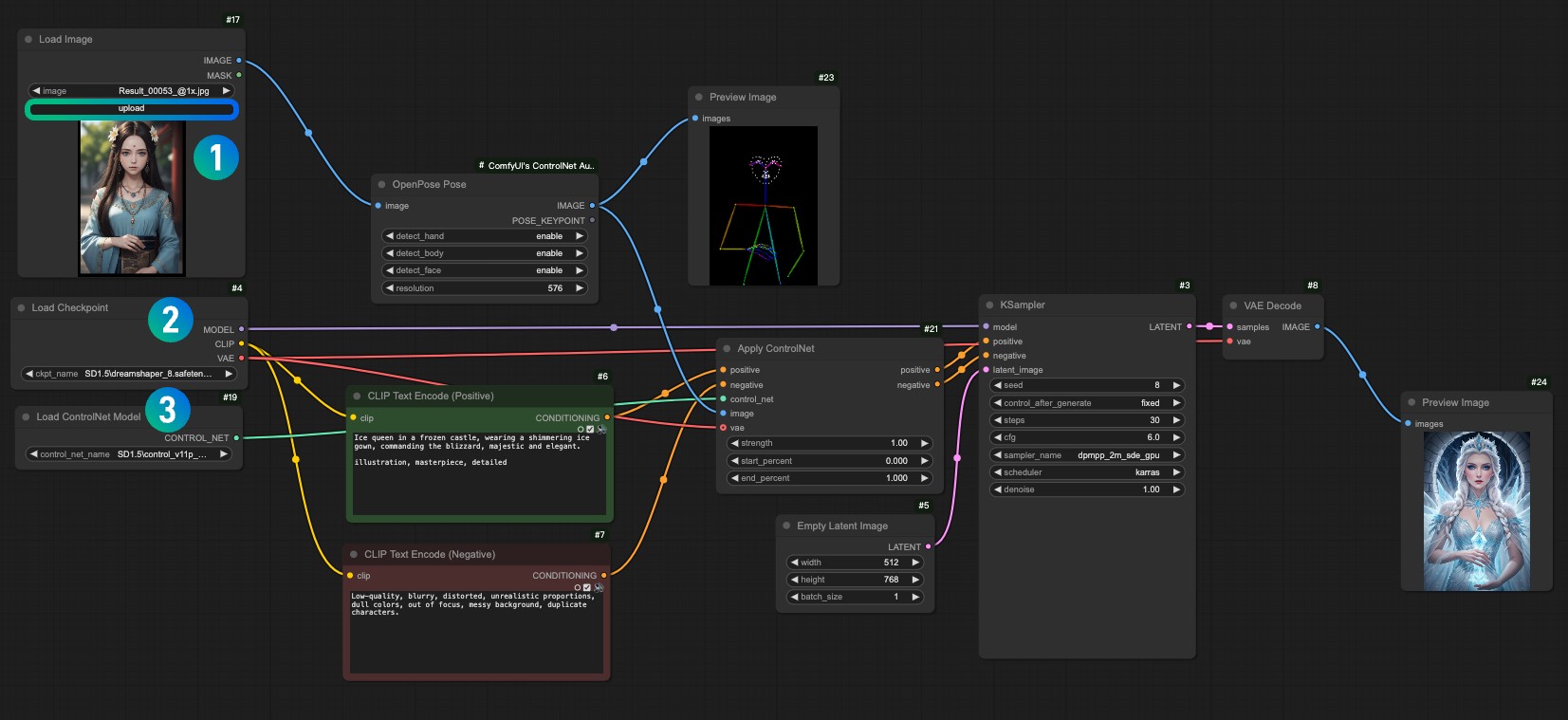

Download the workflow above, then drag it into ComfyUI or press Ctrl+O to open it.

Please download the image below and load it in the Load Image node.

1. Load the reference image in the Load Image node.
2. Select your installed model in the Load Checkpoint node.
3. Select the control_v11f1p_sd15_openpose.pth model in the Load ControlNet Model node that feeds the Apply ControlNet node.
4. Click Queue or press Ctrl+Enter to run the workflow and generate images.
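If you want to run the same workflow from a script instead of clicking Queue, ComfyUI's local server accepts jobs over HTTP through its `/prompt` endpoint.

This is a minimal sketch that makes the following assumptions:

- The server runs at its default address, `http://127.0.0.1:8188`.
- The workflow has been exported in ComfyUI's API format. The workflow file you drag into the UI uses a different, UI-oriented format.

The helper names and the file name in the example are hypothetical.

```python
import json
import urllib.request
import uuid
from typing import Optional

# Assumption: a local ComfyUI server on its default address and port.
COMFYUI_URL = "http://127.0.0.1:8188"


def build_prompt_payload(workflow: dict, client_id: Optional[str] = None) -> dict:
    """Hypothetical helper: wrap an API-format workflow in the JSON body for /prompt."""
    return {"prompt": workflow, "client_id": client_id or str(uuid.uuid4())}


def queue_workflow_file(path: str, url: str = COMFYUI_URL) -> dict:
    """Hypothetical helper: queue an API-format workflow file (scripted Queue / Ctrl+Enter)."""
    with open(path, encoding="utf-8") as f:
        workflow = json.load(f)
    body = json.dumps(build_prompt_payload(workflow)).encode("utf-8")
    request = urllib.request.Request(
        f"{url}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # The server replies with JSON that normally includes a "prompt_id".
        return json.loads(response.read())


# Example (needs a running server and a workflow exported in API format):
# print(queue_workflow_file("openpose_workflow_api.json"))
```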

Using ComfyUI ControlNet Auxiliary Preprocessors to Preprocess Reference Images

Unlike the workflow above, this one covers the case where you don't have a ready-made OpenPose image. It uses the ComfyUI ControlNet Auxiliary Preprocessors plugin to preprocess the reference image, then passes the processed image to the ControlNet model.

Download the workflow above, then drag it into ComfyUI or press Ctrl+O to open it.

Please download the image below and load it in the Load Image node.

1. Load the input image in the Load Image node.
2. Select your installed model in the Load Checkpoint node.
3. Select the control_v11f1p_sd15_openpose.pth model in the Load ControlNet Model node that feeds the Apply ControlNet node.
4. Click Queue or press Ctrl+Enter to run the workflow and generate images.
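To make the wiring of this second workflow concrete, here is a rough sketch of it in ComfyUI's API (JSON) format, written as a Python dict. Each link is written as `[source_node_id, output_index]`.

Please treat the following as assumptions:

- **Core nodes:** the class names and input names reflect my understanding of ComfyUI's built-in nodes.
- **Plugin node:** `OpenposePreprocessor` and its inputs are an assumption about how the plugin registers the OpenPose Pose node.
- **Values:** the prompts, sampler settings and image size are illustrative, not the settings of the provided workflow.
- **Names:** the helper names are hypothetical.

Compare the sketch with a graph you export yourself before relying on it.

```python
from typing import Dict, List, Tuple

# Model names assume the SD1.5 subfolders from the storage step above
# (on Windows, ComfyUI may list them with a backslash separator).
CHECKPOINT = "SD1.5/dreamshaper_8.safetensors"
CONTROLNET = "SD1.5/control_v11f1p_sd15_openpose.pth"


def build_preprocess_workflow(image_name: str, positive: str, negative: str,
                              seed: int = 0) -> Dict[str, dict]:
    """Hypothetical helper: Load Image -> OpenPose Pose -> Apply ControlNet -> KSampler."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": CHECKPOINT}},
        "2": {"class_type": "CLIPTextEncode", "inputs": {"text": positive, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode", "inputs": {"text": negative, "clip": ["1", 1]}},
        "4": {"class_type": "LoadImage", "inputs": {"image": image_name}},
        # Assumed class name and inputs for the plugin's OpenPose Pose node;
        # other plugin versions may expect additional inputs.
        "5": {"class_type": "OpenposePreprocessor",
              "inputs": {"image": ["4", 0], "detect_hand": "enable", "detect_body": "enable",
                         "detect_face": "enable", "resolution": 512}},
        "6": {"class_type": "ControlNetLoader", "inputs": {"control_net_name": CONTROLNET}},
        # Apply ControlNet conditions both prompts on the skeleton image from node 5.
        "7": {"class_type": "ControlNetApplyAdvanced",
              "inputs": {"positive": ["2", 0], "negative": ["3", 0], "control_net": ["6", 0],
                         "image": ["5", 0], "strength": 1.0,
                         "start_percent": 0.0, "end_percent": 1.0}},
        "8": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 512, "height": 768, "batch_size": 1}},
        "9": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "seed": seed, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "positive": ["7", 0], "negative": ["7", 1],
                         "latent_image": ["8", 0], "denoise": 1.0}},
        "10": {"class_type": "VAEDecode", "inputs": {"samples": ["9", 0], "vae": ["1", 2]}},
        "11": {"class_type": "SaveImage",
               "inputs": {"images": ["10", 0], "filename_prefix": "openpose"}},
    }


def dangling_links(workflow: Dict[str, dict]) -> List[Tuple[str, str]]:
    """Hypothetical helper: (node_id, input_name) pairs linking to a node id that doesn't exist."""
    problems = []
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            is_link = isinstance(value, list) and len(value) == 2 and isinstance(value[0], str)
            if is_link and value[0] not in workflow:
                problems.append((node_id, name))
    return problems
```

The key difference from the first workflow is node 5. Apply ControlNet receives the preprocessor's skeleton image, not the loaded image itself.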

5.1 Main Nodes Explanation

The following are ComfyUI Core nodes; their documentation is available on this website:

- Apply ControlNet node: Apply ControlNet

- Load ControlNet model: ControlNet Loader

5.2 ComfyUI ControlNet Auxiliary Preprocessors Nodes

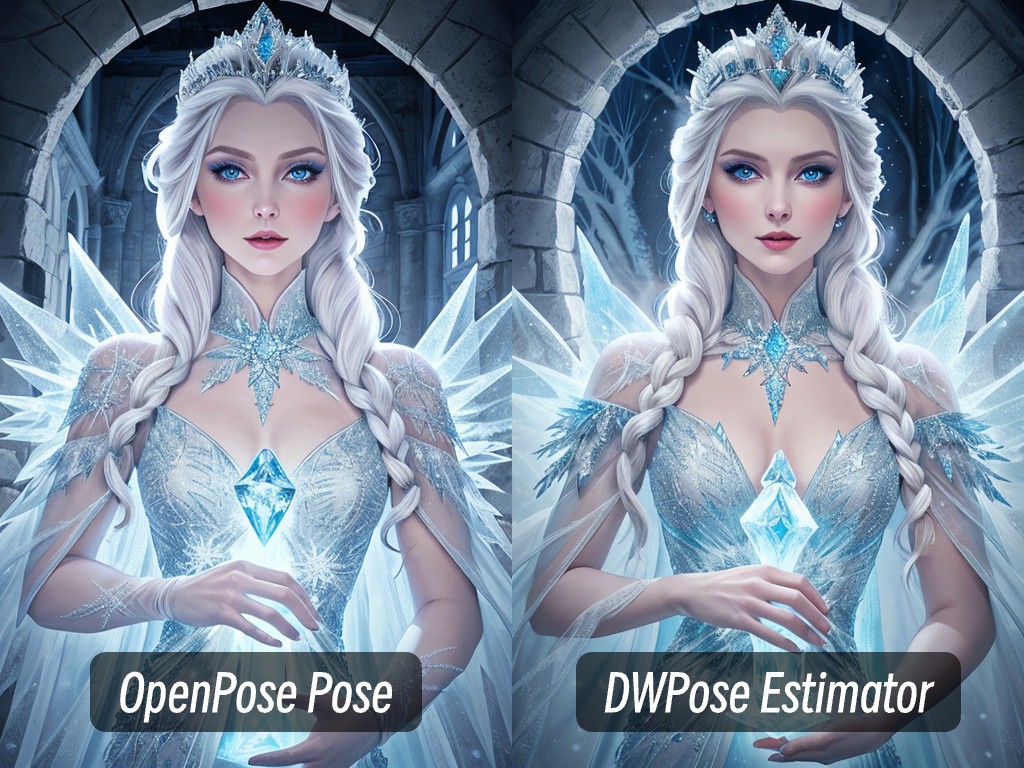

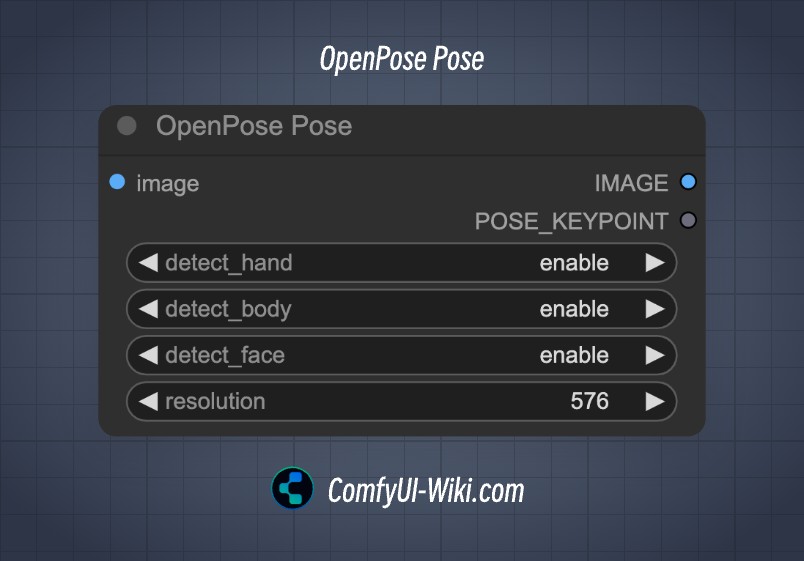

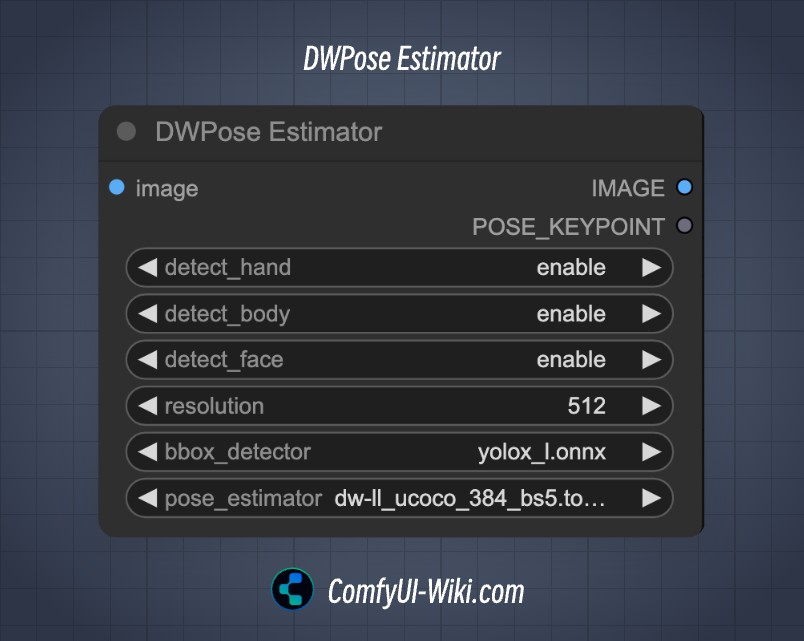

For pose detection, the plugin provides two nodes: the OpenPose Pose node and the DWPose Estimator node. Both extract hand, body, and facial pose information from images and generate skeleton diagrams.

The DWPose Estimator node is based on the DWPose pose detection algorithm, while the OpenPose Pose node is based on the OpenPose algorithm. The workflow I provided uses the OpenPose Pose node. Once it runs successfully, you can try the DWPose Estimator node to see the difference.

OpenPose Pose Node

| Input Parameter | Description | Parameter Options |
|---|---|---|
| images | Input image | - |
| detect_hand | Whether to detect hands | enable / disable |
| detect_face | Whether to detect faces | enable / disable |
| detect_body | Whether to detect bodies | enable / disable |
| resolution | Output image resolution | - |

| Output Parameter | Description | Parameter Options |
|---|---|---|
| image | Processed output image | - |
| POSE_KEYPOINT | Skeleton points | - |
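The POSE_KEYPOINT output is useful if you want to post-process poses yourself. As far as I know, the plugin emits it in the OpenPose JSON style:

- The output is a list with one entry per image.
- Each entry holds a `people` list.
- Each person's `pose_keypoints_2d` field is a flat `[x, y, confidence, ...]` array.

Treat that layout as an assumption and inspect your own output first. The sketch below only shows how such flat arrays are typically unpacked, and its helper names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# (x, y, confidence), or None for an undetected point.
Keypoint = Optional[Tuple[float, float, float]]


def parse_flat_keypoints(flat: List[float], min_confidence: float = 0.0) -> List[Keypoint]:
    """Hypothetical helper: split [x1, y1, c1, x2, y2, c2, ...] into (x, y, c) triples.

    Points whose confidence is not above min_confidence are treated as undetected.
    """
    if len(flat) % 3 != 0:
        raise ValueError("keypoint list length must be a multiple of 3")
    points: List[Keypoint] = []
    for i in range(0, len(flat), 3):
        x, y, c = flat[i], flat[i + 1], flat[i + 2]
        points.append((x, y, c) if c > min_confidence else None)
    return points


def body_poses(pose_keypoint: List[Dict]) -> List[List[List[Keypoint]]]:
    """Hypothetical helper: per image, one parsed body keypoint list per detected person.

    Assumes the OpenPose-style layout described above; a missing field counts as empty.
    """
    result = []
    for frame in pose_keypoint:
        people = frame.get("people", [])
        result.append([parse_flat_keypoints(p.get("pose_keypoints_2d") or []) for p in people])
    return result
```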

DWPose Estimator Node

| Input Parameter | Description | Parameter Options |
|---|---|---|
| images | Input image | - |
| detect_hand | Whether to detect hands | enable / disable |
| detect_face | Whether to detect faces | enable / disable |
| detect_body | Whether to detect bodies | enable / disable |
| resolution | Output image resolution | - |
| bbox_detector | Detector model that locates each person in the image before pose estimation | detector model file |
| pose_estimator | Pose estimation model applied to each detected person | pose model file |

| Output Parameter | Description | Parameter Options |
|---|---|---|
| image | Processed output image | - |
| POSE_KEYPOINT | Skeleton points | - |
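As suggested above, once the workflow runs you can swap in the DWPose Estimator node and compare its skeleton with the OpenPose Pose result. Besides comparing the two images by eye, you can get a rough number by measuring how far corresponding keypoints moved between the two detections.

This is a minimal sketch, assuming that:

- both detections use the same keypoint order;
- both have already been converted to (x, y) pixel points;
- undetected points are stored as None.

The function name is hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Optional[Tuple[float, float]]


def mean_keypoint_distance(a: List[Point], b: List[Point]) -> Optional[float]:
    """Hypothetical helper: mean distance between keypoints detected in both poses.

    Returns None when the two poses share no detected keypoint.
    """
    if len(a) != len(b):
        raise ValueError("poses must use the same keypoint layout")
    distances = [
        math.dist(pa, pb)
        for pa, pb in zip(a, b)
        if pa is not None and pb is not None
    ]
    return sum(distances) / len(distances) if distances else None
```

Keypoints that either detector missed are ignored, so also compare how many points each node found.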