Load LoRA

This node automatically detects LoRA models in the LoRA folder, including its subfolders. The corresponding model path is `ComfyUI\models\loras`. For more information, refer to Installing LoRA Models.
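The folder scan can be pictured with a short Python sketch. It is not ComfyUI's actual implementation. The function name `list_lora_files` and the extension set are assumptions for illustration. The sketch walks the LoRA folder recursively and returns each file's path relative to that folder, which is how files in subfolders can be told apart in the `lora_name` list.

```python
from pathlib import Path

# Assumed set of LoRA file extensions (illustrative; ComfyUI's own list may differ)
LORA_EXTENSIONS = {".safetensors", ".ckpt", ".pt", ".pth", ".bin"}


def list_lora_files(lora_root):
    """Hypothetical helper: list LoRA files under lora_root, including subfolders."""
    root = Path(lora_root)
    names = []
    # rglob("*") visits every file and folder below root, at any depth
    for path in root.rglob("*"):
        if path.is_file() and path.suffix.lower() in LORA_EXTENSIONS:
            # Relative name such as "styles/ink.safetensors" (forward slashes via as_posix)
            names.append(path.relative_to(root).as_posix())
    return sorted(names)
```

For example, `list_lora_files(Path("ComfyUI") / "models" / "loras")` would return the relative names of every LoRA file in that folder tree.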

The LoRA Loader node is primarily used to load LoRA models. You can think of LoRA models as filters that can give your images specific styles, content, and details:

- Apply specific artistic styles (such as ink painting)

- Add the characteristics of specific characters (such as game characters)

- Add specific details to the image

All of these can be achieved with LoRA.

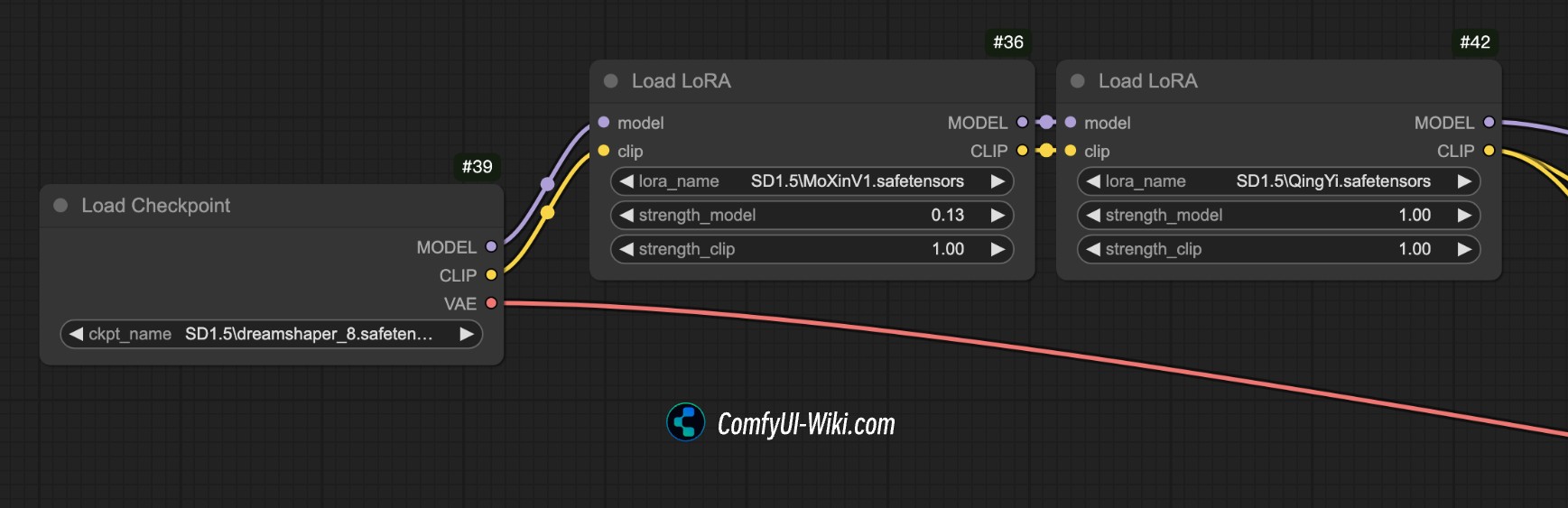

To load multiple LoRA models, chain several Load LoRA nodes together: connect the MODEL and CLIP outputs of one node to the model and clip inputs of the next.
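Such a chain can also be built programmatically. The sketch below assumes ComfyUI's API-format workflow JSON (node IDs as string keys, links written as `[node_id, output_index]`) and the built-in `CheckpointLoaderSimple` and `LoraLoader` class types. The helper `chain_loras` and all file names are hypothetical.

```python
import json


def chain_loras(ckpt_name, loras):
    """Hypothetical helper: build an API-format workflow with one LoraLoader per LoRA.

    loras is a list of (lora_name, strength_model, strength_clip) tuples.
    Returns the workflow dict and the links to the final MODEL and CLIP outputs.
    """
    workflow = {
        "1": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": ckpt_name}},
    }
    # CheckpointLoaderSimple outputs: 0 = MODEL, 1 = CLIP, 2 = VAE
    model_src, clip_src = ["1", 0], ["1", 1]
    for i, (lora_name, strength_model, strength_clip) in enumerate(loras, start=2):
        node_id = str(i)
        workflow[node_id] = {
            "class_type": "LoraLoader",
            "inputs": {
                "model": model_src,
                "clip": clip_src,
                "lora_name": lora_name,
                "strength_model": strength_model,
                "strength_clip": strength_clip,
            },
        }
        # LoraLoader outputs: 0 = MODEL, 1 = CLIP; the next node in the chain reads these
        model_src, clip_src = [node_id, 0], [node_id, 1]
    return workflow, model_src, clip_src


workflow, model_out, clip_out = chain_loras(
    "sd15_base.safetensors",  # hypothetical checkpoint file
    [("ink_style.safetensors", 0.8, 0.8), ("game_character.safetensors", 0.6, 0.6)],  # hypothetical LoRAs
)
print(json.dumps(workflow, indent=2))
```

The sampler and text-encode nodes, which this sketch omits, would then connect to `model_out` and `clip_out`, the outputs of the last LoRA in the chain.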

Input Types

| Parameter Name | Data Type | Function |
|---|---|---|
| model | MODEL | The base model the LoRA is applied to |
| clip | CLIP | The CLIP model the LoRA is applied to |
| lora_name | COMBO[STRING] | The name of the LoRA model to load |
| strength_model | FLOAT | Strength of the LoRA applied to the model. Range: -100.0 to 100.0; values between 0 and 1 are typical for everyday image generation. Higher values produce a more pronounced effect |
| strength_clip | FLOAT | Strength of the LoRA applied to the CLIP model. Range: -100.0 to 100.0; values between 0 and 1 are typical for everyday image generation. Higher values produce a more pronounced effect on how prompts are encoded |
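To see what the two strength values do, the sketch below applies a LoRA update to a toy weight matrix as W' = W + strength · (up · down). It is a simplified illustration, not ComfyUI's code. It omits the alpha/rank scaling factor that many LoRA files carry, and the names `lora_up` and `lora_down` are illustrative. The same rule applies separately to the model's weights (scaled by strength_model) and to CLIP's weights (scaled by strength_clip).

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(weight, lora_up, lora_down, strength):
    """Return weight + strength * (lora_up @ lora_down) as a new matrix; weight is not modified."""
    delta = matmul(lora_up, lora_down)  # low-rank update with the same shape as weight
    return [
        [w + strength * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Toy 2x2 weight with a rank-1 LoRA (up is 2x1, down is 1x2)
W = [[1.0, 0.0], [0.0, 1.0]]
up = [[1.0], [2.0]]
down = [[0.5, 0.5]]

for s in (0.0, 0.5, 1.0, -1.0):
    print(s, apply_lora(W, up, down, s))
```

A strength of 0 returns the original weights, 1 adds the full update, and a negative strength subtracts the same update.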

Output Types

| Parameter Name | Data Type | Function |
|---|---|---|
| model | MODEL | The model with LoRA adjustments applied |
| clip | CLIP | The CLIP model with LoRA adjustments applied |

Additional LoRA Information

- LoRA Flexibility:
  - LoRA adapts an existing model to a specific style by training a small set of additional weights instead of retraining the entire model. It is an “artist’s tool” that gives more precise control over the look of an image.

- Resource Efficiency:
  - Creating a LoRA requires far fewer computational resources than full model training, avoiding the time cost of large-scale training. Only the small set of weights needed for the target effect is trained, which makes the process both fast and resource-efficient.

- Diverse Applications:
  - Beyond changing a character's appearance, LoRA can adjust backgrounds, color tones, lighting, weather, and other elements, adding rich detail to your work.

- Customization and Compatibility:
  - You can create custom LoRA models for your own needs and combine multiple LoRA models to achieve more complex effects.

- Adaptation to Different Domains:
  - LoRA is not limited to artistic styles and character features; it can also set the mood and atmosphere, change the lighting environment, add details of specific objects, and more.
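The resource savings follow from the parameter count. A rank-r LoRA for a d_out × d_in weight trains two small matrices with r · (d_out + d_in) entries in total, instead of all d_out · d_in entries of the full weight. The sketch below compares the two counts; the 768 × 768 size and rank 8 are illustrative values, not taken from a specific model.

```python
def lora_param_count(d_out, d_in, rank):
    """Parameters in a rank-r LoRA update: up is d_out x rank, down is rank x d_in."""
    return rank * (d_out + d_in)


def full_param_count(d_out, d_in):
    """Parameters updated when fine-tuning the whole d_out x d_in weight."""
    return d_out * d_in


full = full_param_count(768, 768)
lora = lora_param_count(768, 768, rank=8)
print(full, lora, f"{lora / full:.2%}")
```

For these values the LoRA trains 12,288 parameters instead of 589,824, about 2% of the full matrix.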

Examples:

- Ink Painting Style: LoRA models can adjust color rendering and brush strokes to simulate the layered feel of ink paintings.

- Game Character Features: LoRA can adjust character details such as clothing and hairstyles to reproduce the look of a game character.

Usage Examples and Workflows

- SD1.5 Basic LoRA Workflow

- ComfyUI Basic LoRA Workflow

- ComfyUI Official Original Workflow