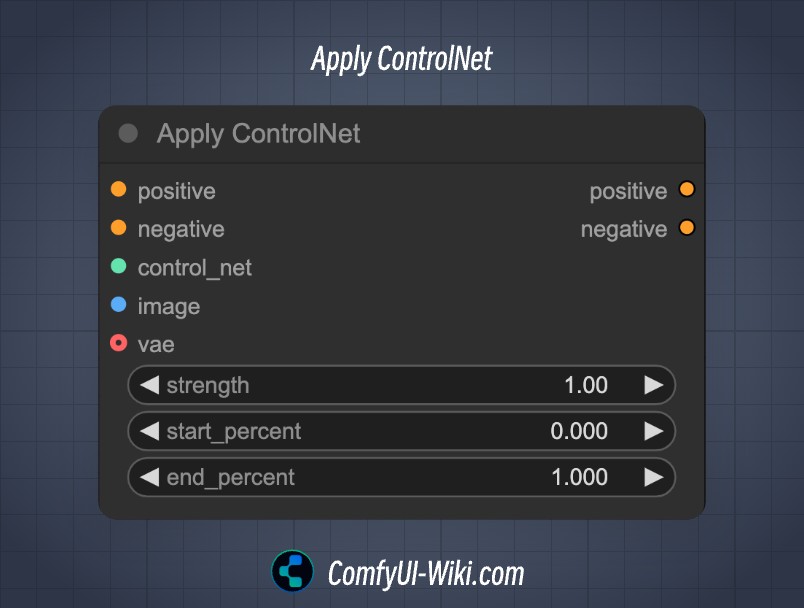

Apply ControlNet

This documentation is for the original Apply ControlNet(Advanced) node. The earliest Apply ControlNet node has been renamed to Apply ControlNet(Old). For compatibility reasons, you may still see the Apply ControlNet(Old) node in many workflows downloaded from comfyui.org, but it can no longer be found through search or the node list. Please use the Apply ControlNet node instead.

This node applies a ControlNet to a given image and conditioning, adjusting the image’s attributes based on the control network’s parameters and the specified strength. Typical control types include Depth, OpenPose, Canny, and HED.

Documentation

- Class name: `ControlNetApply`
- Category: `conditioning`
- Output node: `False`

Using ControlNet requires preprocessed input images. ComfyUI's built-in nodes do not include preprocessors or ControlNet models, so first install the ControlNet preprocessors and download the corresponding ControlNet models.

Input Types

| Parameter | Data Type | Function |
|---|---|---|
| positive | CONDITIONING | Positive conditioning data, from CLIP Text Encoder or other conditioning inputs |
| negative | CONDITIONING | Negative conditioning data, from CLIP Text Encoder or other conditioning inputs |
| control_net | CONTROL_NET | The ControlNet model to apply, typically input from ControlNet Loader |
| image | IMAGE | Image for ControlNet application, needs to be processed by a preprocessor |
| vae | VAE | VAE model input |
| strength | FLOAT | Controls the strength of network adjustments, value range 0 |
| start_percent | FLOAT | Value 0.000~1.000, determines when to start applying ControlNet as a percentage, e.g., 0.2 means ControlNet guidance will start influencing image generation at 20% of the diffusion process |
| end_percent | FLOAT | Value 0.000~1.000, determines when to stop applying ControlNet as a percentage, e.g., 0.8 means ControlNet guidance will stop influencing image generation at 80% of the diffusion process |
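To make the `start_percent` / `end_percent` window concrete, here is a minimal Python sketch. It assumes, purely for illustration, that progress through the diffusion process can be approximated as `step / total_steps`; ComfyUI itself may map these percentages differently internally. The function name `active_steps` is hypothetical and not part of ComfyUI.

```python
def active_steps(total_steps: int, start_percent: float, end_percent: float) -> list[int]:
    """Return the 0-based sampling steps at which ControlNet guidance would apply.

    Assumption (illustrative only): progress is approximated as step / total_steps.
    """
    if not 0.0 <= start_percent <= end_percent <= 1.0:
        raise ValueError("expected 0.0 <= start_percent <= end_percent <= 1.0")
    return [
        step
        for step in range(total_steps)
        if start_percent <= step / total_steps < end_percent
    ]


# With 20 steps, start_percent=0.2 and end_percent=0.8 cover steps 4 through 15.
steps = active_steps(total_steps=20, start_percent=0.2, end_percent=0.8)
print(steps[0], steps[-1], len(steps))  # prints: 4 15 12
```

Under this approximation, the first 20% of steps run without ControlNet guidance and the last 20% are left free to refine details.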

Output Types

| Parameter | Data Type | Function |
|---|---|---|
| positive | CONDITIONING | Positive conditioning data processed by ControlNet, can be output to the next ControlNet or K Sampler nodes |
| negative | CONDITIONING | Negative conditioning data processed by ControlNet, can be output to the next ControlNet or K Sampler nodes |
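Because both outputs can feed another Apply ControlNet node, several ControlNets can be chained. The sketch below builds a fragment of a workflow as a Python dictionary in the style of ComfyUI's API-format JSON, where a link is written as `[node_id, output_index]`. Everything specific here is an assumption for illustration: the node IDs, the `class_type` string `ControlNetApplyAdvanced`, the output indices (0 for positive, 1 for negative, following the table order above), and the helper names `link` and `apply_controlnet`. Verify them against your own installation before relying on them.

```python
import json


def link(node_id: str, output_index: int = 0) -> list:
    """Reference another node's output as [node_id, output_index]."""
    return [node_id, output_index]


def apply_controlnet(positive, negative, control_net, image, vae,
                     strength=1.0, start_percent=0.0, end_percent=1.0,
                     class_type="ControlNetApplyAdvanced"):
    """Build one Apply ControlNet node entry (class_type is an assumption)."""
    return {
        "class_type": class_type,
        "inputs": {
            "positive": positive,
            "negative": negative,
            "control_net": control_net,
            "image": image,
            "vae": vae,
            "strength": strength,
            "start_percent": start_percent,
            "end_percent": end_percent,
        },
    }


# Hypothetical node IDs: "6"/"7" text encoders, "4" checkpoint loader (VAE output),
# "20"/"22" ControlNet loaders, "21"/"23" preprocessed hint images.
workflow_fragment = {
    # First ControlNet takes conditioning straight from the text encoders.
    "10": apply_controlnet(link("6"), link("7"), link("20"), link("21"),
                           link("4", 2), strength=0.8, end_percent=0.8),
    # Second ControlNet consumes the positive/negative outputs of node "10".
    "11": apply_controlnet(link("10", 0), link("10", 1), link("22"), link("23"),
                           link("4", 2), strength=0.5),
}

print(json.dumps(workflow_fragment, indent=2))
```

The sampler would then take its positive and negative conditioning from node `"11"`, so both ControlNets influence the result.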

If you want to use T2I-Adapter style models, please use the Apply Style Model node instead.

ComfyUI ControlNet Usage Examples

Visit the following pages for examples:

- ComfyUI OpenPose ControlNet Usage Example

- ComfyUI Depth ControlNet Usage Example

- ComfyUI Canny ControlNet Usage Example

- ComfyUI Multi ControlNet Usage Example

Related Resources

- Model Resources: controlNet Model Resources Download

- Preprocessor Plugin: ComfyUI ControlNet Auxiliary Preprocessors

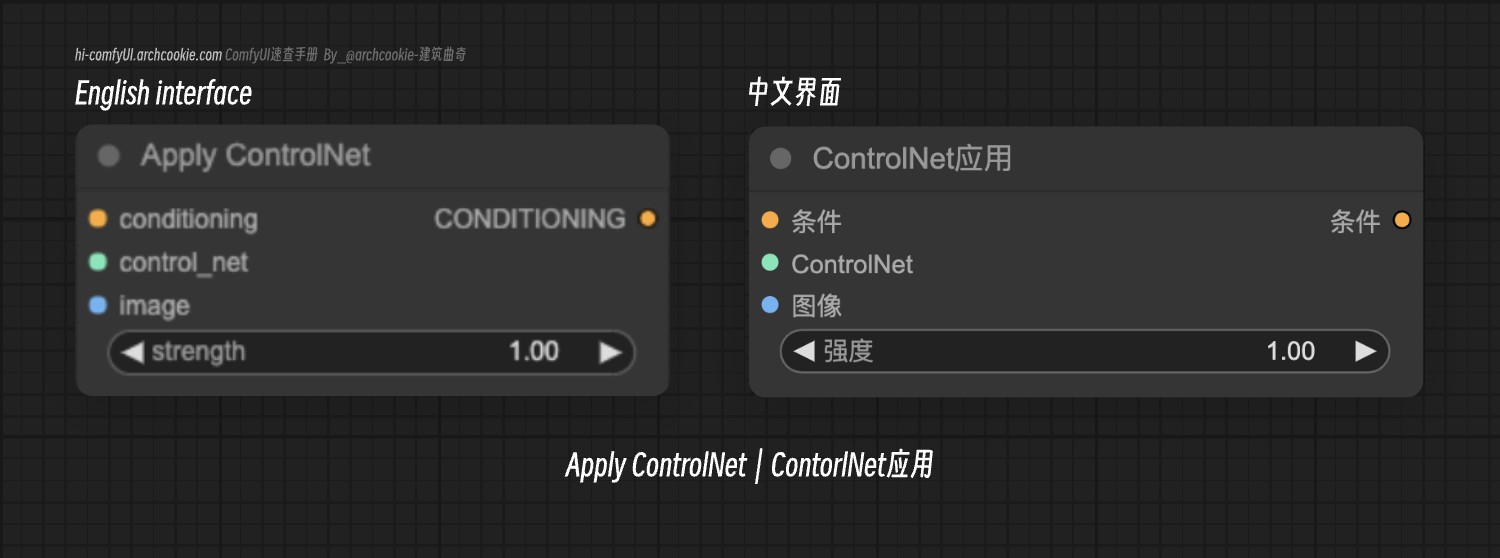

Apply ControlNet (OLD) Node Description

This is an early version of the Apply ControlNet node. The node options have since been updated, but for compatibility, downloaded workflows that use the old version will still display this node when opened in ComfyUI. You can switch to the new Apply ControlNet node.

Apply ControlNet (OLD) Input Types

| Parameter | Data Type | Function |
|---|---|---|
| conditioning | CONDITIONING | Conditioning data from CLIP Text Encoder or other conditioning inputs (such as input from another conditioning node) |
| control_net | CONTROL_NET | The ControlNet model to apply, typically input from ControlNet Loader |
| image | IMAGE | Image for ControlNet application, needs to be processed by a preprocessor |
| strength | FLOAT | Controls the strength of network adjustments, value range 0 |

Apply ControlNet (OLD) Output Types

| Parameter | Data Type | Function |
|---|---|---|
| conditioning | CONDITIONING | Conditioning data processed by ControlNet, can be output to the next ControlNet or K Sampler nodes |