Wan2.2 Animate is a character animation model that covers two tasks in video: character replacement and motion transfer.

It can animate a static character image by following the body movements and facial expressions in a reference video. It can also replace the character in an existing video while keeping the original scene and lighting.

Key Capabilities

- Dual Operation Modes: Switch between character animation and character replacement within the same workflow

- Skeleton-Based Motion Control: Uses pose (skeleton) estimation to reproduce the movement from the reference video

- Expression Fidelity: Preserves subtle facial expressions and micro-movements from the source video

- Environmental Consistency: Keeps the original video's lighting and color grading so the new character blends in naturally

- Extended Video Support: Generates longer videos segment by segment, with each segment continuing the motion of the previous one

About the Wan2.2 Animate workflows

This tutorial covers two workflows:

- ComfyUI Official Native Version (Core Nodes)

- Kijai ComfyUI-WanVideoWrapper version [To be updated]


Wan2.2 Animate ComfyUI Native Workflow (Core Nodes)

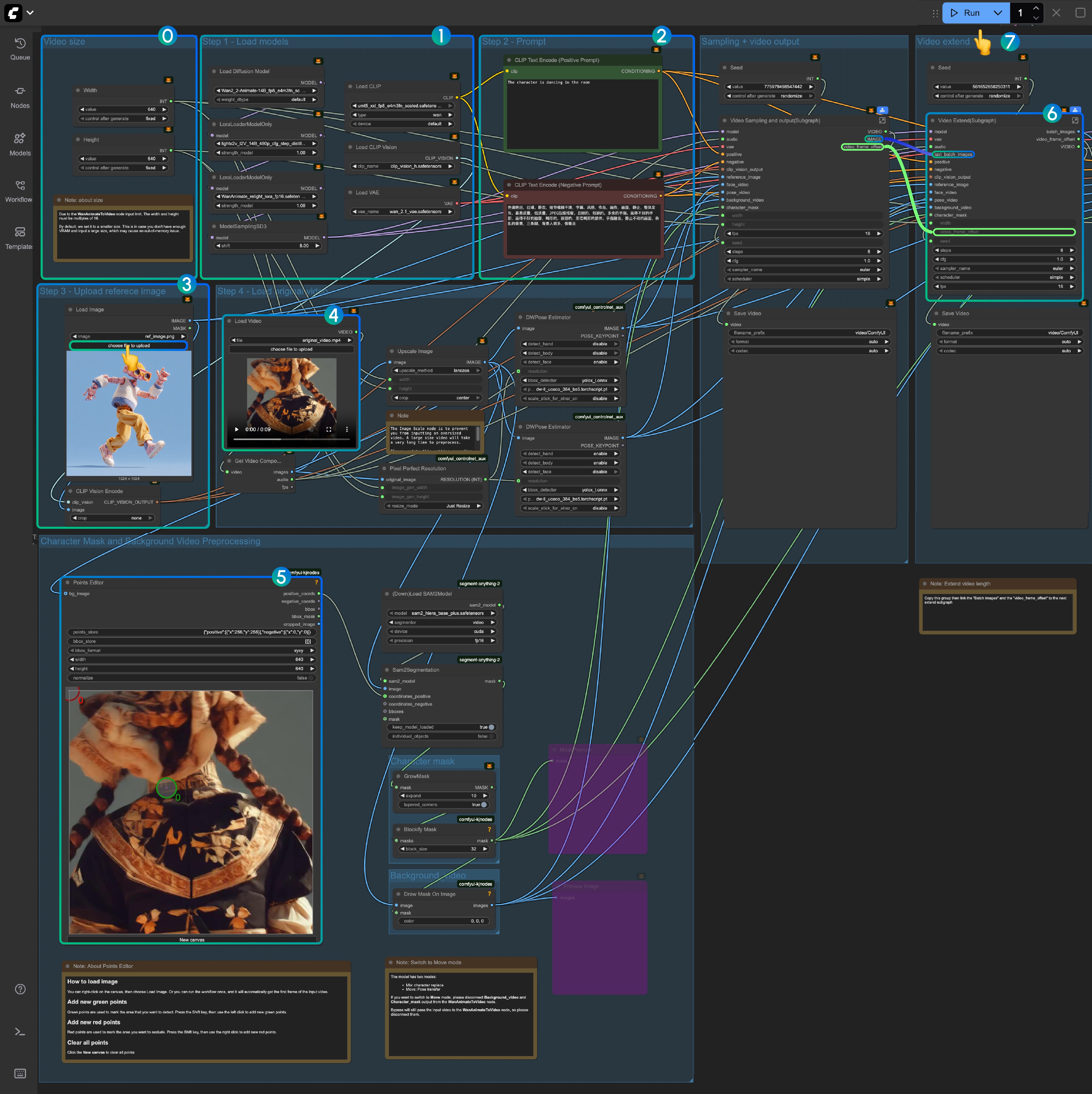

1. Workflow Setup

Begin by downloading the workflow file and importing it into ComfyUI.

Required Input Materials:

- Reference Image

- Input Video

2. Model Downloads

Diffusion Models

- Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors - FP8-quantized version from Kijai's repository, with a smaller memory footprint

- wan2.2_animate_14B_bf16.safetensors - Original BF16 model weights

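CLIP Vision Models

- clip_vision_h.safetensors

LoRA Models

- lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors - Step-distillation LoRA for 4-step generation

VAE Models

- wan_2.1_vae.safetensors

Text Encoders

- umt5_xxl_fp8_e4m3fn_scaled.safetensors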

```text
ComfyUI/
├───📂 models/
│   ├───📂 diffusion_models/
│   │   ├─── Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors
│   │   └─── wan2.2_animate_14B_bf16.safetensors
│   ├───📂 loras/
│   │   └─── lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors
│   ├───📂 text_encoders/
│   │   └─── umt5_xxl_fp8_e4m3fn_scaled.safetensors
│   ├───📂 clip_vision/
│   │   └─── clip_vision_h.safetensors
│   └───📂 vae/
│       └─── wan_2.1_vae.safetensors
```

3. Custom Node Installation

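To run the complete workflow, install the following custom nodes with ComfyUI-Manager or manually:

Required Extensions:

- ComfyUI-KJNodes (provides the Points Editor node)

- comfyui_controlnet_aux (provides the DWPose Estimator node)

For installation guidance, refer to the How to install custom nodes guide.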
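Before moving on to configuration, it can help to confirm that the model files from step 2 are where ComfyUI expects them. The script below is a minimal sketch under a few assumptions: the ComfyUI root is passed on the command line (default `ComfyUI`), the two diffusion models are treated as interchangeable so either one satisfies the check, and the CLIP vision file is looked up in ComfyUI's default `clip_vision` folder. `missing_models` and `check_models.py` are hypothetical names, not part of ComfyUI.

```python
from pathlib import Path

# Model layout from "2. Model Downloads". Each inner tuple lists acceptable
# alternatives; finding any one of them satisfies that requirement.
# Treating the FP8 and BF16 diffusion models as interchangeable is an assumption.
REQUIRED = {
    "diffusion_models": [(
        "Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors",
        "wan2.2_animate_14B_bf16.safetensors",
    )],
    "loras": [("lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors",)],
    "text_encoders": [("umt5_xxl_fp8_e4m3fn_scaled.safetensors",)],
    "clip_vision": [("clip_vision_h.safetensors",)],
    "vae": [("wan_2.1_vae.safetensors",)],
}


def missing_models(comfyui_root: str) -> list[str]:
    """Return one description per requirement that has no matching file."""
    models_dir = Path(comfyui_root) / "models"
    missing = []
    for folder, groups in REQUIRED.items():
        for alternatives in groups:
            if not any((models_dir / folder / name).is_file() for name in alternatives):
                missing.append(f"{folder}/: " + " or ".join(alternatives))
    return missing


if __name__ == "__main__":
    import sys

    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    problems = missing_models(root)
    for line in problems:
        print("missing:", line)
    print("all models found" if not problems else f"{len(problems)} requirement(s) missing")
```

Save it as, for example, `check_models.py` and run `python check_models.py /path/to/ComfyUI`. It only checks that the files exist, not that they are complete or uncorrupted downloads.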

4. Workflow Configuration

Wan2.2 Animate operates in two distinct modes:

- Mix Mode: Character replacement within existing video content

- Move Mode: Motion transfer to animate static character images

4.1 Mix Mode Operation

Setup Guidelines:

- Initial Testing: For your first run, use small video dimensions to confirm the workflow fits in your VRAM. Video width and height must be multiples of 16, a requirement of the `WanAnimateToVideo` node.

- Model Verification: Confirm that all required models are loaded correctly.

- Prompt Customization: Edit the text prompts to suit your use case.

- Reference Image: Upload the image of your target character.

- Input Video Processing: Start with the provided sample videos. The DWPose Estimator from comfyui_controlnet_aux automatically converts the input video into pose and facial control sequences.

- Points Editor Configuration: The `Points Editor` node from KJNodes needs the first frame of the video loaded before you can use it. Run the workflow once, or upload the first frame manually.

- Video Extension: The "Video Extend" group extends the output length.

  - Each extension adds 77 frames (approximately 4.8 seconds)

  - Skip this group for videos shorter than 5 seconds

  - For longer outputs, duplicate the group and connect each copy's `batch_images` and `video_frame_offset` outputs to the next copy in sequence

- Execution: Click Run or press Ctrl (Cmd) + Enter to start generation.
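The multiple-of-16 rule is easy to break when scaling down footage for a first test run. The sketch below shows one way to compute a safe size: scale the source so its longer side fits a cap, keep the aspect ratio, then round each side down to a multiple of 16. The `max_side=832` cap is an arbitrary illustrative value, not a setting from the workflow, and the function names are hypothetical.

```python
def snap_down(value: int, multiple: int = 16) -> int:
    """Round value down to the nearest multiple of `multiple`."""
    if value < multiple:
        raise ValueError(f"{value} is smaller than {multiple}")
    return value - value % multiple


def fit_for_test_run(src_w: int, src_h: int, max_side: int = 832) -> tuple[int, int]:
    """Scale (src_w, src_h) so the longer side is at most max_side,
    keeping the aspect ratio, then snap both sides down to multiples of 16."""
    scale = min(1.0, max_side / max(src_w, src_h))
    return snap_down(round(src_w * scale)), snap_down(round(src_h * scale))


if __name__ == "__main__":
    print(fit_for_test_run(1920, 1080))  # (832, 464)
```

Rounding down rather than to the nearest multiple keeps the result within the cap; the aspect ratio shifts slightly (1080p becomes 832 x 464 rather than 832 x 468), so you may want to crop the video to match.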

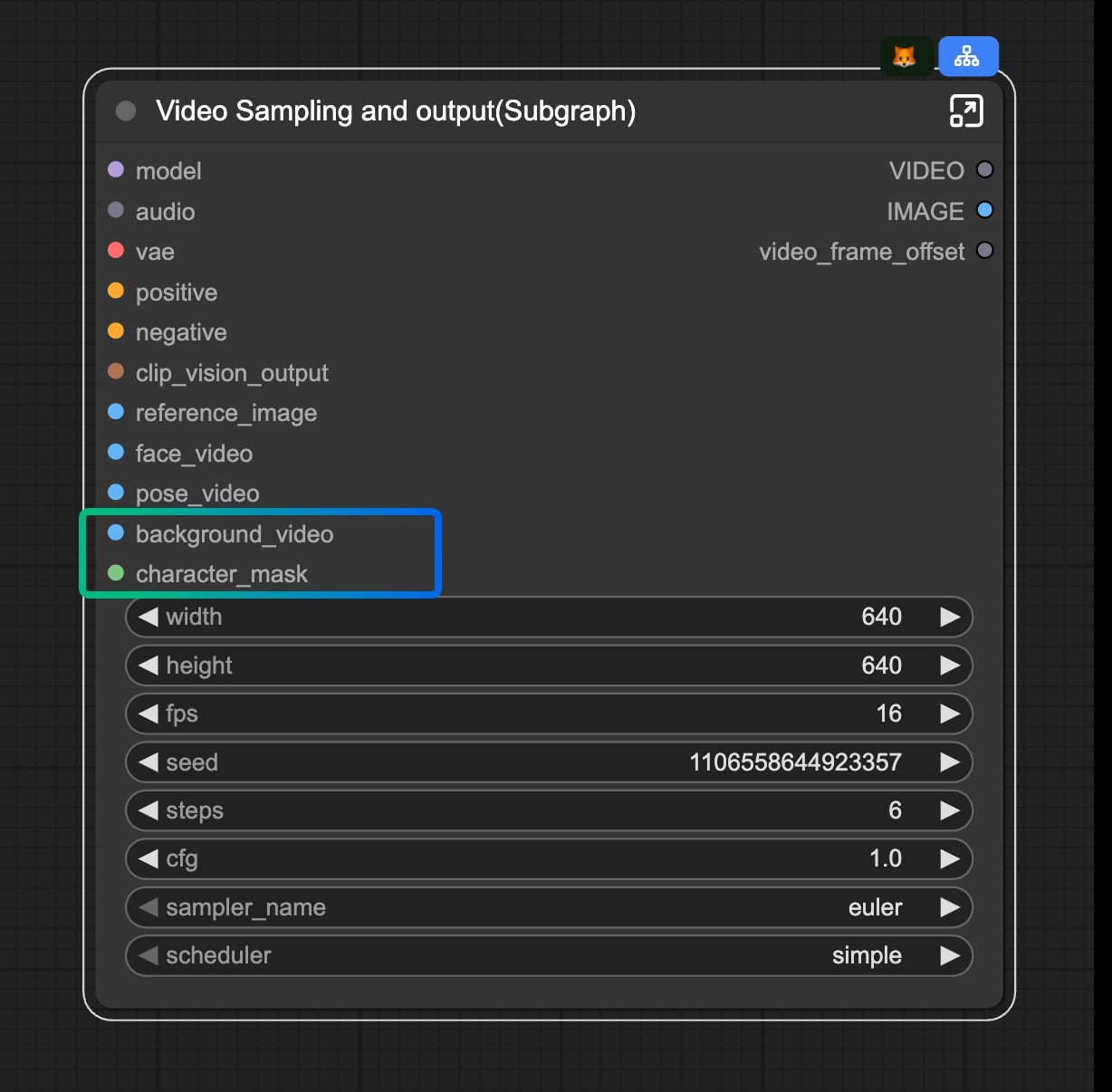
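To decide how many "Video Extend" groups to chain, you can work backward from the input length. This sketch rests on two assumptions not stated in the workflow itself: the output runs at about 16 fps (consistent with 77 frames being roughly 4.8 seconds), and the base generation already produces one 77-frame segment, which matches the advice to skip extensions for clips under 5 seconds. The function names are hypothetical.

```python
import math

SEGMENT_FRAMES = 77  # from the guide: each "Video Extend" group adds 77 frames
FPS = 16             # assumption: 77 frames ~ 4.8 s implies roughly 16 fps


def extensions_needed(video_seconds: float, fps: int = FPS,
                      segment: int = SEGMENT_FRAMES) -> int:
    """Number of Video Extend groups to chain for a clip of video_seconds.

    Assumes the base generation already covers one segment, so only the
    frames beyond it need extension groups."""
    total_frames = math.ceil(video_seconds * fps)
    remaining = max(0, total_frames - segment)
    return math.ceil(remaining / segment)


def covered_seconds(extensions: int, fps: int = FPS,
                    segment: int = SEGMENT_FRAMES) -> float:
    """Approximate output length: the base segment plus `extensions` groups."""
    return (1 + extensions) * segment / fps


if __name__ == "__main__":
    for seconds in (4, 10, 30):
        n = extensions_needed(seconds)
        print(f"{seconds:>3} s input -> {n} extension(s), ~{covered_seconds(n):.1f} s output")
```

Because extensions come in whole 77-frame steps, the output will usually run slightly longer than the input; trim the result if you need an exact match.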

4.2 Move Mode Configuration

The workflow uses a subgraph to switch between modes.

Mode Switching: To activate Move mode, disconnect the `background_video` and `character_mask` inputs from the Video Sampling and output subgraph node.

Wan2.2 Animate ComfyUI-WanVideoWrapper Workflow

[To be updated]