Flux ControlNet

This article compiles the ControlNet models currently available for the Flux ecosystem.

XLabs-AI/flux-controlnet-collections

XLabs-AI/flux-controlnet-collections is a collection of ControlNet checkpoints for the FLUX.1-dev model. The repository is maintained by XLabs-AI (the FLUX.1-dev base model itself comes from Black Forest Labs) and aims to provide more control options for the Flux ecosystem.

Main features:

-

Supports three ControlNet models:

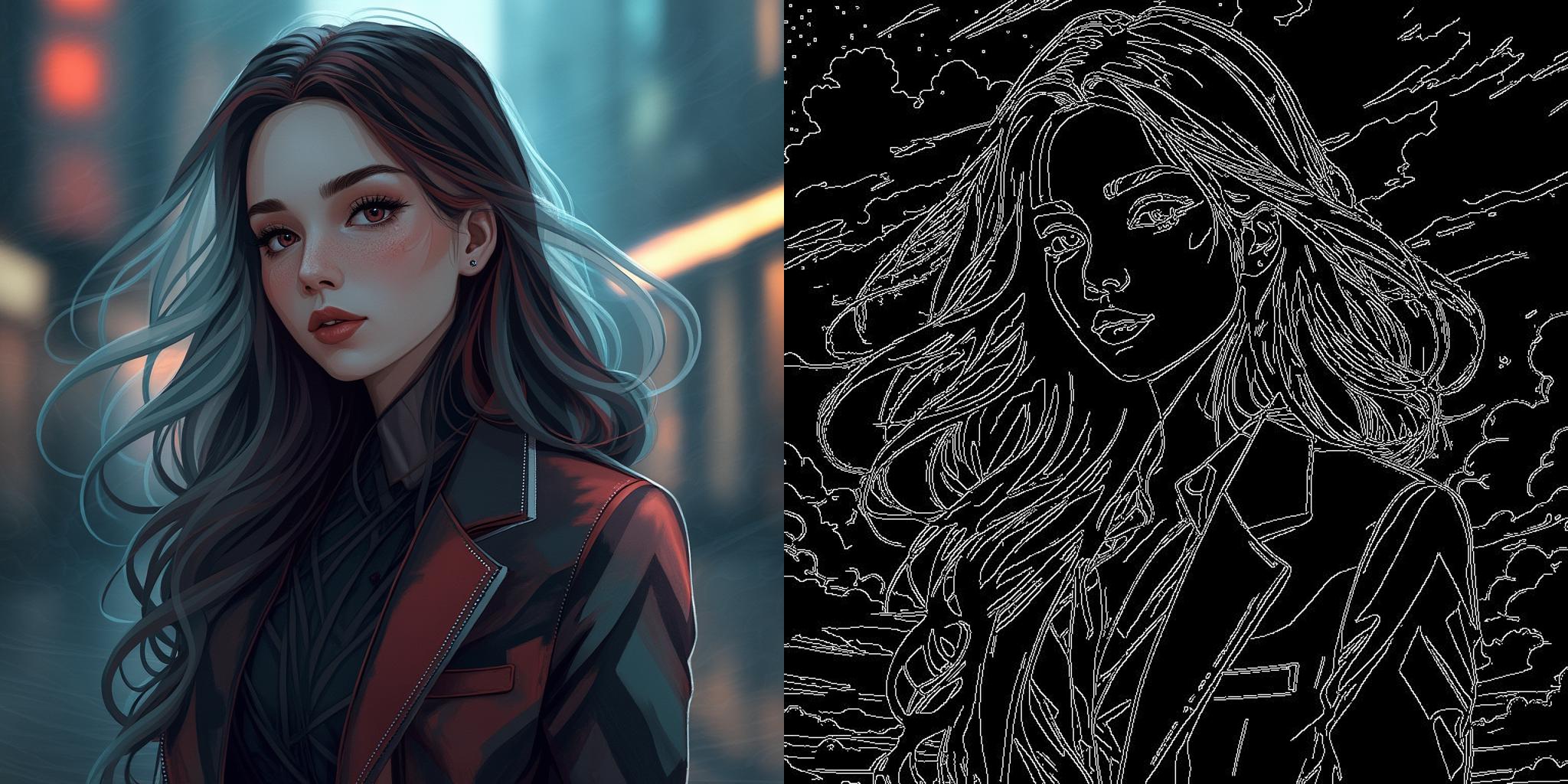

- Canny (edge detection)

- HED (edge detection)

- Depth (depth map, based on Midas)

-

All models are trained at 1024x1024 resolution, suitable for generating 1024x1024 resolution images.

-

Provides v3 version, which is an improved and more realistic version that can be used directly in ComfyUI.

-

Offers custom nodes and workflows for ComfyUI, making it easy for users to get started quickly.

-

Provides sample images and generation results, showcasing the model's effects.

Usage:

- Run the main.py script from the official repository.

- Use the provided custom nodes and workflows in ComfyUI. The official workflows are available at https://huggingface.co/XLabs-AI/flux-controlnet-collections/tree/main/workflows

- Use the Gradio demo interface.

License:

These model weights follow the FLUX.1 [dev] non-commercial license.

Links:

- Model repository: https://huggingface.co/XLabs-AI/flux-controlnet-collections

- GitHub repository: contains ComfyUI workflows, training scripts, and inference demo scripts.

Here's a list of ControlNet models provided in the XLabs-AI/flux-controlnet-collections repository:

| Model Name | File Size | Upload Date | Description | Model Link | Download Link |
|---|---|---|---|---|---|
| flux-canny-controlnet.safetensors | 1.49 GB | August 30, 2024 | Canny edge detection ControlNet model (initial version) | View | Download |
| flux-canny-controlnet_v2.safetensors | 1.49 GB | August 30, 2024 | Canny edge detection ControlNet model (v2 version) | View | Download |
| flux-canny-controlnet-v3.safetensors | 1.49 GB | August 30, 2024 | Canny edge detection ControlNet model (v3 version) | View | Download |
| flux-depth-controlnet.safetensors | 1.49 GB | August 30, 2024 | Depth map ControlNet model (initial version) | View | Download |
| flux-depth-controlnet_v2.safetensors | 1.49 GB | August 30, 2024 | Depth map ControlNet model (v2 version) | View | Download |
| flux-depth-controlnet-v3.safetensors | 1.49 GB | August 30, 2024 | Depth map ControlNet model (v3 version) | View | Download |
| flux-hed-controlnet.safetensors | 1.49 GB | August 30, 2024 | HED edge detection ControlNet model (initial version) | View | Download |
| flux-hed-controlnet-v3.safetensors | 1.49 GB | August 30, 2024 | HED edge detection ControlNet model (v3 version) | View | Download |
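The files listed above can also be fetched programmatically. The following is a minimal sketch, not an official tool: `download_url` and `fetch` are hypothetical helper names, the URL layout assumes the usual Hugging Face `/resolve/<revision>/<filename>` convention, and the files are assumed to live on the `main` branch.

```python
from urllib.parse import quote

REPO_ID = "XLabs-AI/flux-controlnet-collections"


def download_url(filename: str, repo_id: str = REPO_ID, revision: str = "main") -> str:
    """Build a direct-download URL (assumes the Hugging Face /resolve/ layout)."""
    return f"https://huggingface.co/{repo_id}/resolve/{quote(revision)}/{quote(filename)}"


def fetch(filename: str, repo_id: str = REPO_ID) -> str:
    """Download a file into the local Hugging Face cache and return its path."""
    # Imported lazily so the URL helper works without extra packages;
    # this call needs `pip install huggingface_hub` and network access.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=repo_id, filename=filename)


if __name__ == "__main__":
    print(download_url("flux-canny-controlnet-v3.safetensors"))
```

For ComfyUI, the downloaded file still has to be copied or linked into whichever models folder the XLabs custom nodes expect; check their documentation for the exact location.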

These models let users steer the image generation process more precisely. They are particularly suited to applications that condition generation on edge maps or depth information.

InstantX Flux Union ControlNet

InstantX Flux Union ControlNet is a versatile ControlNet model designed for FLUX.1-dev. It integrates multiple control modes in a single checkpoint, giving users more flexible control over the image generation process.

Main features:

- Multiple control modes: Supports Canny edge detection, Tile, depth map, blur, pose control, and others.

- High performance: Most control modes are rated as highly effective, in particular Canny, Tile, depth map, blur, and pose control.

- Continuous optimization: The development team continues to improve the model's performance and stability.

- Compatibility: Fully compatible with the FLUX.1-dev base model, so it integrates easily into existing FLUX workflows.

- Multi-control inference: Supports using several control modes at once, giving users finer-grained control over image generation.

Usage:

- Single control mode:

  - Load the Union ControlNet model

  - Select the desired control mode (e.g., Canny, depth map, etc.)

  - Set the control image and related parameters

  - Generate the image

- Multi-control mode:

  - Load the Union ControlNet model as FluxMultiControlNetModel

  - Set different control images and parameters for each control mode

  - Apply multiple control modes simultaneously to generate the image
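The two usage patterns above can be sketched with the Diffusers library. This is an illustrative sketch rather than the authors' reference code: the mode indices in `CONTROL_MODES` are taken from the InstantX model card as I recall it and should be verified against the checkpoint you download, `control_kwargs`, `load_pipeline`, and `generate` are hypothetical helper names, and the step count and guidance scale are example values only.

```python
# Mode indices (assumed from the InstantX Union model card; verify before use).
CONTROL_MODES = {"canny": 0, "tile": 1, "depth": 2, "blur": 3,
                 "pose": 4, "gray": 5, "lq": 6}

BASE_MODEL = "black-forest-labs/FLUX.1-dev"
UNION_MODEL = "InstantX/FLUX.1-dev-Controlnet-Union-alpha"


def control_kwargs(controls):
    """Turn [(mode_name, image, scale), ...] into pipeline keyword arguments.

    A single control yields scalar arguments; several yield parallel lists.
    """
    if not controls:
        raise ValueError("at least one control is required")
    modes = [CONTROL_MODES[name] for name, _, _ in controls]
    images = [image for _, image, _ in controls]
    scales = [scale for _, _, scale in controls]
    if len(controls) == 1:
        modes, images, scales = modes[0], images[0], scales[0]
    return {"control_mode": modes, "control_image": images,
            "controlnet_conditioning_scale": scales}


def load_pipeline(multi: bool = False):
    """Load FLUX.1-dev with the Union ControlNet (needs a large CUDA GPU)."""
    # Heavy dependencies are imported lazily: `pip install torch diffusers`.
    import torch
    from diffusers import FluxControlNetModel, FluxControlNetPipeline
    from diffusers.models import FluxMultiControlNetModel

    controlnet = FluxControlNetModel.from_pretrained(
        UNION_MODEL, torch_dtype=torch.bfloat16)
    if multi:
        # The same Union weights are wrapped once and serve every control.
        controlnet = FluxMultiControlNetModel([controlnet])
    pipe = FluxControlNetPipeline.from_pretrained(
        BASE_MODEL, controlnet=controlnet, torch_dtype=torch.bfloat16)
    return pipe.to("cuda")


def generate(prompt, controls, width=1024, height=1024):
    """Generate one image from a prompt and one or more controls."""
    pipe = load_pipeline(multi=len(controls) > 1)
    return pipe(prompt, width=width, height=height,
                num_inference_steps=24, guidance_scale=3.5,
                **control_kwargs(controls)).images[0]
```

A call such as `generate("a cabin in the woods", [("depth", depth_img, 0.2), ("canny", canny_img, 0.4)])` would then apply depth and Canny guidance together, where `depth_img` and `canny_img` are preprocessed PIL images you supply.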

Notes:

- The current checkpoint is an early pre-release (the repository linked below is labeled alpha) and may not be fully trained, so expect some imperfections.

- Some control modes (such as grayscale control) are less effective, so prefer the high-effectiveness modes where possible.

- Compared with specialized single-function ControlNet models, the Union model may perform slightly worse on certain specific tasks, but it offers greater flexibility and versatility.

Resource links:

- Model download: InstantX/FLUX.1-dev-Controlnet-Union-alpha

| File name | Size | View link | Download link |
|---|---|---|---|
| diffusion_pytorch_model.safetensors | 6.6 GB | View | Download |

Note: After downloading the model, it's recommended to rename the file to a more descriptive name, such as "flux_union_controlnet.safetensors", for easier file management and identification in the future.

The Union ControlNet model gives the FLUX ecosystem a flexible image control tool, and it is especially useful when several control functions are needed from a single model. With continued optimization and updates, it has the potential to become one of the most comprehensive ControlNet models for FLUX.

InstantX Flux Canny ControlNet

In addition to the Union ControlNet model, InstantX also provides a ControlNet model specifically for Canny edge detection. This model focuses on using the Canny edge detection algorithm to control the image generation process, providing users with more precise edge control capabilities.

Main features:

- Focus on Canny edge detection: This model is specifically optimized for Canny edge detection, allowing for better processing and utilization of edge information.

- High-resolution training: The model was trained in a multi-scale environment with a total pixel count of 1024×1024, using a batch size of 8×8 for 30k steps.

- Compatible with FLUX.1: Designed for FLUX.1-dev, it integrates seamlessly into FLUX workflows.

- Uses bfloat16 precision: The model uses bfloat16, which reduces memory use and speeds up computation compared with float32 while keeping accuracy close.
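To make the "total pixel count" idea concrete, here is a small worked example of choosing an output size for a non-square aspect ratio while keeping the area near 1024×1024. It is only an illustration: the model's actual training buckets are not documented here, `size_for_aspect` is a hypothetical helper, and rounding each side to a multiple of 16 is an assumption based on the size constraints FLUX pipelines usually impose.

```python
import math


def size_for_aspect(aspect_w: int, aspect_h: int,
                    total_pixels: int = 1024 * 1024,
                    multiple: int = 16) -> tuple[int, int]:
    """Return (width, height) with roughly `total_pixels` pixels.

    Each side is rounded to the nearest multiple of `multiple`
    (assumed constraint), so the area is approximate, not exact.
    """
    ratio = aspect_w / aspect_h
    width = math.sqrt(total_pixels * ratio)
    height = width / ratio

    def snap(value: float) -> int:
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    for aspect in [(1, 1), (16, 9), (3, 4)]:
        print(aspect, size_for_aspect(*aspect))
```

For a 16:9 image this gives 1360×768, about 1.04 million pixels, which stays close to the 1024×1024 budget.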

Usage:

- Install the latest version of the Diffusers library.

- Load the Flux Canny ControlNet model and FLUX.1 base model.

- Prepare the input image and apply Canny edge detection.

- Use the detected edges as control input to generate the image.
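The steps above can be sketched as follows. Treat this as a hedged example rather than the official recipe: the repository id `InstantX/FLUX.1-dev-Controlnet-Canny` is assumed, `canny_control_image` and `generate` are hypothetical helpers, and the Canny thresholds, conditioning scale, step count, and guidance scale are example values to tune.

```python
BASE_MODEL = "black-forest-labs/FLUX.1-dev"
CANNY_MODEL = "InstantX/FLUX.1-dev-Controlnet-Canny"  # assumed repository id


def canny_control_image(image, low: int = 100, high: int = 200):
    """Return a 3-channel PIL edge map computed from a PIL input image."""
    # Lazy imports: `pip install opencv-python numpy pillow`.
    import cv2
    import numpy as np
    from PIL import Image

    gray = np.array(image.convert("L"))          # 8-bit single channel
    edges = cv2.Canny(gray, low, high)           # white edges on black
    return Image.fromarray(np.stack([edges] * 3, axis=-1))


def generate(prompt: str, source_image, scale: float = 0.6,
             steps: int = 28, guidance: float = 3.5):
    """Generate an image whose structure follows the source image's edges."""
    # Lazy imports: `pip install -U torch diffusers`; needs a large CUDA GPU.
    import torch
    from diffusers import FluxControlNetModel, FluxControlNetPipeline

    controlnet = FluxControlNetModel.from_pretrained(
        CANNY_MODEL, torch_dtype=torch.bfloat16)
    pipe = FluxControlNetPipeline.from_pretrained(
        BASE_MODEL, controlnet=controlnet, torch_dtype=torch.bfloat16)
    pipe.to("cuda")

    control = canny_control_image(source_image)
    return pipe(prompt, control_image=control,
                controlnet_conditioning_scale=scale,
                num_inference_steps=steps,
                guidance_scale=guidance).images[0]
```

Lower Canny thresholds keep more fine edges and constrain the result more tightly; a lower conditioning scale gives the prompt more freedom.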

Jasperai Flux.1-dev ControlNets Series

Jasperai has developed a series of ControlNet models for Flux.1-dev, designed to provide more precise control for AI image generation. This series includes surface normal, depth map, and super-resolution models, offering users a diverse set of creative tools.

You can view detailed information about these models on the Jasperai collection page on Hugging Face.

1. Surface Normal ControlNet Model

The Surface Normal ControlNet Model uses surface normal maps to guide image generation. It is optimized for surface normal input, so it makes better use of the geometric information on object surfaces.

Main features:

- Focuses on surface normal processing

- Provides precise geometric information of object surfaces

- Enhances image depth perception and realism

- Compatible with FLUX.1-dev

2. Depth Map ControlNet Model

The Depth Map ControlNet Model uses depth information to control image generation. It is optimized for depth map input, so it better captures the spatial structure of a scene.

Main features:

- Focuses on depth map processing

- Provides spatial structure information of scenes

- Improves image perspective and spatial sense

- Compatible with FLUX.1-dev

3. Super-resolution ControlNet Model

The Super-resolution ControlNet Model focuses on improving the quality of low-resolution images. This model can convert low-quality images into high-resolution versions, reconstructing and enhancing image details.

Main features:

- Focuses on image super-resolution processing

- Converts low-resolution images to high-resolution versions

- Reconstructs and enhances image details

- Compatible with FLUX.1-dev
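As a rough illustration of how the super-resolution model might be driven from Diffusers, the sketch below resizes a low-resolution image to the target size and passes it as the control image. Several things here are assumptions: the repository id `jasperai/Flux.1-dev-Controlnet-Upscaler`, the 4x factor, the empty prompt, the sampling parameters, and the choice to floor each side to a multiple of 16. `upscaled_size` and `upscale` are hypothetical helpers.

```python
BASE_MODEL = "black-forest-labs/FLUX.1-dev"
UPSCALER_MODEL = "jasperai/Flux.1-dev-Controlnet-Upscaler"  # assumed repo id


def upscaled_size(width: int, height: int, factor: int = 4,
                  multiple: int = 16) -> tuple[int, int]:
    """Target size after upscaling, floored to a multiple of `multiple`."""
    return (width * factor // multiple * multiple,
            height * factor // multiple * multiple)


def upscale(low_res_image, factor: int = 4):
    """Upscale a PIL image using the ControlNet as a detail-restoring guide."""
    # Lazy imports: `pip install -U torch diffusers`; needs a large CUDA GPU.
    import torch
    from diffusers import FluxControlNetModel, FluxControlNetPipeline

    controlnet = FluxControlNetModel.from_pretrained(
        UPSCALER_MODEL, torch_dtype=torch.bfloat16)
    pipe = FluxControlNetPipeline.from_pretrained(
        BASE_MODEL, controlnet=controlnet, torch_dtype=torch.bfloat16)
    pipe.to("cuda")

    width, height = upscaled_size(*low_res_image.size, factor=factor)
    # The control image is the naively resized input; the model refines it.
    control = low_res_image.resize((width, height))
    return pipe(prompt="", control_image=control,
                controlnet_conditioning_scale=0.6,
                num_inference_steps=28, guidance_scale=3.5,
                width=width, height=height).images[0]
```

Memory use grows with the output area, so large inputs may need a smaller factor or tiling.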

Each model targets a specific image processing need, so users can pick the one that matches the effect they want: tighter geometric control from surface normals, better spatial structure from depth maps, or higher output resolution from the super-resolution model.